6 Tips for AWS Cost Optimization

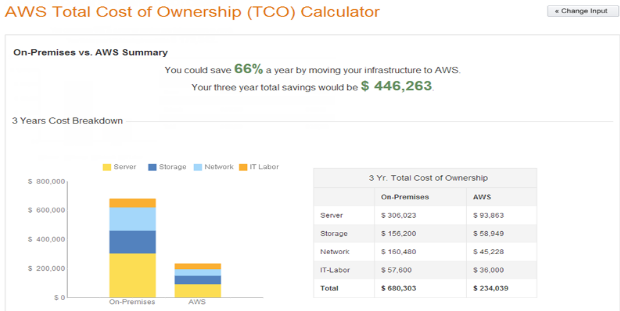

AWS offers a cost advantage over an on-premises data center or a co-location environment through its On-Demand and Reserved pricing. As the saying goes, “Reducing the overall cost is a high priority”, and that holds for any organization, big or small.

By using AWS we can lower IT, compute, storage, and caching costs. But once we are on AWS, how can we keep optimizing our costs? That is the subject of this blog.

While using AWS services we often become careless: instances are left running, volumes are left unattached, or other resources sit idle, all of which increase the overall bill. To catch such situations, AWS provides a service called AWS Trusted Advisor (TA), available with the Business Support plan. It calculates the cost generated by unused or underutilized resources and recommends corrective actions.

However, AWS Trusted Advisor comes with the Business Support plan, which is chargeable. Business Support is priced at 10% of the overall bill or $100, whichever is higher. So, to save some costs, you might first need to shell out some money.
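The pricing rule above is simple arithmetic. As a rough sketch that uses only the figures quoted in this post (10% of the bill with a $100 minimum; actual AWS pricing may differ), the fee can be estimated as follows. The function name is made up for illustration.

```python
def business_support_fee(monthly_bill, rate=0.10, minimum=100.0):
    """Estimate the support fee: a share of the bill or a flat minimum, whichever is higher.

    The default rate and minimum are the figures quoted in this post, not official pricing.
    """
    return max(monthly_bill * rate, minimum)


# Small bills pay the flat minimum; larger bills pay the percentage.
for bill in (500.0, 1000.0, 5000.0):
    print("bill $%.2f -> support fee $%.2f" % (bill, business_support_fee(bill)))
```

In other words, TA only pays for itself when the savings it uncovers exceed this fee.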

While working with several AWS accounts, I found that big players readily opt for Trusted Advisor because the savings it finds outweigh the 10% fee. However, TA is not popular among small players, who rely on their own manual checks instead. So, to avoid both that cost and the manual effort, this post introduces a script that runs similar checks.

But before that, let me touch on the areas that form the basis of this discussion. The way idleness is calculated is in line with AWS best practices, though you have the flexibility to adjust the thresholds to your needs.

The script reports underutilized or unused resources. It can be used to avoid unnecessary costs while working with AWS, and yes, it is free!

This script consists of 6 functions. We will understand them step by step below:

Note: All the functions below will work in all the regions.

1. Low Utilization EC2: The first and foremost resource to check is compute. Look for instances that are over-provisioned or show a very low utilization trend. The function fetches the daily average CPU utilization and network I/O of every instance for the past 14 days. An instance is declared low-utilization when CPU utilization is below 10% and network I/O is below 5 MB on at least 4 of the last 14 days; both conditions must hold on the same day. You can, however, set your own thresholds for idleness.

[code]
# Using CloudWatch to generate the metric statistics (boto 2, Python 2)
# CloudWatch timestamps are in UTC, so the time window is built with utcnow()
end = datetime.datetime.utcnow()
start = end - datetime.timedelta(days=14)
dims = {'InstanceId': str(b[i].id)}
cpuutil = mon.get_metric_statistics(86400, start, end, "CPUUtilization", 'AWS/EC2', 'Average', dimensions=dims)
networkout = mon.get_metric_statistics(86400, start, end, "NetworkOut", 'AWS/EC2', 'Sum', dimensions=dims)
networkin = mon.get_metric_statistics(86400, start, end, "NetworkIn", 'AWS/EC2', 'Sum', dimensions=dims)

# Index the network datapoints by timestamp, since they are not guaranteed to arrive in order
out_by_day = dict((p['Timestamp'], p['Sum']) for p in networkout)
in_by_day = dict((p['Timestamp'], p['Sum']) for p in networkin)

n = 0
# The loop variable is named point so that it does not overwrite the instance index i
for point in cpuutil:
    day = point['Timestamp']
    cpu_util = point['Average']
    total = out_by_day.get(day, 0) + in_by_day.get(day, 0)
    # Checking if the CPU utilization is less than 10% and the network I/O is less than 5 MB
    if cpu_util < 10 and total < 5242880:
        n = n + 1

# Report the instance once it has at least 4 low days, as described above
if n >= 4:
    print "Less Utilization"
    print n
    data = [r.name, str(b[i].id), "Low", "Low CPU Utilization and Low Network Input/Output rate"]
    csvwriter.writerow(data)
[/code]
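To make the rule easier to experiment with, here is a minimal sketch of the same decision logic with the CloudWatch calls left out. It assumes the daily figures have already been collected into plain tuples; the function and constant names are made up for illustration, and the sample data is invented.

```python
LOW_CPU_PERCENT = 10                    # daily average CPU threshold, in percent
LOW_NETWORK_BYTES = 5 * 1024 * 1024     # 5 MB of combined in + out traffic per day
MIN_LOW_DAYS = 4                        # low days needed to flag the instance


def count_low_days(daily_samples):
    """Count days on which both CPU and network I/O are below their thresholds.

    daily_samples: list of (avg_cpu_percent, network_in_bytes, network_out_bytes),
    one tuple per day.
    """
    low = 0
    for cpu, net_in, net_out in daily_samples:
        if cpu < LOW_CPU_PERCENT and (net_in + net_out) < LOW_NETWORK_BYTES:
            low += 1
    return low


def is_low_utilization(daily_samples):
    """True when the instance was quiet on at least MIN_LOW_DAYS days."""
    return count_low_days(daily_samples) >= MIN_LOW_DAYS


# Invented data: 4 quiet days followed by 10 busy days
samples = [(2.0, 1000, 2000)] * 4 + [(55.0, 9000000, 9000000)] * 10
print(is_low_utilization(samples))
```

Keeping the thresholds as named constants makes it easy to tune them to your own definition of idleness.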

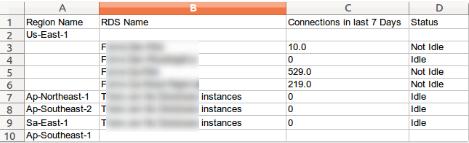

2. RDS Idle DB Instances: Database instances cost more than ordinary EC2 instances, so an idle one is more expensive too. The function checks whether each RDS instance is receiving any DB connections. If an RDS instance has had no DB connections in the last week, it is going to waste and should be reviewed.

[code]
for db in dbins:
    # Getting the statistics for DB Connections over the past 1 week (timestamps in UTC)
    end = datetime.datetime.utcnow()
    d = mon.get_metric_statistics(600, end - datetime.timedelta(days=7), end, "DatabaseConnections", 'AWS/RDS', 'Sum', dimensions={'DBInstanceIdentifier': [str(db.id)]})
    # Add up the connections across all datapoints (z is reset once per instance, not per datapoint)
    z = 0
    for j in d:
        z = z + j['Sum']
    if z > 0:
        data = ["", str(db.id.title()), z, "Not Idle"]
    else:
        data = ["", str(db.id.title()), "0", "Idle"]
    # Build the row first, then write it
    csvwriter.writerow(data)
[/code]

3. Underutilized Amazon EBS Volumes: Elastic Block Store volumes that come pre-attached to an instance are usually boot devices, which are deleted once the instance is terminated. However, EBS volumes keep incurring charges even while the instance is stopped. The function lists all the EBS volumes in all regions and flags those that are not attached to an instance or that have had little or no I/O in the last week. The cost of EBS is based on provisioned storage and, for some volume types, the number of IOPS per month, so it varies with the type of volume used.

Ex: For EBS General Purpose SSD the cost is $0.10 per GB-month, i.e.

For 20 GB = 20 * 0.10 = $2.00 per month

For EBS Provisioned IOPS SSD the cost covers both storage and IOPS, i.e.

For 20 GB = 20 * 0.125 + 0.065 * (No. of IOPS) per month
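The two formulas above can be written as a small calculator. This is only a sketch: the prices are the ones quoted in this post and will differ by region and over time, the function names are made up for illustration, and the 100 provisioned IOPS in the example is an arbitrary figure.

```python
def gp_ssd_monthly_cost(size_gb, price_per_gb=0.10):
    """General Purpose SSD: storage only."""
    return size_gb * price_per_gb


def piops_ssd_monthly_cost(size_gb, provisioned_iops, price_per_gb=0.125, price_per_iops=0.065):
    """Provisioned IOPS SSD: storage plus a charge per provisioned IOPS."""
    return size_gb * price_per_gb + provisioned_iops * price_per_iops


print("General Purpose SSD, 20 GB: $%.2f per month" % gp_ssd_monthly_cost(20))
print("Provisioned IOPS SSD, 20 GB, 100 IOPS: $%.2f per month" % piops_ssd_monthly_cost(20, 100))
```

Even a small Provisioned IOPS volume costs several times more than a General Purpose one, which is why idle volumes of that type are worth finding first.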

[code]
# Listing the CloudWatch metrics for volume read/write operations
# (runs once per volume a; the enclosing loop over volumes is not shown)
end = datetime.datetime.utcnow()
start = end - datetime.timedelta(days=7)
dims = {'VolumeId': str(a.id)}
read = mon.get_metric_statistics(86400, start, end, "VolumeReadOps", 'AWS/EBS', 'Sum', dimensions=dims)
# The period is 86400 seconds (1 day) for both metrics
write = mon.get_metric_statistics(86400, start, end, "VolumeWriteOps", 'AWS/EBS', 'Sum', dimensions=dims)

# Adding up the read and write operations over the whole week
z = sum(p['Sum'] for p in read)
x = sum(p['Sum'] for p in write)
total = z + x

if total > 7:
    print "IOPS are more than 7 in past 1 week", str(a.id)
else:
    print "IOPS are less than 7 in past 1 week for volume:", str(a.id)
    data = [str(r.name), str(a.id), "Idle", "IOPS are less than 7 in past 1 week"]
    csvwriter.writerow(data)

# In the full script, a region without volumes takes an else branch instead:
#     print "No Volumes in region:", r.name
[/code]

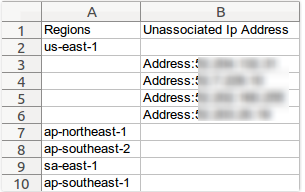

4. Unassociated Elastic IP Addresses: As we know, IPv4 addresses are being exhausted really fast, so we need to use them carefully until IPv6 is widely adopted. An Elastic IP incurs a charge when it has been allocated but is not attached to any instance, i.e. it is left unassociated. This function lists all the IPs that are left unattached.

[code]
for address in addresses:
    ins_id = address.instance_id
    # Checking whether the IP is associated with an instance (None or empty means it is not)
    if not ins_id:
        data = ["", address]
        csvwriter.writerow(data)
[/code]

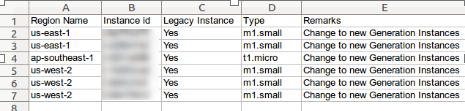

5. Legacy Instance Type Usage: Legacy instances are the older instance classes that were offered before the new generation instances were released. New generation instances perform better than legacy ones and are also cheaper for the same configuration. This function lists all running instances that use a legacy instance type, along with the instance class, and recommends replacing them with new generation instances.

[code]
# Checking the instance type; c holds the instance class that is compared against the legacy list
if c in ("t1", "m1", "c1", "hi1", "m2", "cr1", "hs1"):
    data = [str(reg.name), str(d.id), "Yes", str(e), "Change to new Generation Instances"]
    csvwriter.writerow(data)
[/code]
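The snippet above compares only the instance class. As a small sketch of how that class can be derived from a full instance type such as "m1.small", assuming the usual "class.size" naming, something like the following would work. The helper names are made up for illustration; the legacy list is the one used in the check above.

```python
# Legacy instance classes, taken from the check above
LEGACY_FAMILIES = frozenset(["t1", "m1", "c1", "hi1", "m2", "cr1", "hs1"])


def instance_family(instance_type):
    """Return the part before the dot, e.g. "m1" for "m1.small"."""
    return instance_type.split(".")[0]


def is_legacy(instance_type):
    """True when the instance type belongs to one of the legacy classes."""
    return instance_family(instance_type) in LEGACY_FAMILIES


for itype in ("m1.small", "t2.micro", "hs1.8xlarge"):
    print("%s -> legacy: %s" % (itype, is_legacy(itype)))
```

Comparing the whole class string, rather than a prefix, avoids mistaking "m10"-style names for "m1" should such classes ever appear.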

6. Idle Load Balancers: Next, we should check for Elastic Load Balancers that are lying idle. Any ELB that is created and configured accrues charges. The function lists all the ELBs that are not attached to an instance, have no healthy instance, or have received fewer than 100 requests in the past 7 days.

[code]
# in_service is a placeholder name for the script's instance health check, which is not shown here
if in_service:
    # Listing all the statistics for the metric RequestCount of Load Balancers (timestamps in UTC)
    end = datetime.datetime.utcnow()
    d = mon.get_metric_statistics(600, end - datetime.timedelta(days=7), end, "RequestCount", 'AWS/ELB', 'Sum', dimensions={'LoadBalancerName': str(e.name)})
    # Add up the requests across all datapoints (z is reset once, not per datapoint)
    z = 0
    for j in d:
        z = z + j['Sum']
    if z > 100:
        print "The", str(e.name), "is not idle for instance", e.instances[i]
    else:
        print "The", str(e.name), "is idle for instance", e.instances[i]
        data = [str(r.name), str(e.name), e.instances[i], "IDLE", "Number of Requests are less than 100 for past 7 days"]
        csvwriter.writerow(data)
else:
    print "The instance is Out of Service", str(e.instances[i])
    data = [str(r.name), str(e.name), e.instances[i], "IDLE", "Instance is Out of Service"]
    csvwriter.writerow(data)
[/code]
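The three idle conditions described for load balancers can be summarized in one helper. This is a sketch rather than the script's actual code: it assumes the instance health states and the 7-day request total have already been fetched, and the function name and reason strings are made up for illustration.

```python
def elb_idle_reason(instance_states, request_count, min_requests=100):
    """Return a reason string if the load balancer looks idle, otherwise None.

    instance_states: health states of the registered instances,
                     e.g. ["InService", "OutOfService"]
    request_count:   total requests over the past 7 days
    """
    if not instance_states:
        return "No instances attached"
    if "InService" not in instance_states:
        return "No healthy instances"
    if request_count < min_requests:
        return "Fewer than %d requests in the past 7 days" % min_requests
    return None


print(elb_idle_reason([], 0))
print(elb_idle_reason(["OutOfService"], 5000))
print(elb_idle_reason(["InService"], 12))
print(elb_idle_reason(["InService", "OutOfService"], 5000))
```

Checking the conditions in this order reports the most fundamental problem first: a load balancer with no instances is idle regardless of its request count.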

All 6 use cases are combined in a single script that writes its output to a CSV file. Download the script from here.

Run the script on a regular basis to avoid unnecessary costs on the bill.

Prerequisites for running the script:

- Boto (version 2.40.0) should be installed on the system and configured

- AWS account credentials (Access Key ID, Secret Key) with READ ONLY access

- To review the created CSV file, the xlwt package must be installed

Run the script with: python Cost_Optimization.py

Note: The Script will take about 20 minutes to execute.

The output will be somewhat like:

Hope this was useful. I will be coming up with more such use-cases.

I have ran the script, all data captured in excel sheet except Low cpu utilization.

Hi Kamal,

I’ll check the script for that and update it to you.

Low EC2 instance utilization is not captured. The error is given below

data=[r.name,str(b[i].id),"Low","Low CPU Utilization and Low Network Input/Output rate"]

TypeError: list indices must be integers, not Datapoint

Useful Blog, Would like to test it in real scenario