Create Extended EBS Backed LVM Volume on EC2

Here is one of my use cases. Jenkins' disk usage had been growing over the past few weeks, and we were about to hit the 50GB capacity of the Elastic Block Store (EBS) volume attached to our Amazon EC2 instance. This affected many of our developers, who use Jenkins to test their build packages in different environments. Every time we had to increase the capacity, we had to schedule a maintenance/downtime window: the Jenkins service was stopped, an EBS snapshot of the data volume was created, and a new, larger volume was created and attached to the instance. On top of that, network throughput between the EC2 instance and the single EBS volume was a bottleneck that affected overall system performance.

To fix these issues, we decided to use the Logical Volume Manager (LVM), which makes it easy to expand a volume by adding one or more EBS volumes to it. Spreading data across multiple EBS volumes can also increase throughput between the EC2 instance and EBS.

In this post, I walk through setting up LVM on Ubuntu in the AWS EC2 environment, and show how to add storage (and remove it, where possible) without a maintenance window or downtime.

Getting Started

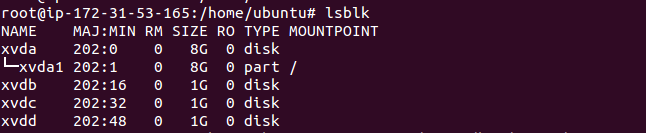

- The first step is to launch an Ubuntu EC2 instance from a base image, with an IAM role/instance profile attached; its root filesystem is an EBS volume.

- In my case, we attach three 1GB SSD EBS volumes, which are given the device names ‘/dev/xvdb’, ‘/dev/xvdc’ and ‘/dev/xvdd’. Make sure the LVM utilities are installed:
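A minimal shell sketch of this preparation step, assuming an Ubuntu instance with apt, where the LVM tools (“pvcreate”, “vgcreate”, “lvcreate” and friends) come from the “lvm2” package. The “DRY_RUN” switch and the “run” and “prepare_host” helpers are hypothetical conveniences of this sketch, not part of LVM: by default the script only prints the commands, and running it as root with “DRY_RUN=0” executes them.

```shell
#!/usr/bin/env bash
# Sketch: prepare an Ubuntu EC2 instance for LVM.
# Assumption: Ubuntu with apt; the lvm2 package provides pvcreate, vgcreate, lvcreate, etc.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

prepare_host() {
  run apt-get update
  run apt-get install -y lvm2
  # List block devices to confirm the names the attached EBS volumes were given.
  run lsblk
}

prepare_host
```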

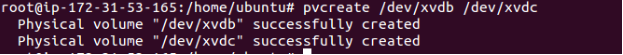

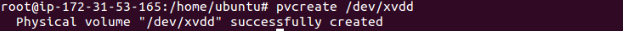

- Initialize the additional volumes for use with LVM. The “pvcreate” command initializes a whole disk, or a partition on a disk, so that it can be used by LVM. Syntax: “pvcreate <device-a> <device-b> <device-c> <device-n>”
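A sketch of this step, assuming the device names used in this post (on newer Nitro-based instance types, EBS volumes usually appear as NVMe devices such as “/dev/nvme1n1”, so confirm with “lsblk” first). The “init_pvs” function and the “run”/“DRY_RUN” helper are hypothetical: the script prints the commands by default and executes them only when run as root with “DRY_RUN=0”. Only run “pvcreate” on empty volumes.

```shell
#!/usr/bin/env bash
# Sketch: initialize the attached EBS volumes as LVM physical volumes.
# Assumption: device names /dev/xvdb, /dev/xvdc, /dev/xvdd as used in this post.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

init_pvs() {
  # pvcreate writes an LVM label to each device; use it only on empty volumes.
  run pvcreate "$@"
  # pvs prints a one-line summary per physical volume.
  run pvs "$@"
}

init_pvs /dev/xvdb /dev/xvdc /dev/xvdd
```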

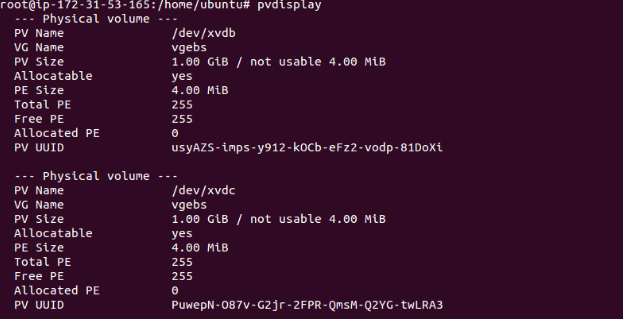

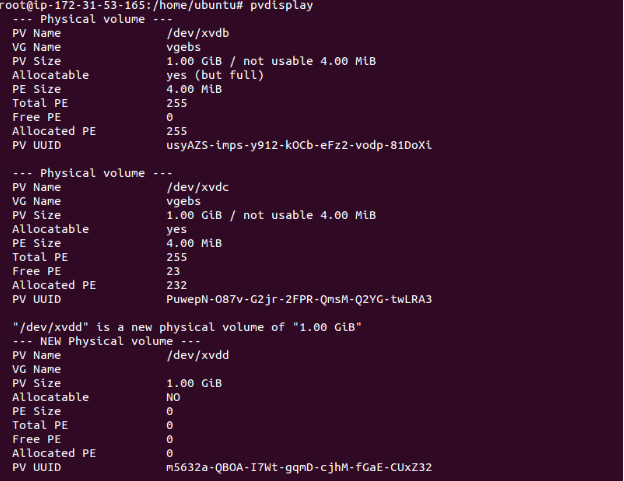

- Use the “pvdisplay” command to display information about the physical volumes:

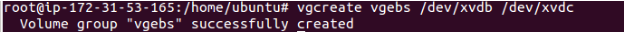

- Next, create a volume group called “vgebs” that includes our two disks:

- Once the LVM volume group is created, use the “vgdisplay” command to show its attributes.
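A sketch of creating and inspecting the volume group. The “vgebs” name comes from this post; the two device names are illustrative assumptions, and “create_vg” plus the “run”/“DRY_RUN” helper are hypothetical (commands are printed unless the script runs as root with “DRY_RUN=0”).

```shell
#!/usr/bin/env bash
# Sketch: create the "vgebs" volume group and show its attributes.
# Assumption: the two disks are /dev/xvdb and /dev/xvdc (illustrative names).
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

create_vg() {
  local vg="$1"
  shift
  # The remaining arguments are the physical volumes that make up the group.
  run vgcreate "$vg" "$@"
  run vgdisplay "$vg"
}

create_vg vgebs /dev/xvdb /dev/xvdc
```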

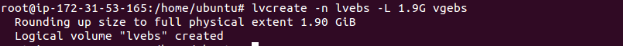

- Create the logical volume. Once the LVM volume group is created, it’s time to create logical volumes. Syntax: sudo lvcreate --name <logical-volume-name> --size <size-of-volume> <volume-group-name>
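Filled in with concrete values, the command might look like the sketch below. The names “vgebs” and “lvebs” match the “/dev/mapper/vgebs-lvebs” device used later in this post, but the 1.9G size is a hypothetical value, and “create_lv” and the “run”/“DRY_RUN” dry-run helper are illustrative (set “DRY_RUN=0” and run as root to execute).

```shell
#!/usr/bin/env bash
# Sketch: create logical volume "lvebs" in volume group "vgebs".
# Assumption: the 1.9G size is a hypothetical example value.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

create_lv() {
  local vg="$1" lv="$2" size="$3"
  run lvcreate --name "$lv" --size "$size" "$vg"
  # LVM exposes the volume as /dev/<vg>/<lv> and /dev/mapper/<vg>-<lv>
  # (hyphens inside the names are doubled in the /dev/mapper form).
  echo "device: /dev/mapper/${vg}-${lv}"
}

create_lv vgebs lvebs 1.9G
```

If you would rather give the logical volume all the free space in the group, “lvcreate” also accepts “--extents 100%FREE” in place of “--size”.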

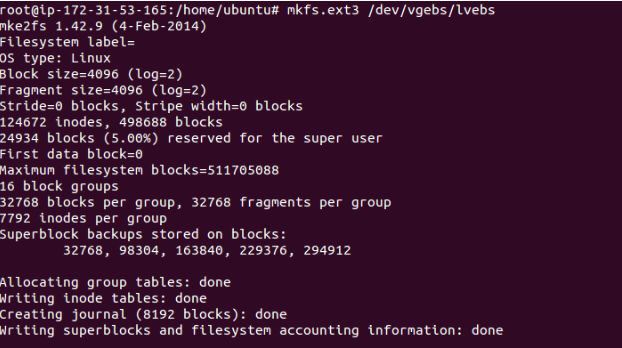

- This creates a new device at “/dev/mapper/vgebs-lvebs”. This is our LVM volume, and we can now create a filesystem on it and mount it. A logical volume can be formatted with any filesystem type, such as ext3, ext4 or XFS. For ext3:

mkfs.ext3 <logical-volume-path>

- Mount the logical volume using the “mount” command:

- To keep the mount point available after a reboot, add an entry for it to ‘/etc/fstab’, then check the status of the mounted volume:
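A sketch of the mount and ‘/etc/fstab’ steps, assuming ext3 and the “/var/lib/jenkins” mount point checked later in this post. The “defaults,nofail” options are an assumption (“nofail” lets the instance finish booting even if the volume is missing), and “fstab_entry”, “mount_lv” and the “run”/“DRY_RUN” helper are hypothetical: commands are printed unless the script runs as root with “DRY_RUN=0”. Mounting over an existing directory hides its current contents, so copy any existing Jenkins data onto the new volume first.

```shell
#!/usr/bin/env bash
# Sketch: mount the logical volume and make the mount persistent.
# Assumptions: ext3 filesystem, mount point /var/lib/jenkins, fstab options "defaults,nofail".
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# Build an fstab line: device, mount point, fs type, options, dump flag, fsck pass.
fstab_entry() {
  printf '%s %s %s defaults,nofail 0 2\n' "$1" "$2" "$3"
}

mount_lv() {
  local dev="$1" mnt="$2" fstype="$3" entry
  run mkdir -p "$mnt"
  run mount "$dev" "$mnt"
  entry="$(fstab_entry "$dev" "$mnt" "$fstype")"
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ append to /etc/fstab: $entry"
  else
    # Append only if the device is not already listed in /etc/fstab.
    grep -qs "^$dev " /etc/fstab || echo "$entry" >> /etc/fstab
  fi
  # mount -a mounts everything in /etc/fstab, so mistakes in the new line show up now.
  run mount -a
  run df -h "$mnt"
}

mount_lv /dev/mapper/vgebs-lvebs /var/lib/jenkins ext3
```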

- Now suppose we are running out of disk space on the mount point and need to grow it. Our first step is to create two more EBS volumes (1GB each) and attach them to the EC2 instance. After the new volumes are attached, check “dmesg” for the exact device mapping (EC2 instances may sometimes change device names), and initialize the new disks as physical volumes for LVM:
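A sketch of identifying and initializing the new disks. It uses “lsblk” (an alternative to reading “dmesg”) to show the current device names; “/dev/xvde” and “/dev/xvdf” are hypothetical names for the two new volumes, and “init_new_pvs” and the “run”/“DRY_RUN” helper are illustrative. Commands are only printed unless the script runs as root with “DRY_RUN=0”.

```shell
#!/usr/bin/env bash
# Sketch: confirm the new device names, then label the new disks for LVM.
# Assumption: /dev/xvde and /dev/xvdf are hypothetical names for the new volumes.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

init_new_pvs() {
  # Check which device names the kernel assigned before touching anything.
  run lsblk
  run pvcreate "$@"
}

init_new_pvs /dev/xvde /dev/xvdf
```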

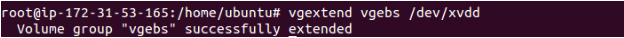

- Then add the new physical volumes to our existing “vgebs” volume group:
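As a sketch, with “/dev/xvde” and “/dev/xvdf” standing in (hypothetically) for the new physical volumes; “extend_vg” and the “run”/“DRY_RUN” helper are illustrative, and the commands are printed unless the script runs as root with “DRY_RUN=0”.

```shell
#!/usr/bin/env bash
# Sketch: add new physical volumes to the existing "vgebs" volume group.
# Assumption: /dev/xvde and /dev/xvdf are hypothetical device names.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

extend_vg() {
  local vg="$1"
  shift
  run vgextend "$vg" "$@"
  # vgs shows the group's new total and free size.
  run vgs "$vg"
}

extend_vg vgebs /dev/xvde /dev/xvdf
```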

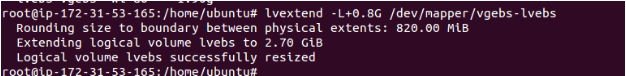

- Now extend the LVM volume to use the whole size of the group. In theory, we would “lvextend” by the full 800MB (0.8G) that was added, but LVM reserves some space for its own metadata, so we must leave a few MiB unallocated:
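A sketch of this step that swaps the hand-computed size for a different technique: “lvextend -l +100%FREE” asks LVM to add every free extent left in the group, so the metadata overhead is accounted for automatically. “extend_lv” and the “run”/“DRY_RUN” helper are hypothetical; commands are printed unless the script runs as root with “DRY_RUN=0”.

```shell
#!/usr/bin/env bash
# Sketch: grow the logical volume into all remaining free space in its group.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

extend_lv() {
  local lv="$1"  # in VG/LV form, e.g. vgebs/lvebs
  # +100%FREE adds every free extent in the volume group, so the
  # metadata overhead never has to be subtracted by hand.
  run lvextend -l +100%FREE "$lv"
  run lvs "$lv"
}

extend_lv vgebs/lvebs
```

Adding “-r” (“--resizefs”) to “lvextend” would also grow the filesystem in the same step, making the separate “resize2fs” step unnecessary.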

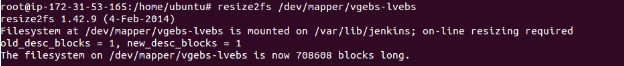

- We can now use “resize2fs” to grow the filesystem to the end of the LVM volume:
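A sketch of the resize with a size check before and after, assuming an ext3 or ext4 filesystem (which “resize2fs” can grow while it is mounted) on the “/var/lib/jenkins” mount point. “grow_fs” and the “run”/“DRY_RUN” helper are hypothetical; commands are printed unless the script runs as root with “DRY_RUN=0”.

```shell
#!/usr/bin/env bash
# Sketch: grow the mounted ext3/ext4 filesystem to fill the enlarged logical volume.
# Assumption: device /dev/mapper/vgebs-lvebs mounted at /var/lib/jenkins.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

grow_fs() {
  local dev="$1" mnt="$2"
  run df -h "$mnt"
  # With no size argument, resize2fs grows the filesystem to fill the whole device.
  run resize2fs "$dev"
  run df -h "$mnt"
}

grow_fs /dev/mapper/vgebs-lvebs /var/lib/jenkins
```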

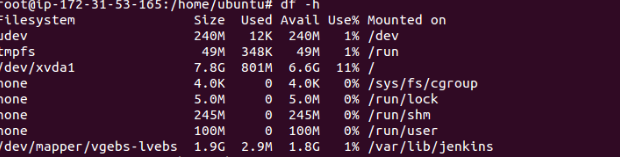

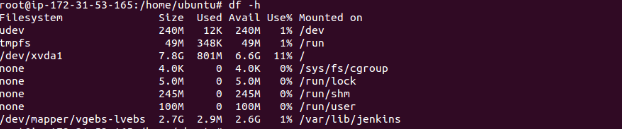

- Check the status of the mount point “/var/lib/jenkins”. The size of the device “/dev/mapper/vgebs-lvebs” has increased from 1.9GB to 2.7GB:

Once the LVM volumes are created and mounted, we can use them like normal volumes. In addition, LVM offers:

- Better performance – If data is striped across multiple EBS volumes with LVM, we can combine the throughput of several volumes (up to the instance's own EBS bandwidth limit) instead of being limited to what a single EBS volume delivers.

- Ability to grow – We can expand the volume at any time as needed. More EBS volumes can be added to the existing LVM volume group instead of snapshotting an EBS volume and restoring it to a larger one.

- EBS volume snapshots – We need to ensure that no disk operations are happening on the EBS volumes while the snapshots are taken. To do that, suspend all I/O (reads and writes) on the LVM volume with the “dmsetup” command, passing the volume's device-mapper name (for example “vgebs-lvebs”).

Syntax to suspend: # dmsetup suspend <lvm-volume-name>

Syntax to resume: # dmsetup resume <lvm-volume-name>
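A sketch of a snapshot run for a multi-volume LVM setup. It assumes the AWS CLI is installed, the instance role is allowed to call “ec2:CreateSnapshot”, and nothing the script needs lives on the suspended volume; the volume IDs are placeholders, and “snapshot_lv” and the “run”/“DRY_RUN” helper are hypothetical. Commands are printed unless the script runs as root with “DRY_RUN=0”. The device is resumed even if a snapshot request fails.

```shell
#!/usr/bin/env bash
# Sketch: snapshot every EBS volume behind an LVM volume while its I/O is suspended.
# Assumptions: AWS CLI installed, instance role allowed ec2:CreateSnapshot,
# and the vol-EXAMPLE IDs below are placeholders for the volumes in "vgebs".
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = execute them (run as root)
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

snapshot_lv() {
  local dm_name="$1" vol rc=0
  shift
  # Flush pending I/O and hold new reads and writes until resume.
  run dmsetup suspend "$dm_name"
  for vol in "$@"; do
    # Record a failure instead of exiting, so the device is always resumed.
    run aws ec2 create-snapshot --volume-id "$vol" --description "lvm-$dm_name" || rc=$?
  done
  run dmsetup resume "$dm_name"
  return "$rc"
}

snapshot_lv vgebs-lvebs vol-EXAMPLE1 vol-EXAMPLE2 vol-EXAMPLE3
```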

In short, an LVM volume can be backed up with EBS snapshots, as long as LVM volume operations are suspended while the snapshots are taken.

I hope this post helps you understand how to extend an EBS-backed LVM volume.

Assuming you’ve been taking EBS snapshots per the recommended steps above – after suspending the logical volume – really curious to know how you’d recover if you have 3 ebs volumes that make up your volume group and one of the volumes failed? Since the data is LVM-managed, would solely restoring the snapshot be enough to recover?

Hi Ankit,

What if I have already mounted /dev/xvdf to /mnt?

/dev/xvdf is an EBS volume of 50GB.

But I simply attached it and did not run any pv commands.

Now, if I run out of space, how will this help? I will have to create a new volume; I don’t see any other way.

Stop the instance and create a snapshot of the EBS volume. From the snapshot, create a new volume of the size you need. Detach the old volume, attach the newly created one, and start the instance.