From Chaos to Clarity: How We Fixed Jenkins Without Starting Over

Introduction

At To The New, we help customers across various industries develop cutting-edge, scalable infrastructure. However, you must first tame the beast before you can scale anything, and in this case, the beast was Jenkins. Jenkins setups that have grown disorganized over time are a problem for many organizations. Engineers spend more time troubleshooting than building, pipelines fail silently, and jobs, scripts, and secrets are scattered across teams. This is a problem that affects the entire IT industry, not just one client. Although CI/CD systems are intended to speed up software delivery, they can easily become broken and dangerous, and can choke creativity, if they are not properly structured.

Jenkins: The Client Story

We were brought in to help a client who had been relying on Jenkins for years. With each team contributing their jobs, configurations, secrets, users, and scripts, it had gradually become a mess. It was barely holding together, but somehow it was working. Imagine a house being constructed room by room without any blueprints, without any labels on the wires or pipes, and without anyone knowing what goes where. That’s what their Jenkins looked like.

In this blog, I’ll discuss how we helped them transition from a messy setup to a clean, secure, and modern CI/CD system without breaking production or forcing teams to rewrite everything from scratch.

Problem Statement

Before making any changes, we needed to understand what we were dealing with. And to be honest, it was quite overwhelming:

- 400+ Jenkins jobs that were created manually without any structure or proper documentation

- Hundreds of freestyle jobs with inline shell scripts, most of them written inconsistently by different engineers.

- Missing version control – lots of Jenkins pipelines only existed inside Jenkins, editable only from the UI. So if Jenkins

- Hardcoded secrets – including production database passwords sitting right inside job configurations and visible in the Jenkins console output.

- No logging, no notifications, no audit trail — if a job failed silently at 2 am, no one knew until the next morning

- Centralized admin credentials were used to log in to Jenkins, with no other security measures in place.

Our Approach: Improve Gradually, Without Disrupting Delivery

We knew we couldn’t just recreate everything and start over. That would be slow, risky, and difficult to gain support for. Instead, we concentrated on making small, gradual improvements so that nothing broke in production.

We aimed to build the client’s confidence while preserving speed, security, and maintainability.

Step 1: Discovery Phase

Discovery is an important phase in each project. So we started by exporting every single Jenkins job and going through them one by one. It was slow work, but worth it.

For each job, we asked:

- Is this job still being used?

- Who’s responsible for it (if anyone)?

- What does it do?

- And is it even working?

This gave us a clearer picture of the Jenkins setup than anyone had seen in years.

And honestly, it was eye-opening. We discovered more than 100 jobs that hadn’t run in over a year: some completely broken, others with no identifiable owner. Some were duplicates, providing the same functionality in a slightly different way. Removing those gave us much-needed breathing room and reduced the risk of unintentional breakages later.
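The staleness check described above can be sketched in a few lines of Python. This is a minimal illustration, not the tooling we used on the project: it assumes the job inventory has already been exported as a list of records shaped like the output of the Jenkins JSON API (for example `api/json?tree=jobs[name,lastBuild[timestamp]]`), and the job names, the `classify_jobs` helper, and the 365-day threshold are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def classify_jobs(jobs, now, stale_after_days=365):
    """Split job records into 'active', 'stale', and 'never_built' name lists."""
    cutoff = now - timedelta(days=stale_after_days)
    report = {"active": [], "stale": [], "never_built": []}
    for job in jobs:
        last_build = job.get("lastBuild")
        if not last_build:
            # No build record at all: a candidate for an ownership check.
            report["never_built"].append(job["name"])
            continue
        # Jenkins reports build timestamps in milliseconds since the Unix epoch.
        built_at = datetime.fromtimestamp(
            last_build["timestamp"] / 1000, tz=timezone.utc
        )
        bucket = "stale" if built_at < cutoff else "active"
        report[bucket].append(job["name"])
    return report


def _to_millis(moment):
    """Convert a datetime to epoch milliseconds, the unit Jenkins uses."""
    return int(moment.timestamp() * 1000)


# Hypothetical sample inventory, standing in for a real export.
NOW = datetime(2024, 6, 1, tzinfo=timezone.utc)
SAMPLE_JOBS = [
    {
        "name": "payments-deploy",
        "lastBuild": {"timestamp": _to_millis(datetime(2024, 5, 30, tzinfo=timezone.utc))},
    },
    {
        "name": "legacy-report",
        "lastBuild": {"timestamp": _to_millis(datetime(2022, 1, 15, tzinfo=timezone.utc))},
    },
    {"name": "old-experiment", "lastBuild": None},
]

REPORT = classify_jobs(SAMPLE_JOBS, NOW)
print(REPORT)
```

A report like this only narrows the list. Whether a stale job is truly dead still needs a human answer to the ownership questions above.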

Step 2: Moving the Right Jobs to Git

Once we had a full inventory of Jenkins jobs, we focused on the ones that mattered — the pipelines that directly impacted production or were tied to business-critical workflows. We didn’t try to move everything at once. Instead, we handpicked the top jobs and refactored them into Jenkinsfile pipelines, stored and versioned in Git. That simple lift and shift alone made a big difference. For the first time:

- Teams could raise pull requests to review pipeline changes.

- The build logic was no longer trapped inside the Jenkins UI.

- Pipelines became consistent and transparent — no more guessing what was happening behind the scenes.

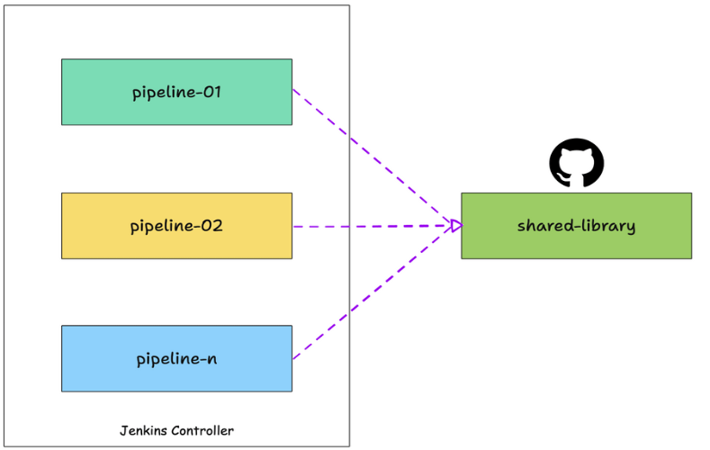

Over time, as more teams adopted this approach, we saw a pattern in the steps most jobs followed: building, scanning, testing, notifying, deploying, and uploading artifacts. So we created a shared Jenkins library to handle all that common logic.

Now, teams didn’t have to write the same code logic again and again. They could just use battle-tested functions that worked the same way across the board.

Step 3: Security In, Security Out

One of the biggest risks we spotted during the discovery phase was how secrets were being handled in jobs. Many jobs had sensitive credentials, even production database passwords, hardcoded in plain text. It wasn’t just unsafe; it was a compliance nightmare waiting to happen.

We tackled this in two ways:

- Integrated Jenkins with AWS Secrets Manager, so credentials could be pulled securely at runtime.

- Enabled role-based access control (RBAC) — engineers could run pipelines, but only a select few could view or update sensitive configs.

These changes made the Jenkins setup far more secure and gave our security team much-needed peace of mind. Now, when someone asked, “Who has access to this?” — we had an answer.
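Before secrets can be moved to a secrets manager, the hardcoded ones have to be found. The sketch below shows one way to scan the text of an exported job configuration for literal credential assignments. It is an illustration under stated assumptions: the `SECRET_PATTERN` keywords, the `find_hardcoded_secrets` helper, and the sample configuration are hypothetical, and a simple regex like this will miss secrets with unusual names, so treat its output as a starting list rather than proof that a job is clean.

```python
import re

# Hypothetical keyword list; tune it to the naming habits in your own jobs.
SECRET_PATTERN = re.compile(
    r"(?i)\b([A-Z0-9_]*(?:password|passwd|secret|token|api_key)[A-Z0-9_]*)"
    r"\s*[=:]\s*['\"]?([^\s'\"<]+)"
)


def find_hardcoded_secrets(config_text):
    """Return (line_number, variable_name) pairs for literal secret assignments.

    The secret value itself is deliberately left out of the result so the
    report does not leak it a second time.
    """
    findings = []
    for line_number, line in enumerate(config_text.splitlines(), start=1):
        for match in SECRET_PATTERN.finditer(line):
            name, value = match.group(1), match.group(2)
            # A value starting with "$" is a variable reference, not a literal.
            if value.startswith("$"):
                continue
            findings.append((line_number, name))
    return findings


# Hypothetical fragment of a freestyle job's shell step.
SAMPLE_CONFIG = "\n".join(
    [
        "<command>export DB_PASSWORD=hunter2",
        'mysql -u app -p"$DB_PASSWORD"',
        "export API_TOKEN=${VAULT_TOKEN}",
        'API_SECRET: "s3cr3t"',
        "echo done</command>",
    ]
)

FINDINGS = find_hardcoded_secrets(SAMPLE_CONFIG)
print(FINDINGS)
```

Reporting only the line number and variable name keeps the scan results safe to share in a ticket or pull request.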

Step 4: Built a Self-Service Onboarding Template

We knew we couldn’t be the bottleneck. So we created a plug-and-play Jenkins pipeline template with:

- Docker-based agents for consistent build environments

- Built-in Teams and email notifications

- Git commit metadata for better traceability.

- Artifact uploads to S3

- Optional deployment logic

Teams could now onboard new services in under 30 minutes, without needing help from DevOps every time.

Step 5: Cleaned House and Organized Jenkins

Once we had critical jobs migrated and new standards in place, we turned our attention to cleanup:

- Archived unused jobs

- Deleted truly dead ones

- Introduced a folder structure based on environments.

- Standardized naming conventions (e.g., team-appname-environment)

- Added labels and descriptions for every job

The Jenkins UI, once chaotic, now looked like a proper dashboard. Anyone could find what they needed without guessing.
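A naming convention only holds if it is checked. As a rough sketch, a validator for the team-appname-environment pattern could look like the following. The allowed environment names, the lowercase-only rule, and the `parse_job_name` helper are assumptions made for illustration, not the client's actual rules.

```python
import re

# Hypothetical environment names; replace with the ones your folders use.
ALLOWED_ENVIRONMENTS = ("dev", "staging", "prod")

# team-appname-environment, where the app name may itself contain hyphens.
JOB_NAME_PATTERN = re.compile(
    r"^(?P<team>[a-z0-9]+)"
    r"-(?P<app>[a-z0-9]+(?:-[a-z0-9]+)*)"
    r"-(?P<env>" + "|".join(ALLOWED_ENVIRONMENTS) + r")$"
)


def parse_job_name(name):
    """Return the team, app, and env parts of a job name, or None if invalid."""
    match = JOB_NAME_PATTERN.match(name)
    return match.groupdict() if match else None


for candidate in ("payments-checkout-api-prod", "Deploy_Final_v2", "payments-prod"):
    print(candidate, "->", parse_job_name(candidate))
```

Running a check like this over the job inventory gives a quick list of names that still need renaming.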

The Results: 3 Months Later

Three months into the transformation, here’s what we achieved:

- Over 70% of jobs migrated to version-controlled pipelines

- All secrets moved to AWS Secrets Manager

- Jenkins job failures dropped by 60%

- New service onboarding time cut by two-thirds.

But more importantly, the DevOps team regained control. Jenkins went from being a fragile, scary black box to a platform teams could trust and work with confidently.

What We Learned Along the Way

We walked away with some valuable lessons:

- You don’t have to throw Jenkins away. If it’s working for your team, modernize it step by step.

- Version control isn’t just about application code — your CI/CD logic needs it too.

- A shared library is worth the effort. It enforces consistency, speeds up changes, and reduces duplication.

- CI/CD transformation isn’t a tooling problem — it’s a mindset and discipline shift.

- Proper structure and documentation are just as crucial as the pipelines themselves. Your CI/CD shouldn’t be treated like disposable code.

Conclusion: Resolving the Bigger Problem

Apart from fixing one messy Jenkins setup, this approach helps organizations to:

- Reclaim control over CI/CD pipelines

- Reduce security and operational risk

- Enable quicker, more reliable software delivery

- Scale DevOps practices safely across multiple teams

Modernizing CI/CD is about making pipelines reliable, secure, and maintainable so teams can focus on innovation instead of troubleshooting issues.

Wrapping Up

If your Jenkins setup feels like it’s just one misclick away from disaster, you’re not alone. Many companies start with Jenkins, and over time, it turns into a mess. How you react is what matters. We at To The New are experts at ensuring that the transition is secure, safe, and long-lasting, with no needless rewrites. If you’re dealing with an untidy Jenkins setup, broken pipelines, or slow onboarding, we’re here to help. Our certified DevOps engineers ensure a smooth transition and no downtime during migrations.

Want to chat about CI/CD modernization? Reach out to us at https://www.tothenew.com/contact-us. Let’s make Jenkins work for you, not the other way around.