Chatbot vs AI Agent: How QA Ensures Quality in the New Era of AI Solutions

Let’s be honest – we’ve all dealt with a bot that was more annoying than helpful. Maybe it repeated the same answer no matter how you phrased your question. Now imagine a system that not only responds but actually acts: booking your ticket, applying a discount, or adding an event to your calendar.

That’s the difference between a chatbot and an AI agent.

And here’s where QA comes in!

As these systems evolve, testers must go beyond checking scripted responses. We need to ensure that conversational AI is accurate, ethical, and, most importantly, human-friendly.

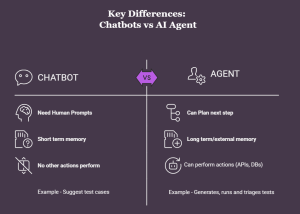

Chatbot vs AI Agent: What’s the Difference?

Chatbots → Handle structured interactions and straightforward user queries.

AI Agents → Manage complex activities, execute automated processes, understand context, and make decisions.

Differentiating a Chatbot from an Agent

For instance, while testing a website’s chatbot for cloud cost queries, we found that the same intent produced different answers depending on how the user phrased it. “What’s my AWS bill?” and “How much am I spending on cloud this month?” mean the same thing, but the bot responded differently.

This is where an AI agent shows its strength. Instead of being confused by wording, it can pull real-time billing data, compare it with historical usage, and even suggest ways to cut costs.

For QA, this means testing is no longer about “Did it answer?” but “Did it understand and act correctly, consistently, and fairly?”

The Evolution of Conversational AI:

Originally, conversational AI meant mostly simple chatbots that answered FAQs or guided users through fixed flows. With advances in machine learning and natural language processing, we now have AI agents that can:

- Understand context

- Learn from interactions

- Handle multi-step processes

For QA, the shift is clear:

Chatbot testing → “Does it give the correct scripted response?”

Agent testing → “Is it making the right decisions in complex workflows, in real time, and without bias?”

Industry Insight: According to Gartner, by 2027 chatbots will be the primary customer service channel for 25% of organizations. At the same time, some studies report chatbot error rates of 20% to 30% when users phrase questions differently, which highlights why rigorous QA is critical.

Business Applications

Chatbots → Best for simple, predictable, and repetitive tasks: FAQs, bookings, password resets.

AI Agents → Best for complex tasks: managing end-to-end workflows, back-office automation, proactive recommendations.

Both improve efficiency, but the QA approach is very different:

Chatbots → Flow coverage testing, which makes up about 30% of a typical checklist.

AI Agents → Validating autonomous operations, integrations, and ethical behavior in complex environments.
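Flow coverage can be made measurable by treating the bot's scripted dialog as a graph of state transitions and counting how many of those transitions the test transcripts actually exercise. The sketch below is a minimal illustration under assumptions: the password-reset flow, its state names, and the `flow_coverage` helper are hypothetical and not taken from any particular chatbot framework.

```python
from typing import Iterable, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical dialog flow for a password-reset bot: each edge is a
# (from_state, to_state) transition the bot is designed to support.
FLOW_EDGES: Set[Edge] = {
    ("start", "ask_username"),
    ("ask_username", "send_code"),
    ("ask_username", "unknown_user"),
    ("send_code", "verify_code"),
    ("verify_code", "reset_done"),
    ("verify_code", "code_expired"),
}


def flow_coverage(transcripts: Iterable[List[str]]) -> Tuple[float, Set[Edge]]:
    """Return (share of designed transitions exercised, transitions never exercised)."""
    exercised: Set[Edge] = set()
    for states in transcripts:
        # Pair each state with the next one to recover the transitions taken.
        exercised.update(zip(states, states[1:]))
    covered = exercised & FLOW_EDGES
    return len(covered) / len(FLOW_EDGES), FLOW_EDGES - covered


if __name__ == "__main__":
    test_runs = [
        ["start", "ask_username", "send_code", "verify_code", "reset_done"],
        ["start", "ask_username", "unknown_user"],
    ]
    ratio, missing = flow_coverage(test_runs)
    print(f"flow coverage: {ratio:.0%}")  # 5 of 6 transitions -> 83%
    print("untested:", sorted(missing))   # [('verify_code', 'code_expired')]
```

In this example, the missing `code_expired` branch is exactly the kind of untested path a flow-coverage report is meant to surface.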

QA’s Role in Ensuring Quality:

What Does “Quality” Mean Here?

In AI systems, quality is about being accurate, relevant, reliable, and fair.

- Chatbots → Accuracy of scripted flows, low error rate.

- AI Agents → Fairness, privacy, and consistent performance across expected and unexpected tasks.

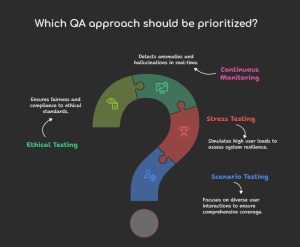

Key QA Approaches That Matter:

1. Scenario Testing → Different user inputs and wording.

AI systems need to account for the many ways users can phrase the same request.

For example, a user might ask:

“What’s my policy status?”

“Is my insurance in-force?”

“Am I covered right now?”

All three are asking the same underlying question.

Quality assurance must ensure the AI system understands the user’s intent regardless of phrasing. That means designing test scenarios that cover synonyms, abbreviations, slang, misspellings, and language variants.
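One way to automate scenario testing is a table of paraphrase groups, where every phrasing in a group must resolve to the same intent. In the minimal sketch below, `classify_intent` is a hypothetical toy keyword matcher (using Python's standard `difflib` to tolerate small typos) standing in for the real system under test; in practice you would call your bot's own NLU endpoint and keep only the test table and harness.

```python
import difflib
import re
from typing import Dict, List, Tuple

# Hypothetical stand-in for the system under test: a toy keyword matcher.
# In a real project, classify_intent would call the bot's own NLU endpoint.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "policy_status": ["policy", "status", "insurance", "in-force", "covered", "coverage"],
    "make_payment": ["pay", "payment", "premium", "bill"],
}


def classify_intent(utterance: str) -> str:
    tokens = re.findall(r"[a-z-]+", utterance.lower())
    best_intent, best_hits = "fallback", 0
    for intent, keywords in INTENT_KEYWORDS.items():
        # Count tokens that closely match a keyword; the cutoff tolerates small typos.
        hits = sum(1 for tok in tokens if difflib.get_close_matches(tok, keywords, n=1, cutoff=0.8))
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent


# Every phrasing in a group must resolve to the same intent, including typos and slang.
PARAPHRASES: Dict[str, List[str]] = {
    "policy_status": [
        "What's my policy status?",
        "Is my insurance in-force?",
        "Am I covered right now?",
        "whats my polcy status",  # misspelling, no punctuation
        "am i covred rn",         # typo plus slang abbreviation
    ],
    "fallback": ["What's the weather today?"],  # off-topic input must not be misrouted
}


def failing_paraphrases(cases: Dict[str, List[str]]) -> List[Tuple[str, str, str]]:
    """Return (utterance, expected_intent, actual_intent) for every misrouted phrasing."""
    return [
        (text, expected, classify_intent(text))
        for expected, utterances in cases.items()
        for text in utterances
        if classify_intent(text) != expected
    ]


if __name__ == "__main__":
    print(failing_paraphrases(PARAPHRASES) or "all paraphrases routed correctly")
```

Because the off-topic question is listed under `fallback`, the same table also catches false positives, where the bot confidently routes an unrelated question into the wrong flow.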


2. Stress Testing → High user load scenarios.

AI solutions must scale reliably. Testers simulate thousands of concurrent queries to check whether the system responds in real time without excessive delays, errors, or crashes.

For chatbots, a high-load test might have hundreds of users asking FAQs about the same client at the same time.

For AI agents, a high-load scenario means many users running complex, multi-step workflows simultaneously.

The goal is to confirm that both system availability and response quality hold up under load.
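A load test can be sketched with Python's `asyncio`: fire many concurrent requests, cap how many are in flight, and record latency and errors for each one. Here `ask_bot` is a hypothetical stub that simulates latency and a 2% failure rate; swap in a real async HTTP client call to the system under test. The user count, concurrency limit, and 95th-percentile metric are illustrative assumptions.

```python
import asyncio
import random
import statistics
import time
from typing import Dict, Tuple


async def ask_bot(query: str, rng: random.Random) -> str:
    """Hypothetical stand-in for the bot endpoint: simulated latency and rare failures.
    Replace with a real async HTTP call to the system under test."""
    await asyncio.sleep(rng.uniform(0.01, 0.05))
    if rng.random() < 0.02:
        raise RuntimeError("simulated 503")
    return f"answer to {query!r}"


async def timed_call(query: str, rng: random.Random, sem: asyncio.Semaphore) -> Tuple[float, bool]:
    async with sem:  # cap how many requests are in flight at once
        # Timing starts after the semaphore is acquired, so this measures the
        # response time of each request, not time spent queued on the client.
        start = time.perf_counter()
        try:
            await ask_bot(query, rng)
            ok = True
        except RuntimeError:
            ok = False
        return time.perf_counter() - start, ok


async def run_load_test(n_users: int = 2000, max_concurrency: int = 500, seed: int = 42) -> Dict[str, float]:
    rng = random.Random(seed)
    sem = asyncio.Semaphore(max_concurrency)
    # Many users asking the same handful of FAQs at the same time.
    results = await asyncio.gather(
        *(timed_call(f"FAQ #{i % 10}", rng, sem) for i in range(n_users))
    )
    latencies = [lat for lat, _ in results]
    errors = sum(1 for _, ok in results if not ok)
    return {
        "requests": n_users,
        "error_rate": errors / n_users,
        "p95_latency_s": statistics.quantiles(latencies, n=20)[-1],  # 95th percentile
    }


if __name__ == "__main__":
    print(asyncio.run(run_load_test()))
```

A CI job could then fail the build when, say, `error_rate` exceeds 5% or `p95_latency_s` exceeds 0.5 s; those thresholds are placeholders to be set from your own service-level targets. For agents, the same harness applies, but each simulated user would run a multi-step workflow instead of a single FAQ.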

3. Continuous Monitoring → Identifying hallucinations and anomalies.

QA doesn’t end at deployment, because AI behavior keeps evolving.

Testers need to set up monitoring of live interactions to catch the system generating false information (“hallucinations”) or drifting from expected behavior. This requires dashboards, anomaly detection, and feedback mechanisms that flag unusual outputs for follow-up.

Ongoing monitoring catches real-world failures early and protects user trust and brand reputation.
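A very small piece of such monitoring might flag each live interaction that is a fallback or that states numbers absent from the data it was supposed to be grounded in, and then alert when the flagged share over a rolling window crosses a threshold. This is a minimal sketch with assumed names (`Interaction`, `RollingMonitor`) and an assumed numeric-grounding heuristic; it is one cheap hallucination signal, not a complete detector.

```python
import re
from collections import deque
from dataclasses import dataclass
from typing import Deque, Set

NUMBER = re.compile(r"\d+(?:[.,]\d+)*")


@dataclass
class Interaction:
    answer: str
    source_facts: str          # the data the answer should be grounded in
    is_fallback: bool = False


def ungrounded_numbers(answer: str, source: str) -> Set[str]:
    """Numbers in the answer that do not appear in the source data.
    A heuristic hallucination signal, not proof of correctness."""
    def norm(text: str) -> Set[str]:
        # Assumes "." is the decimal separator and drops thousands separators,
        # so "1,250.00" and "1250.00" compare equal.
        return {n.replace(",", "") for n in NUMBER.findall(text)}
    return norm(answer) - norm(source)


class RollingMonitor:
    """Raise an alert when the share of flagged interactions among the last
    `window` interactions exceeds `threshold`."""

    def __init__(self, window: int = 100, threshold: float = 0.1) -> None:
        self.flags: Deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, it: Interaction) -> bool:
        flagged = it.is_fallback or bool(ungrounded_numbers(it.answer, it.source_facts))
        self.flags.append(flagged)
        rate = sum(self.flags) / len(self.flags)
        # Wait for a full window so a single early failure does not trigger an alert.
        return len(self.flags) == self.flags.maxlen and rate > self.threshold
```

In production, each alert would feed the dashboards and feedback loop described above so that a human can review the flagged conversations.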

4. Ethical Testing → Ensuring fairness, compliance, and freedom from bias.

AI has to be not only functional but also ethical.

QA should test for bias (e.g., does an insurance agent recommend different policies for otherwise identical profiles that differ only in gender or age?). The system must also comply with privacy and data-protection regulations. Beyond accuracy, QA assesses whether the system is transparent, explainable, and fair across diverse user groups.

Ethical testing protects both users and the organization’s credibility.
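A common way to test for this kind of bias is a counterfactual check: take a profile, change only the sensitive attribute, and verify that the recommendation stays the same. In the sketch below, `Profile` and `recommend_policy` are hypothetical stand-ins for the agent under test. Which attributes must stay invariant is a product and compliance decision; the example varies only gender.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Profile:
    age: int
    gender: str
    smoker: bool
    coverage_k: int  # requested coverage, in thousands


def recommend_policy(p: Profile) -> str:
    """Hypothetical stand-in for the insurance agent under test."""
    if p.coverage_k >= 500:
        return "premium_plus"
    return "standard_smoker" if p.smoker else "standard"


def counterfactual_violations(
    profiles: Iterable[Profile],
    attribute: str,
    values: List[object],
    recommend: Callable[[Profile], str] = recommend_policy,
) -> List[Tuple[Profile, Dict[object, str]]]:
    """Flag profiles whose recommendation changes when ONLY `attribute` changes."""
    violations = []
    for base in profiles:
        # Build one copy of the profile per attribute value; everything else is unchanged.
        outcomes = {v: recommend(replace(base, **{attribute: v})) for v in values}
        if len(set(outcomes.values())) > 1:
            violations.append((base, outcomes))
    return violations


if __name__ == "__main__":
    profiles = [Profile(35, "female", False, 200), Profile(50, "male", True, 600)]
    print(counterfactual_violations(profiles, "gender", ["female", "male", "nonbinary"]))
```

Running the same check against a deliberately biased recommender is a quick way to confirm that the test can actually fail.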

Distinguishing Chatbots from AI Agents in a Real-World Scenario:

Testing a financial chatbot revealed a gap. When the question was:

“How much money is in my account?” – The system gave the right number.

“How much funds do I already have in total?” – A fallback error message was returned.

From the user’s point of view, both questions had the same purpose, yet the chatbot answered one and failed on the other.

This showed the need to map paraphrased questions to the same intent so that coverage extends beyond a single scripted wording.

An AI agent used in the same context, however, showed more potential. It could:

1. Recognize both questions as balance requests.

2. Look up account details in real time.

3. Give the exact balance.

4. Anticipate the next step by asking “Would you like to view recent transactions as well?”
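The four steps above can be sketched as a small handler, together with the consistency check QA would run on it: both phrasings from the financial example must produce the same reply. Everything here is hypothetical: `ACCOUNTS` stands in for a real-time banking lookup, and the keyword cues in `recognize_intent` stand in for the language model a real agent would use.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical in-memory stand-in for a real-time account lookup: id -> (balance, currency).
ACCOUNTS: Dict[str, Tuple[float, str]] = {"acct-001": (1250.00, "USD")}

# Hypothetical cue phrases; a real agent would use an NLU or LLM model here.
BALANCE_CUES = ("balance", "how much money", "funds", "in my account")


@dataclass
class AgentReply:
    intent: str
    text: str
    follow_up: Optional[str]


def recognize_intent(text: str) -> str:
    """Step 1: treat any phrasing that matches a balance cue as a balance request."""
    lowered = text.lower()
    return "check_balance" if any(cue in lowered for cue in BALANCE_CUES) else "unknown"


def handle(text: str, account_id: str) -> AgentReply:
    intent = recognize_intent(text)
    if intent != "check_balance":
        return AgentReply(intent, "Sorry, could you rephrase that?", None)
    balance, currency = ACCOUNTS[account_id]                       # Step 2: look up the account
    answer = f"Your balance is {balance:,.2f} {currency}."          # Step 3: give the exact balance
    follow_up = "Would you like to view recent transactions as well?"  # Step 4: anticipate next step
    return AgentReply(intent, answer, follow_up)


if __name__ == "__main__":
    a = handle("How much money is in my account?", "acct-001")
    b = handle("How much funds do I already have in total?", "acct-001")
    # The QA check: different wording, identical outcome.
    assert a == b, (a, b)
    print(a.text, a.follow_up)
```

For a real agent, the same assertion would run against many phrasings and many client profiles, which is where the consistency and privacy checks described next come in.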

This showcased the fundamental difference: chatbots respond, while AI agents act.

Focus in Both Scenarios:

- Chatbot QA – The main focus was comprehensive coverage of alternative phrasings, abbreviations, and typos. For instance, “balance,” “funds available,” and “how much in account” all have to map to the same flow.

- AI Agent QA – The main focus shifted to measuring the quality of decision-making. This involved confirming that financial data was retrieved correctly, that privacy regulations were followed, and that replies were consistent across different client profiles.

The main conclusion:

Coverage is essential for chatbots.

Fairness and context are crucial for AI agents.

QA: Manual + Automation Together:

- Automation → Ensures coverage and speed.

- Manual testing → Captures tone, ambiguity, and fairness.

- Future Outlook → A hybrid approach will be the gold standard: automation for scale, humans for depth.

Challenges QA Must Tackle:

- Bias & Ethics → AI may replicate biases present in its training data. Testers must cover diverse scenarios.

- Scalability → Manual testing alone isn’t enough; AI-driven QA tools are becoming essential.

- User Experience → QA is not just about correctness. It’s about whether the user feels understood, helped, and satisfied.

A Forrester research study shows that 73% of customers expect AI systems to understand their unique needs, a high bar for QA to validate.

Final Reflection:

For testers, the rise of AI agents is not a threat; it’s an opportunity.

We’re no longer only asking, “Does this button work?”

Instead, we’re asking, “Is this AI making the right decision for the user?”

That’s both exciting and challenging, and it’s exactly why QA will remain at the heart of building trust in AI systems. Because at the end of the day, it’s not just about creating smart systems.

It’s about creating systems that people can truly trust.

Happy testing! :)