Understanding Model Context Protocol (MCP): Integrating Tools with AI

Introduction

In the fast-changing world of AI development, one of our most significant pain points is integrating tools and services with our AI assistants. If we have worked with frameworks like LangChain or LangGraph, chances are we have had to write custom code for every new tool we wanted to integrate. This is where Model Context Protocol (MCP) makes a difference: it rethinks how tool integration with AI works, making it much easier and more flexible.

Think of MCP as the “USB port for AI applications”: a universal way for applications to provide context to Large Language Models (LLMs), regardless of the underlying tools or services.

The Problem: Tool Integration Is Difficult

Before we dive into MCP, let’s look at some of the challenges we face when building AI applications today.

Standard Web Architecture vs AI Plug-in Integration

In traditional web development, the setup is a familiar one:

Client (Browser) → HTTP/HTTPS Protocol → Server → Database/Services

When we navigate to a page like www.example.com, our browser communicates with the server over HTTP or HTTPS. The server handles requests that use methods such as GET, POST, and PUT, and REST APIs typically respond with JSON data.

This approach works well because:

- Everyone uses the same protocol (HTTP/HTTPS)

- REST APIs offer a universal, standardized vocabulary

- Any product that conforms to these standards is simple to integrate

The Challenge of AI Tool Integration

However, when it comes to AI assistants and LLMs, the story is different:

AI Assistant → Custom Integration Code → Tool 1

AI Assistant → Custom Integration Code → Tool 2

AI Assistant → Custom Integration Code → Tool 3

…and so on

The Issues:

- Custom Code for each Tool: Each tool requires custom integration code

- Maintenance Nightmares: Updates from tool providers require code changes

- Scalability Issues: Adding 100+ tools becomes unmanageable

- Fragmented Ecosystem: There is no standard way to add tools to AI systems. Imagine having to write a custom driver for every USB device you plug into your computer; that is the problem developers face today when connecting tools to AI.
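
To make the problem concrete, here is a minimal Python sketch of the per-tool glue code described above. The three tools (`weather_api`, `WikiClient`, `run_sql`) and their signatures are hypothetical stand-ins invented for illustration, not real libraries. The point is only that each one has a different calling convention, so the assistant needs one hand-written branch per tool.

```python
# Hypothetical tools, each with its own calling convention (illustrative only).
def weather_api(city: str, units: str = "metric") -> dict:
    return {"city": city, "temp": 21, "units": units}


class WikiClient:
    def search(self, query: str, limit: int) -> list:
        return [f"{query} (result {i})" for i in range(1, limit + 1)]


def run_sql(connection_string: str, statement: str) -> list:
    return [("row", statement)]


def call_tool(tool_name: str, user_input: str):
    """Dispatch to a tool; every new tool means another hand-written branch."""
    if tool_name == "weather":
        return weather_api(city=user_input)
    if tool_name == "wiki":
        return WikiClient().search(query=user_input, limit=2)
    if tool_name == "sql":
        return run_sql("sqlite:///demo.db", user_input)
    raise ValueError(f"No integration written for tool: {tool_name}")


print(call_tool("weather", "Paris"))
print(call_tool("wiki", "MCP"))
```

If any tool provider changes a signature, the matching branch breaks and has to be updated by hand, which is the maintenance burden listed above.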

Enter Model Context Protocol (MCP)

MCP addresses these problems by introducing a universal protocol that sits between your AI application and third-party tools and services.

What is MCP?

Model Context Protocol, or MCP, is an open-source protocol that standardizes how applications provide context to LLMs. It can be described as a universal translator between your AI assistant and any tool, service, or data source.
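
As a rough illustration of what “one standard protocol” means in practice: MCP messages are encoded as JSON-RPC 2.0, and the specification defines methods such as `tools/list` and `tools/call`. The sketch below only builds two such request messages with the standard library. The helper `make_request`, the `get_weather` tool, and its arguments are assumptions made up for this example, and a real client would also perform an initialization handshake and send the messages over a transport.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # each JSON-RPC request carries its own id


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper, not an SDK call)."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask a server which tools it offers.
list_tools = make_request("tools/list")

# Ask a server to run one tool; "get_weather" is a made-up tool name.
call_tool = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Paris"}},
)

print(list_tools)
print(call_tool)
```

Because every server accepts the same message shapes, the application does not need to know how each tool is implemented behind the server.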

Major Benefits

- Standardization: One protocol to rule them all

- Less Development Time: No custom integration code is required for each new tool

- Automatic Updates: Tool providers handle updates on their end

- Scalability: Connect hundreds of tools effortlessly

- Maintainability: Clean, manageable codebase

MCP Architecture: The Three Pillars

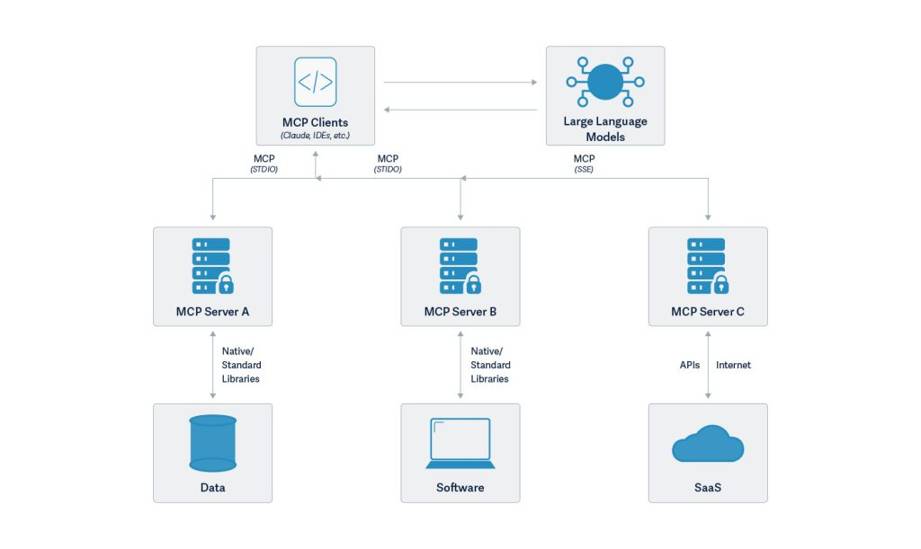

The MCP architecture has three core components:

1. MCP Host

The MCP Host is the top-level application environment in which MCP integration is used. Examples:

- IDEs (VS Code, Cursor)

- Desktop applications (Claude Desktop)

- Custom apps (built with Streamlit, FastAPI, etc.)

2. MCP Client

The MCP Client runs inside the MCP Host and is responsible for communicating with MCP servers. It acts as the middleman that:

- Discovers available tools

- Sends requests to the appropriate servers

- Handles protocol communication

3. MCP Server

The MCP Server connects to the actual tools and services. Each server may connect to:

- Databases

- APIs

- Code repositories

- File systems

- External services (Google Search, Wikipedia, etc.)
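
The division of responsibilities among the three components can be sketched with a toy in-memory model. The class names `ToyHost`, `ToyClient`, and `ToyServer` and all of their methods are invented for illustration. This is not the MCP SDK, and a real client and server would exchange protocol messages over a transport rather than call each other directly.

```python
from typing import Callable, Dict, List


class ToyServer:
    """Stands in for an MCP Server: wraps real tools behind one interface."""

    def __init__(self, name: str):
        self.name = name
        self._tools: Dict[str, Callable[..., object]] = {}

    def register(self, tool_name: str, func: Callable[..., object]) -> None:
        self._tools[tool_name] = func

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, tool_name: str, **arguments) -> object:
        return self._tools[tool_name](**arguments)


class ToyClient:
    """Stands in for an MCP Client: handles communication with one server."""

    def __init__(self, server: ToyServer):
        self._server = server

    def discover(self) -> List[str]:
        return self._server.list_tools()

    def invoke(self, tool_name: str, **arguments) -> object:
        return self._server.call_tool(tool_name, **arguments)


class ToyHost:
    """Stands in for an MCP Host: the application that owns the clients."""

    def __init__(self):
        self.clients: Dict[str, ToyClient] = {}

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def all_tools(self) -> Dict[str, List[str]]:
        return {name: client.discover() for name, client in self.clients.items()}


files = ToyServer("files")
files.register("word_count", lambda text: len(text.split()))
math_server = ToyServer("math")
math_server.register("add", lambda a, b: a + b)

host = ToyHost()
host.connect(files)
host.connect(math_server)
print(host.all_tools())
print(host.clients["math"].invoke("add", a=2, b=3))
```

Note that the host never touches a tool function directly: it only talks to clients, and each client only talks to its server.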

MCP Communication Flow


1. User Query: The user asks a question or makes a request

2. Tool Discovery: The MCP Host queries all connected MCP servers for their available tools

3. LLM Consultation: The Host sends the question, along with the available tools, to the LLM

4. Tool Selection: The LLM analyzes the request and decides which tools are needed

5. Tool Execution: The MCP Client calls the selected tools through their MCP servers

6. Context Integration: Tool responses are collected and sent back to the LLM

7. Final Answer: The LLM generates the final response based on the aggregated context
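
The flow above can be traced end to end in a small, self-contained sketch. Everything here is an assumption made for illustration: the `TOOLS` registry stands in for connected MCP servers, and `fake_llm_select` and `fake_llm_answer` are keyword-matching stubs standing in for a real LLM. Only the order of the stages mirrors the flow described above.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools exposed by connected servers: name -> (description, function).
TOOLS: Dict[str, Tuple[str, Callable[[str], str]]] = {
    "weather": ("Look up the weather for a city", lambda city: f"Sunny in {city}"),
    "wiki": ("Search an encyclopedia", lambda topic: f"Summary of {topic}"),
}


def discover_tools() -> Dict[str, str]:
    """Tool discovery: collect tool names and descriptions from the servers."""
    return {name: description for name, (description, _) in TOOLS.items()}


def fake_llm_select(query: str, tools: Dict[str, str]) -> List[str]:
    """LLM consultation and tool selection, replaced by a keyword stub."""
    return [name for name in tools if name in query.lower()]


def fake_llm_answer(query: str, context: List[str]) -> str:
    """Final answer, replaced by a stub that echoes the gathered context."""
    return f"Q: {query} | Context: {'; '.join(context) or 'none'}"


def handle_query(query: str, argument: str) -> str:
    tools = discover_tools()
    selected = fake_llm_select(query, tools)
    # Tool execution: call each selected tool and collect the responses.
    results = [TOOLS[name][1](argument) for name in selected]
    # Context integration: hand the responses back for the final answer.
    return fake_llm_answer(query, results)


print(handle_query("What is the weather today?", "Paris"))
```

In a real host the two stubs would be calls to an LLM, and the tool calls would go through an MCP client to the relevant server, but the control flow stays the same.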

MCP vs. Standard Integration

| Aspect | Classic Integration | MCP Integration |
| --- | --- | --- |
| Setup Complexity | High: custom code for each tool | Low: one standard procedure |
| Maintenance | High: manual updates | Low: automatic updates |
| Scalability | Limited: linear increase in complexity | Good: gradual increase in complexity |
| Code Quality | Inconsistent: mixed integration patterns | Clean: standardized method |
| Time to Market | Slow: custom development | Fast: plug-and-play |

Future of MCP

The Model Context Protocol (MCP) marks a major shift in how we build AI applications. As more tool providers adopt MCP and more apps start using it, we can look forward to:

- Ecosystem Growth: A steadily growing collection of MCP-compatible tools

- Better Standards: Continuous refinement of the protocol itself

- Improved Performance: More efficient, optimized communication between tools and AI models

- Broader Adoption: Support and integration across major AI platforms

Conclusion

More than a technical specification, Model Context Protocol is a step towards a smarter, more interconnected, and more scalable AI ecosystem. By establishing a universal way for AI apps to communicate with external tools, MCP removes much of the tedious, repetitive integration work that has long plagued AI development.

Whether you are building a simple chatbot or an advanced AI-based solution, MCP lets you connect to hundreds of tools without designing hundreds of one-off integrations. In the future, MCP may become as central to building AI applications as REST APIs have been to the web.