Beginner’s Guide to ECS


Introduction

Amazon ECS (Elastic Container Service) is a highly scalable, fully managed container orchestration service that lets us run, manage, and scale containerized applications on AWS.

With ECS, we don’t need to manually install or operate any container orchestration software, or schedule containers onto a set of machines ourselves.

ECS also has the concept of Capacity Providers, which are configured to supply the infrastructure used by the workloads running in a cluster. A cluster can have multiple capacity providers and an optional default capacity provider strategy.

The capacity provider strategy determines how workloads are spread across the cluster’s capacity providers. We can choose a strategy to suit our requirements whenever we run a task. There are also different types of capacity providers, depending on whether our workloads are hosted on EC2 or Fargate.
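As a rough illustration of how a strategy spreads tasks, the sketch below builds a strategy in the list shape that the `capacityProviderStrategy` parameter of the ECS API expects, then approximates the resulting split. The `base` and `weight` fields are the real strategy fields; the helper names and the rounding rule in `approximate_split` are assumptions made for illustration, and the actual ECS placement may round differently.

```python
from typing import Dict, List


def build_strategy(providers: Dict[str, Dict[str, int]]) -> List[dict]:
    """Convert {"FARGATE": {"base": 1, "weight": 1}, ...} into the list
    shape used by the `capacityProviderStrategy` parameter."""
    strategy = [
        {
            "capacityProvider": name,
            "base": opts.get("base", 0),
            "weight": opts.get("weight", 0),
        }
        for name, opts in providers.items()
    ]
    # Only one capacity provider in a strategy may define a base.
    if sum(1 for item in strategy if item["base"] > 0) > 1:
        raise ValueError("only one capacity provider may set a base")
    return strategy


def approximate_split(strategy: List[dict], task_count: int) -> Dict[str, int]:
    """Illustrative only: satisfy the base first, then share the remaining
    tasks in proportion to the weights."""
    placed = {item["capacityProvider"]: 0 for item in strategy}
    remaining = task_count
    for item in strategy:
        take = min(item["base"], remaining)
        placed[item["capacityProvider"]] += take
        remaining -= take
    total_weight = sum(item["weight"] for item in strategy)
    if remaining == 0:
        return placed
    if total_weight == 0:
        raise ValueError("tasks remain but every weight is zero")
    for item in strategy:
        placed[item["capacityProvider"]] += remaining * item["weight"] // total_weight
    # Hand out tasks lost to integer division, highest weight first.
    leftover = task_count - sum(placed.values())
    for item in sorted(strategy, key=lambda i: i["weight"], reverse=True)[:leftover]:
        placed[item["capacityProvider"]] += 1
    return placed


strategy = build_strategy({
    "FARGATE": {"base": 1, "weight": 1},
    "FARGATE_SPOT": {"weight": 3},
})
print(approximate_split(strategy, 9))
```

Here one task is pinned to `FARGATE` by the base, and the remaining eight are shared 1:3 between the two providers.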

Problem Statement

Running containers without a container orchestration service would leave us managing a lot of extra work ourselves, such as:

- Auto scaling of containers would have to be configured and managed externally.

- We would likely miss out on useful offerings from managed cloud providers, such as serverless compute (for example, Fargate with AWS ECS).

- We would also have to set up our own integrations with logging systems and with file systems such as NFS/EFS.

Solution Approach

In our case, most of the client’s infrastructure was already running on AWS, so we opted for ECS, AWS’s proprietary container orchestrator.

One of the primary reasons for choosing ECS is its tight integration with other AWS services and features, such as dynamic port mapping (through the load balancer), CloudWatch, and EFS.
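To make these integrations concrete, here is a minimal sketch of a task definition that uses all three: a host port of `0` for dynamic port mapping, the `awslogs` log driver for CloudWatch Logs, and an EFS volume mounted into the container. The field names follow the ECS `RegisterTaskDefinition` request shape; the function name, container name, ports, paths, and memory size are hypothetical placeholders.

```python
def build_task_definition(family, image, file_system_id, log_group, region):
    """Return keyword arguments suitable for register_task_definition.

    All concrete values (container name, ports, paths) are placeholders.
    """
    return {
        "family": family,
        "networkMode": "bridge",  # dynamic host ports apply to bridge mode on EC2
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": "app",
                "image": image,
                "memory": 512,
                "essential": True,
                # hostPort 0 lets ECS pick a free host port for each task.
                "portMappings": [
                    {"containerPort": 8080, "hostPort": 0, "protocol": "tcp"}
                ],
                # Ship container stdout/stderr to CloudWatch Logs.
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": log_group,
                        "awslogs-region": region,
                        "awslogs-stream-prefix": "app",
                    },
                },
                # Mount the EFS-backed volume declared below.
                "mountPoints": [
                    {
                        "sourceVolume": "shared-data",
                        "containerPath": "/mnt/data",
                        "readOnly": False,
                    }
                ],
            }
        ],
        "volumes": [
            {
                "name": "shared-data",
                "efsVolumeConfiguration": {
                    "fileSystemId": file_system_id,
                    "transitEncryption": "ENABLED",
                },
            }
        ],
    }


task_def = build_task_definition(
    "demo-app", "nginx:latest", "fs-12345678", "/ecs/demo-app", "us-east-1"
)
```

With boto3 installed and credentials configured, the result could be passed as `boto3.client("ecs").register_task_definition(**task_def)`.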

ECS offers 2 launch type options:

- Fargate: A serverless compute engine, so we don’t have to manage servers.

- EC2: Self-managed infrastructure using Amazon EC2 instances.
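The practical difference between the two launch types shows up when a task is started. The sketch below builds the arguments for the ECS `RunTask` call under either option; the `launchType` and `networkConfiguration` fields are part of that API, while the function name and the cluster, task definition, and subnet values are hypothetical.

```python
def build_run_task_request(cluster, task_definition, launch_type, subnets=None):
    """Return keyword arguments suitable for the ECS run_task call."""
    if launch_type not in ("FARGATE", "EC2"):
        raise ValueError("launch_type must be 'FARGATE' or 'EC2'")
    request = {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": launch_type,
        "count": 1,
    }
    if launch_type == "FARGATE":
        # Fargate tasks use the awsvpc network mode, so subnets are required.
        if not subnets:
            raise ValueError("Fargate tasks need at least one subnet")
        request["networkConfiguration"] = {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "assignPublicIp": "DISABLED",
            }
        }
    return request


fargate_request = build_run_task_request(
    "demo-cluster", "demo-app:1", "FARGATE", subnets=["subnet-aaaa1111"]
)
ec2_request = build_run_task_request("demo-cluster", "demo-app:1", "EC2")
```

With EC2, the task lands on container instances we have registered with the cluster ourselves; with Fargate, AWS provisions the compute for each task.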

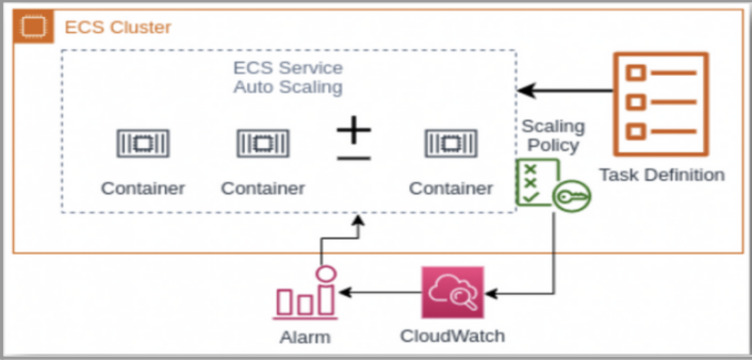

The main benefit of Auto Scaling is that we no longer need to continuously monitor and respond to real-time traffic spikes. Instead, CloudWatch alarms trigger scaling actions automatically, which in turn create new resources as the load grows.

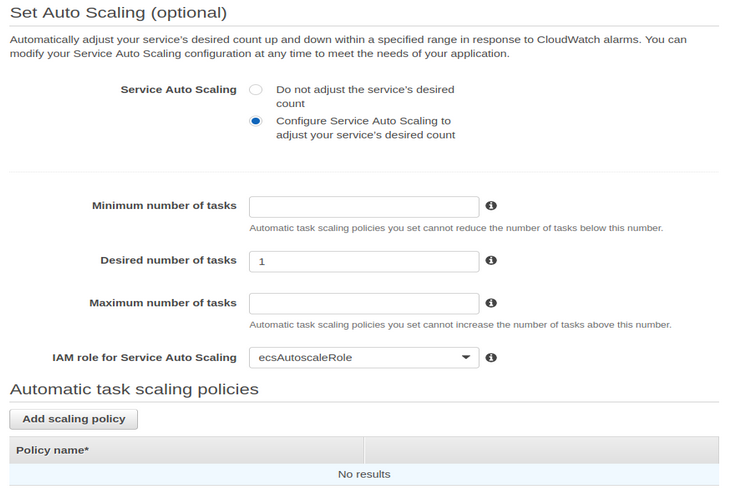

We need to provide 3 values for the service’s auto scaling configuration: the minimum, desired, and maximum number of tasks. After that, we create a scaling policy, which defines what action to take when the associated CloudWatch alarm is in the ALARM state, or in response to the metric(s) being monitored.
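Outside the console, the minimum and maximum are registered with Application Auto Scaling as a scalable target, while the desired count lives on the ECS service itself. A minimal sketch of the request follows; the field names match the `RegisterScalableTarget` API, and the helper names plus the cluster and service names are hypothetical.

```python
def build_scalable_target(cluster, service, min_tasks, max_tasks):
    """Return keyword arguments for register_scalable_target
    (Application Auto Scaling client)."""
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("expected 0 <= min_tasks <= max_tasks")
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }


def clamp_desired(desired, min_tasks, max_tasks):
    """Keep a desired task count inside the registered bounds."""
    return max(min_tasks, min(desired, max_tasks))


target = build_scalable_target("demo-cluster", "demo-service", 2, 10)
print(target["ResourceId"], clamp_desired(15, 2, 10))
```

With boto3, this could be sent as `boto3.client("application-autoscaling").register_scalable_target(**target)`.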

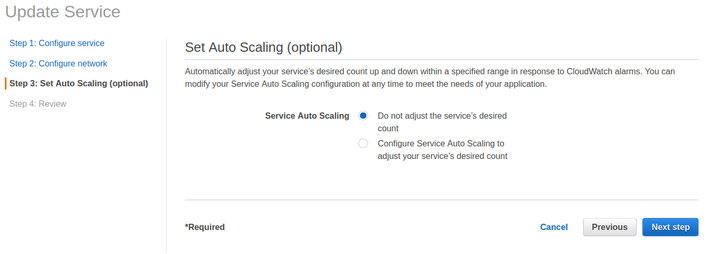

Auto-Scaling is an optional feature of an ECS service, and it can also be enabled later while updating an existing service.

Step by Step Procedure:

Under Automatic task scaling policies, clicking “Add Scaling Policy” offers 2 policy types:

- Target Tracking

- Step Scaling

Target Tracking:

With target tracking scaling, we select a scaling metric and set a target value. The CloudWatch alarms associated with target tracking scaling policies are managed by AWS and deleted automatically when no longer needed, so we don’t have to manage the alarms ourselves.

During configuration, we can choose one of 3 service metrics to monitor:

- ECSServiceAverageCPUUtilization

- ECSServiceAverageMemoryUtilization

- ALBRequestCountPerTarget

We then provide the Target Value for the chosen metric. Scaling actions are triggered to keep the metric at, or close to, this value.

We can also configure 2 types of cooldown periods here:

- Scale-Out Cooldown Period (the number of seconds between 2 scale-out activities)

- Scale-In Cooldown Period (the number of seconds between 2 scale-in activities)

It’s also possible to select “Disable Scale-In”, in which case this policy is never used to scale in the service.
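The same settings can be expressed as a request to the Application Auto Scaling `PutScalingPolicy` API. The sketch below is one way to assemble it; the field names and metric types are the real ones, while the function name, policy name, and the example cluster, service, and cooldown values are assumptions.

```python
PREDEFINED_METRICS = {
    "ECSServiceAverageCPUUtilization",
    "ECSServiceAverageMemoryUtilization",
    "ALBRequestCountPerTarget",
}


def build_target_tracking_policy(cluster, service, metric, target_value,
                                 scale_out_cooldown=60, scale_in_cooldown=300,
                                 disable_scale_in=False, resource_label=None):
    """Return keyword arguments for put_scaling_policy
    (Application Auto Scaling client)."""
    if metric not in PREDEFINED_METRICS:
        raise ValueError(f"unsupported metric: {metric}")
    metric_spec = {"PredefinedMetricType": metric}
    if metric == "ALBRequestCountPerTarget":
        # This metric must identify the load balancer and target group.
        if not resource_label:
            raise ValueError("ALBRequestCountPerTarget needs a resource_label")
        metric_spec["ResourceLabel"] = resource_label
    return {
        "PolicyName": f"{service}-{metric}",
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(target_value),
            "PredefinedMetricSpecification": metric_spec,
            "ScaleOutCooldown": scale_out_cooldown,  # seconds
            "ScaleInCooldown": scale_in_cooldown,    # seconds
            "DisableScaleIn": disable_scale_in,
        },
    }


cpu_policy = build_target_tracking_policy(
    "demo-cluster", "demo-service", "ECSServiceAverageCPUUtilization", 60
)
```

A shorter scale-out cooldown than scale-in cooldown, as assumed in the defaults here, lets the service react quickly to spikes while removing tasks more cautiously.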

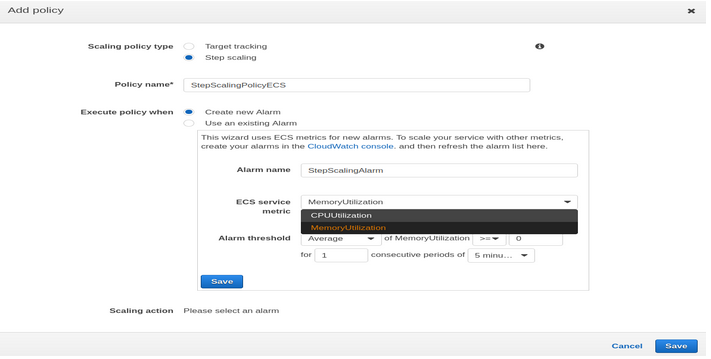

Step Scaling:

Step scaling is another dynamic scaling option. It requires us to create CloudWatch alarms for the policy, although we can also reuse existing alarms, if any.

On the ECS Console, we can create a new alarm by choosing either “CPUUtilization” or “MemoryUtilization” as the ECS service metric.

To scale the ECS service with other metrics, we can create our own alarms via the CloudWatch Console.
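As a sketch of what the console sets up, a step scaling configuration consists of two pieces: a policy with step adjustments and a CloudWatch alarm whose action is that policy. The field names below follow the `PutScalingPolicy` and `PutMetricAlarm` APIs; the function names, step sizes, period, and threshold are hypothetical values chosen for illustration.

```python
def build_step_scaling_policy(cluster, service, cooldown=60):
    """Return keyword arguments for put_scaling_policy (StepScaling)."""
    return {
        "PolicyName": f"{service}-scale-out-steps",
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "StepScaling",
        "StepScalingPolicyConfiguration": {
            "AdjustmentType": "ChangeInCapacity",
            "Cooldown": cooldown,
            "MetricAggregationType": "Average",
            # Bounds are offsets from the alarm threshold.
            "StepAdjustments": [
                {"MetricIntervalLowerBound": 0.0,
                 "MetricIntervalUpperBound": 15.0,
                 "ScalingAdjustment": 1},   # slightly over: add 1 task
                {"MetricIntervalLowerBound": 15.0,
                 "ScalingAdjustment": 2},   # far over: add 2 tasks
            ],
        },
    }


def build_cpu_alarm(cluster, service, threshold, policy_arn):
    """Return keyword arguments for the CloudWatch put_metric_alarm call."""
    return {
        "AlarmName": f"{service}-cpu-high",
        "Namespace": "AWS/ECS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "ClusterName", "Value": cluster},
            {"Name": "ServiceName", "Value": service},
        ],
        "Statistic": "Average",
        "Period": 60,
        "EvaluationPeriods": 2,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [policy_arn],  # ARN returned by put_scaling_policy
    }


policy = build_step_scaling_policy("demo-cluster", "demo-service")
alarm = build_cpu_alarm("demo-cluster", "demo-service", 70, "arn:aws:autoscaling:placeholder")
```

The policy is created first, because the alarm needs the policy ARN from the `put_scaling_policy` response as its action. Unlike target tracking, a separate policy and alarm pair would be needed for scaling in.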

Finally, we set the alarm threshold based on the traffic load that a single container/task can handle. Load testing with a tool such as Apache JMeter is recommended to find this figure.
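One way to turn a load-test result into a threshold is simple arithmetic: scale out somewhat before a task reaches its measured capacity, then check how many tasks the expected peak would need. The sketch below assumes a request-rate metric; the function names, the headroom factor, and all numbers are hypothetical and should be replaced with real load-test figures.

```python
import math


def scale_out_threshold(per_task_capacity, headroom=0.7):
    """Load per task at which to scale out, leaving spare capacity.

    per_task_capacity: requests/second one task sustained in load testing.
    headroom: fraction of that capacity to use before adding tasks.
    """
    if per_task_capacity <= 0 or not 0 < headroom <= 1:
        raise ValueError("capacity must be > 0 and headroom in (0, 1]")
    return per_task_capacity * headroom


def tasks_needed(peak_load, per_task_capacity, headroom=0.7):
    """Number of tasks required to serve peak_load at the chosen headroom."""
    return math.ceil(peak_load / scale_out_threshold(per_task_capacity, headroom))


# Hypothetical load-test result: one task sustains 200 requests/second.
print(scale_out_threshold(200, headroom=0.5))
print(tasks_needed(950, 200, headroom=0.5))
```

The resulting task count is also a useful sanity check on the maximum number of tasks configured for the service.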

Debugging:

Initially, there were a few cases where the CloudWatch alarms were not configured correctly, so the containers did not scale as expected.
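A quick check of this kind can be scripted. The sketch below looks up alarms through the CloudWatch `describe_alarms` call and reports a few common misconfigurations; the function name and the specific checks are assumptions for illustration, not the exact steps we followed. The client is passed in so the function works with a real boto3 CloudWatch client or a stub.

```python
from typing import Iterable, List


def find_alarm_problems(cloudwatch, alarm_names: Iterable[str]) -> List[str]:
    """Report alarms that are missing, have no action, or lack data.

    cloudwatch: an object with a describe_alarms(AlarmNames=[...]) method,
    such as boto3.client("cloudwatch").
    """
    names = list(alarm_names)
    response = cloudwatch.describe_alarms(AlarmNames=names)
    found = {a["AlarmName"]: a for a in response.get("MetricAlarms", [])}

    problems = []
    for name in names:
        alarm = found.get(name)
        if alarm is None:
            problems.append(f"{name}: alarm not found")
            continue
        if not alarm.get("AlarmActions"):
            # Without an action, the alarm never triggers a scaling policy.
            problems.append(f"{name}: no scaling policy attached")
        if alarm.get("StateValue") == "INSUFFICIENT_DATA":
            # Often a sign of wrong metric dimensions or namespace.
            problems.append(f"{name}: alarm has insufficient data")
    return problems
```

For example, `find_alarm_problems(boto3.client("cloudwatch"), ["demo-service-cpu-high"])` would return an empty list when the named alarm exists, has an action, and is receiving data (the alarm name here is a placeholder).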

Apart from this, our architecture required us to know exactly when, among the multiple steps involved in starting a container, the file system is mounted inside it, so this was something we had to look into.

Conclusion:

The powerful simplicity of Amazon ECS enables us to grow from a single Docker container to managing an entire enterprise application portfolio. We recommend taking advantage of the Auto Scaling option that ECS provides, as it makes the application infrastructure highly automated.