Java Project Loom – Virtual Threads (Part 1)

JEP 425 is something I have been waiting for with bated breath. It introduces virtual threads, a new notion added to the concurrency APIs of JDK 19. It is still a preview feature, and it should become a permanent part of the JDK within the next few releases.

Virtual threads are lightweight threads that reduce the effort of writing, maintaining, and observing high-throughput applications.

In this part, we will look at the goals of this JEP.

The first goal is to enable server applications written in the simple thread-per-request style to scale with near-optimal hardware utilisation.

Let’s understand the “thread per request” model. Well, for an HTTP server, it means that each HTTP request is handled by its own thread. For a relational database server, it means that each SQL transaction is also handled by its own thread. Simple stuff.

One request = one transaction = one thread.
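To make the model concrete, here is a minimal sketch of thread-per-request using classic platform threads. It does not use a real server. Requests are plain integer IDs, and the hypothetical `handle` method fakes a blocking I/O call with `Thread.sleep`. Each request gets its own brand-new `Thread`, and that per-thread cost is what we examine next.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class ThreadPerRequest {

    // Hypothetical request handler: simulates a blocking I/O call, then builds a response.
    static String handle(int requestId) throws InterruptedException {
        Thread.sleep(10); // stands in for a network or database call
        return "response-" + requestId;
    }

    // One request = one thread: every request is handled by a new platform thread.
    static Map<Integer, String> serve(List<Integer> requestIds) throws InterruptedException {
        Map<Integer, String> responses = new ConcurrentHashMap<>();
        List<Thread> threads = new ArrayList<>();
        for (int id : requestIds) {
            Thread t = new Thread(() -> {
                try {
                    responses.put(id, handle(id));
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            t.join(); // wait until every request has been answered
        }
        return responses;
    }

    public static void main(String[] args) throws InterruptedException {
        Map<Integer, String> responses = serve(List.of(1, 2, 3));
        System.out.println(responses.get(2)); // response-2
    }
}
```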

What is the cost of this model? To understand it, we need to understand the cost of a thread in Java. A classic Java Thread is a thin wrapper around an operating system (OS) thread. This is what we now call a platform thread.

Now, some math is coming up. Keep a calculator by your side.

There are two things we need to know about platform threads. First, a platform thread needs to store its call stack in memory, and that memory is reserved upfront. For this estimate, let's take 20MB per thread. The actual reservation depends on the platform and on the -Xss setting. Second, a platform thread is a system resource, and launching one takes on the order of 1ms. So each thread costs 20MB of memory and 1ms to launch. A platform thread is, in fact, a rather expensive resource.

How can we optimise hardware utilisation with such threads?

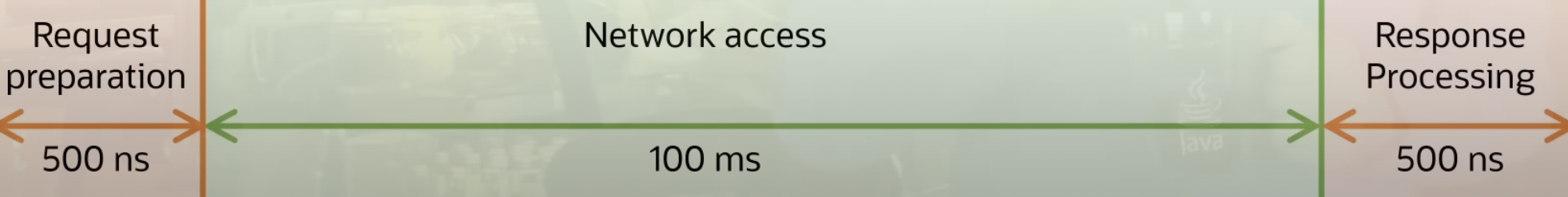

Suppose we have 16GB of memory available for our application. Divided by 20MB per thread, that leaves room for 800 threads (16 * 1000 / 20) on such a machine.

Suppose these threads are doing some I/O, like accessing a resource over the network, and that this resource responds in 100 milliseconds. Preparing the request and processing the response are in-memory operations, each on the order of 500 nanoseconds (as seen in the figure above). So all this in-memory computation takes about 1,000 nanoseconds in total. Since 1ms = 1,000,000 nanoseconds, 100ms is 100,000,000 nanoseconds. In other words, the thread spends about 100,000 times longer waiting between preparing the request and processing the response than it spends computing.

During all that time, our thread just sits there doing nothing. We shell out thousands of dollars on a CPU only to watch it sit idle. What a pity!

Each thread keeps the CPU busy only 1/100,000 of the time. So with 800 such threads, a single CPU core is used at 800 / 100,000 = 0.8%. Less than 1%. If we double the memory to 32GB, we get 1,600 threads and a CPU usage of 1.6%.

If we want a CPU usage of 90%, we need 90,000 such threads. Launching them will take 90s (90,000 * 1ms), that is, a minute and a half. They will also consume 1.8TB of memory (90,000 * 20MB). Even if that much RAM were available on the market, I am pretty sure only a few people could afford it.

So clearly, platform threads are far too expensive to scale with near-optimal hardware utilisation. This is the problem Project Loom is addressing.
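The arithmetic above can be checked with a few lines of Java. This sketch hard-codes the assumptions of the estimate as constants:

- 20MB and 1ms per platform thread
- 100ms of waiting and 1,000ns of computation per request

The class and method names are illustrative only. CPU usage is expressed relative to a single core, as in the text.

```java
public class ThreadBudget {

    // Assumptions of the back-of-the-envelope estimate (not measurements).
    static final long STACK_MB = 20;          // memory reserved per platform thread
    static final long LAUNCH_MS = 1;          // time to launch one platform thread
    static final long WAIT_NS = 100_000_000;  // 100 ms spent waiting on the network
    static final long CPU_NS = 1_000;         // 1,000 ns of in-memory computation

    // Number of platform threads that fit in the given memory (1GB counted as 1,000MB, as in the text).
    static long threadsFor(long memoryGb) {
        return memoryGb * 1_000 / STACK_MB;
    }

    // Share of a single CPU core kept busy, in percent: each thread computes CPU_NS per WAIT_NS of waiting.
    static double cpuUsagePercent(long threads) {
        return 100.0 * threads * CPU_NS / WAIT_NS;
    }

    // Number of threads needed to reach a target CPU usage, given in percent.
    static long threadsForUsage(long percent) {
        return percent * WAIT_NS / (100 * CPU_NS);
    }

    public static void main(String[] args) {
        System.out.println(threadsFor(16));                    // 800
        System.out.println(cpuUsagePercent(threadsFor(16)));   // 0.8
        System.out.println(cpuUsagePercent(threadsFor(32)));   // 1.6
        long needed = threadsForUsage(90);                     // 90000
        System.out.println(needed * LAUNCH_MS / 1_000 + " s to launch");       // 90 s to launch
        System.out.println(needed * STACK_MB / 1_000_000.0 + " TB of memory"); // 1.8 TB of memory
    }
}
```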

The second goal is to let existing code built on the classic java.lang.Thread API adopt virtual threads with minimal changes. This goal is also quite ambitious. It means that everything you could do with classic threads, you should be able to do in the same way with virtual threads. That covers several key points:

First, a virtual thread can run any Java code or native code.

Second, you do not need to learn any new concepts.

Third, you do need to unlearn certain ideas. Virtual threads are cheap, roughly 1,000 times cheaper than classic platform threads, so there is no point in trying to avoid blocking a virtual thread. Writing classic blocking code is OK. That's good news, because blocking code is much easier to write than asynchronous code.
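Here is a minimal sketch of these three points, assuming JDK 21 or later. On JDK 19 and 20, virtual threads are a preview feature and need the --enable-preview flag.

A virtual thread is still a `java.lang.Thread`, created here with `Thread.ofVirtual()`. Its task is ordinary blocking code. The class name and the 100ms sleep are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

public class BlockingOnVirtualThreads {

    // Starts `count` virtual threads that each block in Thread.sleep, then waits for all of them.
    static int runBlockingTasks(int count) throws InterruptedException {
        AtomicInteger finished = new AtomicInteger();
        List<Thread> threads = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            // Same java.lang.Thread API as before; only the way the thread is built differs.
            Thread t = Thread.ofVirtual().start(() -> {
                try {
                    Thread.sleep(100); // plain blocking call; the carrier platform thread is released meanwhile
                    finished.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads.add(t);
        }
        for (Thread t : threads) {
            t.join();
        }
        return finished.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runBlockingTasks(10_000)); // 10000
    }
}
```

Ten thousand blocking tasks would be a heavy load for platform threads on a typical machine. As virtual threads, they are created and blocked without special tuning.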

Is it a good idea to pool virtual threads? The answer is a big no. Since they are cheap, there is no need to pool them: create one per task on demand, and throw it away when the task is done.
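Instead of a pool, the JDK provides an executor that starts a new virtual thread for every submitted task: `Executors.newVirtualThreadPerTaskExecutor()`. The sketch below makes the same JDK 21+ assumption, and `sumOfSquares` is a made-up workload. It submits one short blocking task per number, and try-with-resources closes the executor, which waits for all tasks to complete.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class NoPooling {

    // Each submitted task gets its own new virtual thread; nothing is pooled or reused.
    static long sumOfSquares(int count) throws InterruptedException, ExecutionException {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<Long>> futures = new ArrayList<>();
            for (int i = 1; i <= count; i++) {
                long n = i;
                futures.add(executor.submit(() -> {
                    Thread.sleep(10); // simulated blocking call
                    return n * n;
                }));
            }
            long total = 0;
            for (Future<Long> f : futures) {
                total += f.get();
            }
            return total;
        } // close() waits for all submitted tasks to finish
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sumOfSquares(100)); // 338350
    }
}
```

If you need to cap concurrency, for example to avoid overloading a downstream service, the usual tool is a `Semaphore` rather than a fixed-size pool of virtual threads.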

Two more pieces of good news about virtual threads. First, thread-local variables work in the same way. Second, synchronization also works.

Several things need to be said about synchronization, though. A virtual thread still runs on top of a platform thread, called its carrier thread. The trick is that the virtual thread can be detached from its platform thread, so that this platform thread can run another virtual thread.

When is it detached? As soon as the virtual thread blocks. That can happen on an I/O operation, on a synchronization operation, or when the thread is put to sleep.
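Here is a small sketch of thread locals on virtual threads, assuming JDK 21+. Each virtual thread stores its own value in a `ThreadLocal`, then blocks in `Thread.sleep`, a point where it can be detached from its carrier. It then reads the value back. `REQUEST_ID` and `run` are hypothetical names for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class ThreadLocalsOnVirtualThreads {

    // Hypothetical per-request context kept in a thread-local variable.
    static final ThreadLocal<String> REQUEST_ID = new ThreadLocal<>();

    // Starts `count` virtual threads; returns, per thread index, the value each one read back after blocking.
    static Map<Integer, String> run(int count) throws InterruptedException {
        Map<Integer, String> seen = new ConcurrentHashMap<>();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            int id = i;
            threads.add(Thread.ofVirtual().start(() -> {
                REQUEST_ID.set("request-" + id);
                try {
                    Thread.sleep(50); // may be detached here and resumed on another carrier thread
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
                seen.put(id, REQUEST_ID.get()); // still this virtual thread's own value
            }));
        }
        for (Thread t : threads) {
            t.join();
        }
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(1_000).get(42)); // request-42
    }
}
```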

One caveat: if a virtual thread is executing code inside a synchronized block, it cannot be detached from its platform thread. It is said to be pinned. So for as long as it runs the synchronized block, it holds on to a platform thread.

If this time is short, that's OK, and there is no need to panic. If it is long, for instance because the block performs a long I/O operation, then the situation is not great and we may need to act. One fix is to replace the synchronized block with a java.util.concurrent.locks.ReentrantLock.

This limitation of synchronized blocks was expected to be lifted in a future release. It was still present when virtual threads became final in JDK 21, and was eventually addressed in JDK 24 by JEP 491.
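Here is a minimal sketch of that replacement, assuming JDK 21+. The hypothetical `slowBlockingCall` stands in for the long I/O operation. The commented-out synchronized version is the one that would pin the carrier thread. The `ReentrantLock` version guards the same critical section, while a virtual thread blocked inside it can still be detached.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

public class GuardedResource {

    private final ReentrantLock lock = new ReentrantLock();
    private int accesses = 0; // guarded by lock

    // Before (pins the carrier while blocked, before JDK 24):
    //   void access() throws InterruptedException { synchronized (this) { slowBlockingCall(); accesses++; } }

    // After: the same critical section guarded by a ReentrantLock.
    void access() throws InterruptedException {
        lock.lock();
        try {
            slowBlockingCall(); // the virtual thread can be detached from its carrier while blocked here
            accesses++;
        } finally {
            lock.unlock(); // always release the lock, even if the call throws
        }
    }

    int accesses() {
        lock.lock();
        try {
            return accesses;
        } finally {
            lock.unlock();
        }
    }

    // Hypothetical stand-in for a long I/O operation.
    private static void slowBlockingCall() throws InterruptedException {
        Thread.sleep(20);
    }

    // Runs `count` virtual threads that each go through the guarded section once.
    static int runConcurrently(int count) throws InterruptedException {
        GuardedResource resource = new GuardedResource();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            threads.add(Thread.ofVirtual().start(() -> {
                try {
                    resource.access();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }));
        }
        for (Thread t : threads) {
            t.join();
        }
        return resource.accesses();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runConcurrently(50)); // 50
    }
}
```

The `try`/`finally` around `unlock()` is the price of leaving `synchronized`. Unlike a monitor, a `ReentrantLock` is not released automatically when the block exits.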

Reactive programming also tries to solve this problem. But reactive programming is a complete paradigm shift in the way we write code. It is hard to learn, hard to understand, hard to profile, and even harder to debug, and writing tests for it is a nightmare.

I hope you now understand the problems Project Loom is trying to solve. In the coming parts, we will look at some code. I am excited about this project. Let me know your reaction in the comments.

This blog post was originally published on Medium.