Drupal AI Chatbot with Custom Data

Drupal chatbots powered by AI use Large Language Models (LLMs) to understand user queries and provide intelligent, context-aware responses. These models rely on transformer-based neural networks, which allow the chatbot to interpret questions, generate relevant answers, and maintain a natural conversational flow. By integrating LLaMA3 via Ollama and connecting it to a vector database, your Drupal chatbot can offer smarter search, handle complex queries, and deliver a more interactive experience for users.

LLMs (Large Language Models)

- LLMs are AI models trained on very large text corpora to understand and generate human-like language.

- They are built on deep neural networks called transformers, which predict the next word or token in a sequence. Repeating that prediction step produces coherent, context-aware text.
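To make next-token prediction concrete, here is a toy sketch in Python. The three-word vocabulary and the scores are invented for illustration. A real LLM computes such scores with a transformer over a vocabulary of many thousands of tokens, and it usually samples from the probabilities rather than always taking the top one.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def pick_next_token(vocab, scores):
    """Greedy decoding: return the most probable token and its probability."""
    probs = softmax(scores)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs[best]

# Hypothetical scores a model might assign after "The capital of India is"
vocab = ["Delhi", "Mumbai", "banana"]
scores = [4.0, 2.0, -1.0]
token, prob = pick_next_token(vocab, scores)
print(token, round(prob, 3))
```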

Why Use Ollama and LLaMA3 On-Premises or in the Cloud

Running Ollama with the LLaMA3 model on your own infrastructure, on-premises or in the cloud, offers several advantages:

- Privacy and Control: Sensitive data remains under your supervision, avoiding third-party API exposure.

- Cost Efficiency: You avoid recurring per-request API fees while still leveraging powerful AI capabilities.

- Faster Iteration: Running the model on infrastructure you control allows rapid experimentation and tuning without depending on a third-party API's availability, rate limits, or latency.

- Customisable Development: Easily modify prompts, integrate with existing systems, and test multiple AI models without restrictions.

Vector DB

A Vector Database (Vector DB) is optimised to store, index, and search high-dimensional vector data used in AI applications.

- Vectors represent complex data like text, images, or audio in numerical form for similarity and nearest-neighbor searches.

- Embeddings are generated by AI models to convert raw data into fixed-length vectors capturing semantic meaning.

- Milvus is a leading open-source vector database designed for efficient and scalable similarity search on large datasets.

- Running Milvus on-premises or in the cloud allows faster development, testing, and secure AI model experimentation before production deployment.
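The idea of similarity search over embeddings can be sketched in a few lines of Python. The vectors and document names below are made up for illustration, and the brute-force comparison is only a teaching aid. A vector database such as Milvus uses specialised indexes to do this efficiently at scale.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query, items, k=1):
    """Return the k item names whose vectors are most similar to the query."""
    ranked = sorted(
        items,
        key=lambda name: cosine_similarity(query, items[name]),
        reverse=True,
    )
    return ranked[:k]

# Made-up 3-dimensional "embeddings"; real ones have hundreds of dimensions.
documents = {
    "drupal-install-guide": [0.9, 0.1, 0.0],
    "cooking-recipes": [0.0, 0.2, 0.9],
    "drupal-module-howto": [0.8, 0.3, 0.1],
}
query_vector = [1.0, 0.2, 0.0]
print(nearest(query_vector, documents, k=2))
```

The two Drupal-related vectors point in nearly the same direction as the query, so they rank ahead of the unrelated one.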

Prerequisites for Drupal AI Chatbot Setup

Step 1: Download Ollama

Download and install Ollama based on your operating system:

- macOS: Ollama for Mac

- Ubuntu/Linux: Ollama for Linux

- Windows: Ollama for Windows

Step 2: Start Ollama

- Open your terminal or command prompt.

- Navigate to the directory where Ollama is installed.

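- Confirm the installation by checking the version:

ollama --version

- If the Ollama server is not already running, start it with:

ollama serve

- Default Ollama URL (API endpoint): http://localhost:11434/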
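To confirm that the API endpoint responds, a small Python check along the following lines may help. It is a sketch that assumes the default URL and uses Ollama's `/api/tags` endpoint, which lists locally installed models. The helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default endpoint; adjust if yours differs

def model_names(tags_payload):
    """Extract model names from the JSON body returned by /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]

def fetch_tags(base_url=OLLAMA_URL):
    """Ask a running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)

if __name__ == "__main__":
    try:
        print("Ollama is up. Installed models:", model_names(fetch_tags()))
    except (OSError, ValueError) as exc:
        print("Could not reach Ollama:", exc)
```

The list is empty on a fresh install and should include the pulled models once Step 3 is complete.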

Step 3: Pull AI Models

Pull the necessary models for chat and embedding functionality:

- LLaMA3 model for chat:

ollama pull llama3

- Model for generating embeddings:

ollama pull nomic-embed-text
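Once the embedding model is pulled, you can try it outside Drupal. The sketch below assumes the default Ollama URL and uses the `/api/embeddings` endpoint with a `prompt` field. The helper names and the sample sentence are illustrative only.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default endpoint; adjust if yours differs

def build_embedding_request(text, model="nomic-embed-text"):
    """Build the JSON body for Ollama's /api/embeddings endpoint."""
    return {"model": model, "prompt": text}

def extract_embedding(response_payload):
    """Return the vector (a list of floats) from an /api/embeddings response."""
    return response_payload["embedding"]

def embed(text, base_url=OLLAMA_URL):
    """Send text to a running Ollama server and return its embedding."""
    body = json.dumps(build_embedding_request(text)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/embeddings",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return extract_embedding(json.load(resp))

if __name__ == "__main__":
    try:
        vector = embed("Drupal is an open-source content management system.")
        print("Embedding dimensions:", len(vector))
    except (OSError, ValueError, KeyError) as exc:
        print("Embedding request failed:", exc)
```

The printed dimension count is worth noting, since a vector database collection generally has to be created with a matching dimension.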

Step 4: Run Ollama Chat Example

- Start a chat session with the LLaMA3 model:

ollama run llama3

- Example prompt:

>>> What is the capital of India?

- Additional Resources: Ollama Docs
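The same question can be sent through the HTTP API, which is the kind of interface an integration talks to rather than the interactive prompt. This is a minimal sketch assuming the default URL and Ollama's `/api/chat` endpoint with streaming turned off. The helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default endpoint; adjust if yours differs

def build_chat_request(prompt, model="llama3"):
    """Build the JSON body for Ollama's /api/chat endpoint (non-streaming)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }

def extract_reply(response_payload):
    """Pull the assistant text out of a non-streaming /api/chat response."""
    return response_payload["message"]["content"]

def chat(prompt, base_url=OLLAMA_URL):
    """Send one prompt to a running Ollama server and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))

if __name__ == "__main__":
    try:
        print(chat("What is the capital of India?"))
    except (OSError, ValueError, KeyError) as exc:
        print("Chat request failed:", exc)
```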

Drupal AI Chatbot Setup

Step 1: Install Drupal

Begin by creating a new Drupal project:

composer create-project drupal/recommended-project chatbotdemo

For beginners, detailed installation instructions are available here: Drupal Installation Guide

Step 2: Install Required Modules

- Run the following composer commands to install the core AI modules, Ollama provider, Milvus provider, and AI search block:

composer require drupal/ai drupal/search_api

composer require drupal/ai_provider_ollama:^1.1@beta drupal/ai_vdb_provider_milvus:^1.1@beta drupal/ai_search_block:^1.0@RC

Step 3: Milvus Installation with DDEV

- Copy the Milvus Docker Compose example:

cp web/modules/contrib/ai_vdb_provider_milvus/docs/docker-compose-examples/ddev-example.docker-compose.milvus.yaml .ddev/docker-compose.milvus.yaml

- Restart DDEV to apply changes:

ddev restart

Step 4: Milvus Database Custom Setup (Skip if Using DDEV)

- Download and run Milvus standalone using Docker:

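docker run -d --name milvus-standalone -p 19530:19530 -p 19121:19121 milvusdb/milvus:latest

- Documentation: https://milvus.io/docs (port mappings and startup options differ between Milvus releases, so check the instructions for the version you install)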
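After starting the container, a quick TCP check can tell you whether anything is listening on the published port. This sketch only tests connectivity on port 19530 from the command above. It does not verify that Milvus itself is healthy, and the function name is illustrative.

```python
import socket

def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

if __name__ == "__main__":
    # 19530 is the first port published by the docker run command.
    print("Milvus port reachable:", is_port_open("localhost", 19530))
```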

Step 5: Enable Drupal Modules

- Enable the AI Search module; this will also enable dependencies such as Search API, AI Core, and Key modules.

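- Enable the Ollama Provider module.

- Enable the Milvus provider and AI Search Block modules installed in Step 2, since later steps rely on them.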

Step 6: Configure Ollama Provider

- Navigate to Drupal Configuration → Provider Settings and set up the Ollama provider with your desired configuration.

Ollama Provider Setup

Step 7: Configure Default AI Providers

- Navigate to Drupal Configuration → AI Default Settings and set the default providers with your desired configuration.

AI Provider Chat

AI Provider

Step 8: Configure Vector DB

- Navigate to Drupal Configuration → Vector DBs Settings → Milvus Configuration

Vector Provider Setup

Access Milvus DB UI:

Milvus DB UI

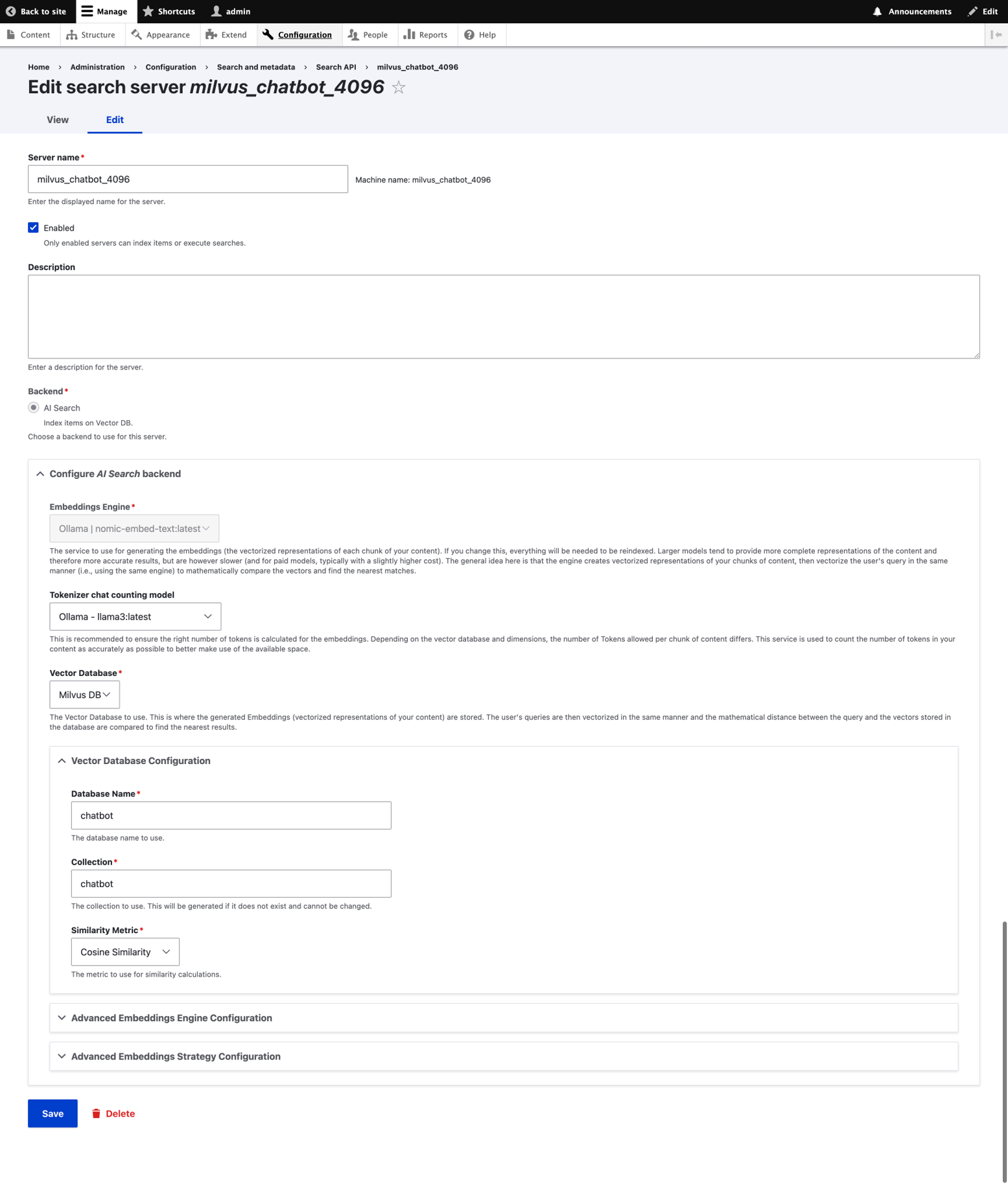

Step 9: Configure Search API Server

- Navigate to Drupal Configuration → Search API → Add Server

Search API Server Setup

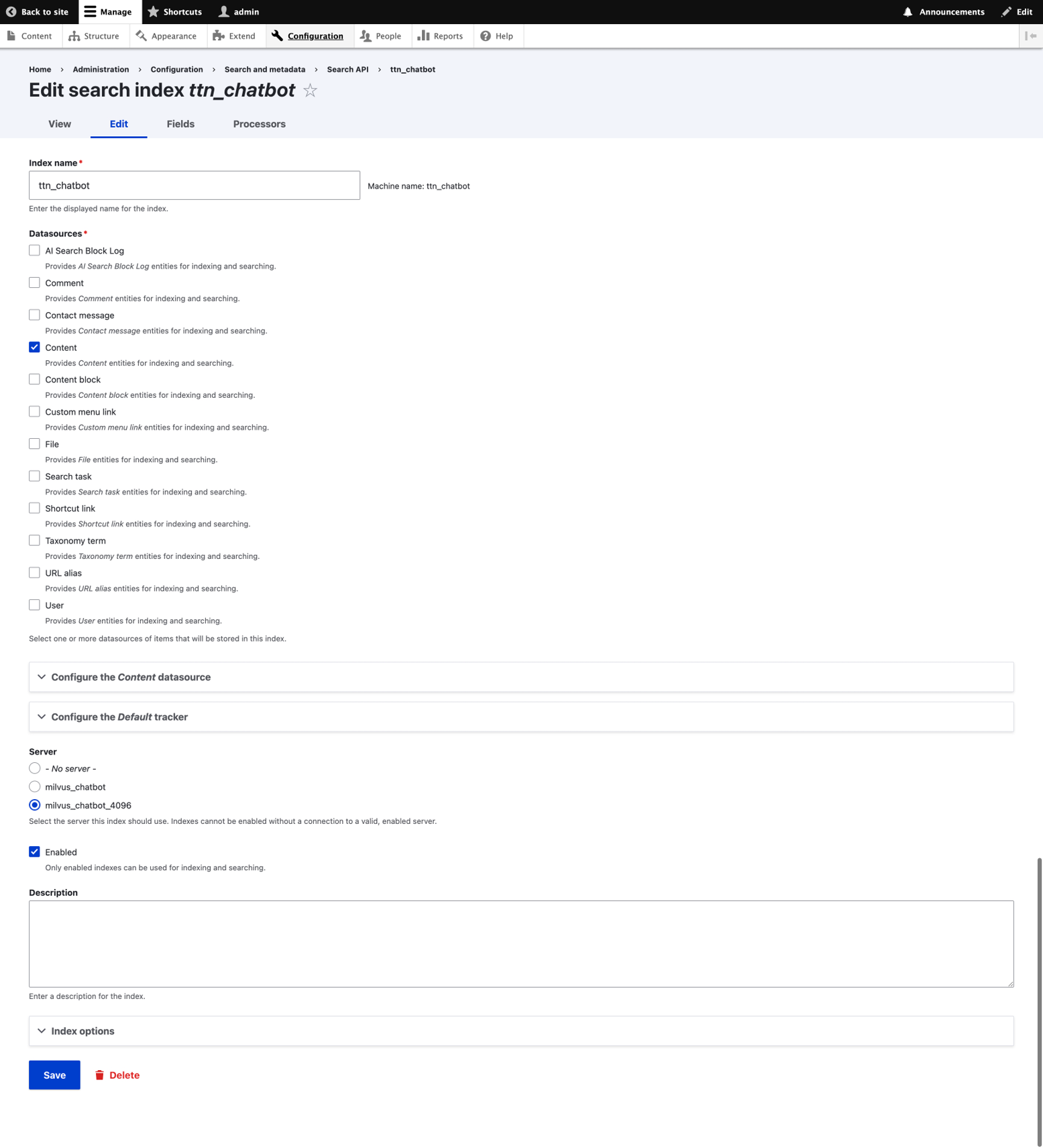

Step 10: Configure Search API Index

- Navigate to Drupal Configuration → Search API → Add Index

Search API Index Setup

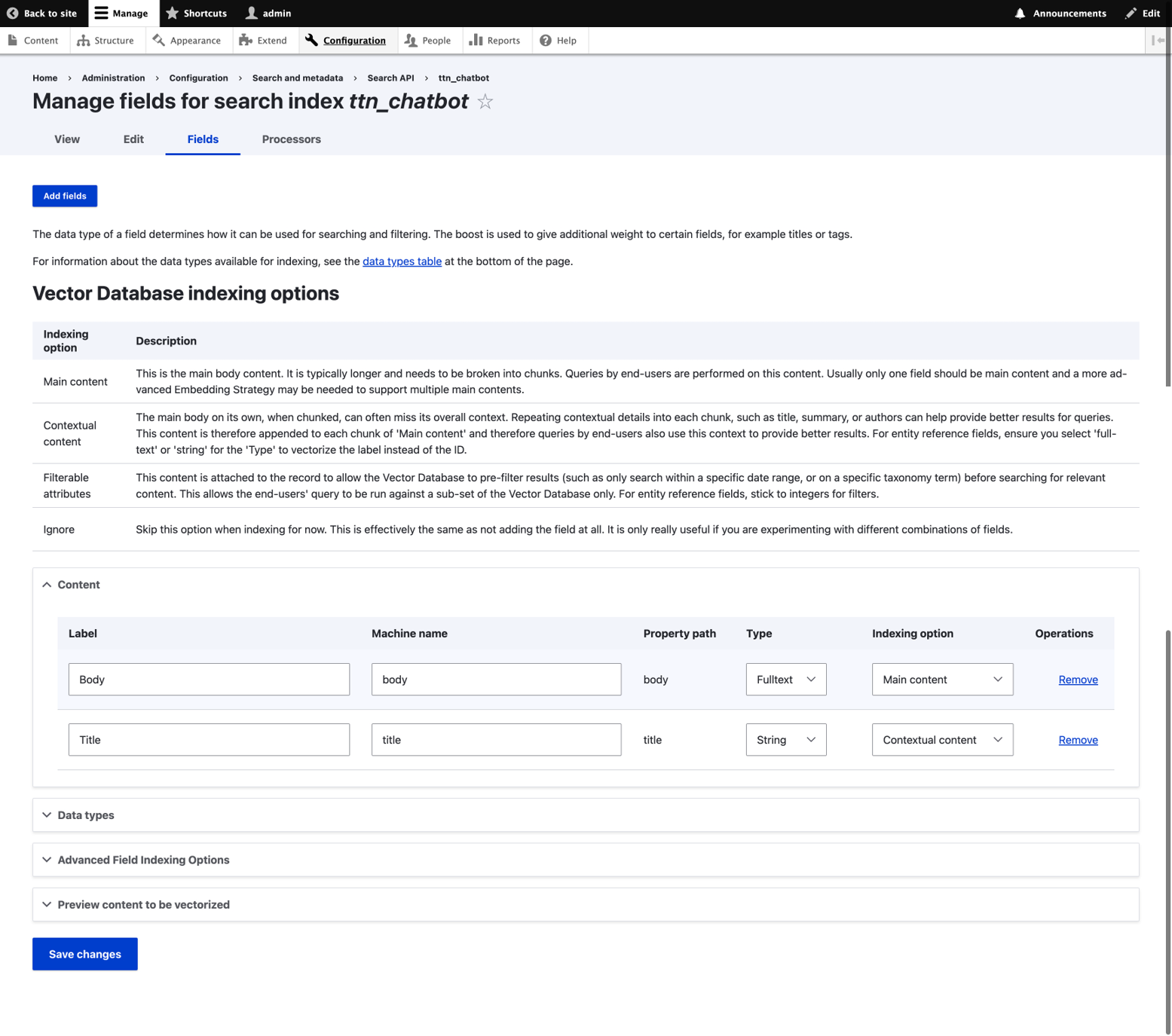

Fields to Index

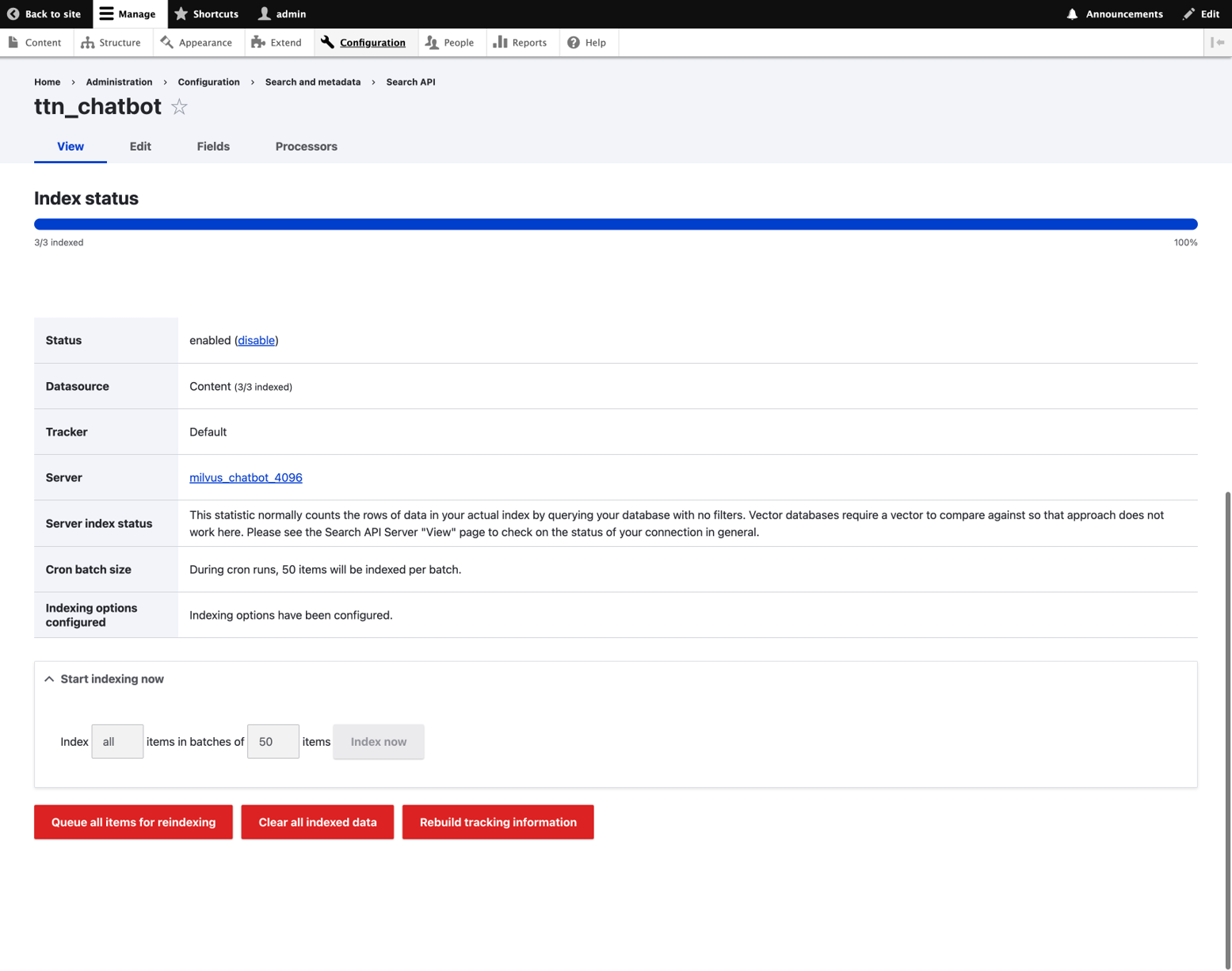

Step 11: Configure Search API – Indexing Data

- Navigate to Drupal Configuration → Search API, open your index, and start indexing.

Search API Index

Step 12: Verify Your Indexed Data in Vector DB (Milvus)

Vector DB – Milvus Indexed Data

Step 13: Configure/Enable AI Search Block

- Navigate to Structure → Block layout

Enable AI Search Block

Working AI Search Block For Your Specific Data


Conclusion

By integrating an LLM like LLaMA3 through Ollama, on-premises or in the cloud, and adding a vector database like Milvus to Drupal, you create a powerful, private, and scalable AI chatbot solution. This configuration enhances data privacy, improves cost efficiency, and allows greater customization and quicker experimentation, all without depending on third-party AI APIs.