Manage Stateful ECS Services using REX-Ray

Introduction

We were looking for a way to mount hundreds of GBs of storage in the ECS tasks spawned by specific ECS services. An ECS cluster does provide an option to increase the storage allocated to tasks, but that setting applies to every ECS service running in the cluster. Sometimes, we also need the same storage to remain available to new ECS tasks.

REX-Ray is an open-source storage management tool built on the libStorage framework. It supports both of our use cases and therefore lets us deploy stateful applications on ECS. The same functionality extends to other use cases, such as running databases or Jenkins in a containerized environment. It works with multiple container orchestration tools (Kubernetes, Mesos frameworks, Docker Swarm, etc.) to automatically orchestrate storage tasks between hosts in the cluster.

Problem Statement

- To manage stateful ECS services so that they persist and maintain their data after the container’s life cycle has ended.

- To provide additional storage in ECS tasks where multiple large files are downloaded and processed.

Solution Approach

We require additional storage at a specific path inside ECS tasks for a particular ECS service.

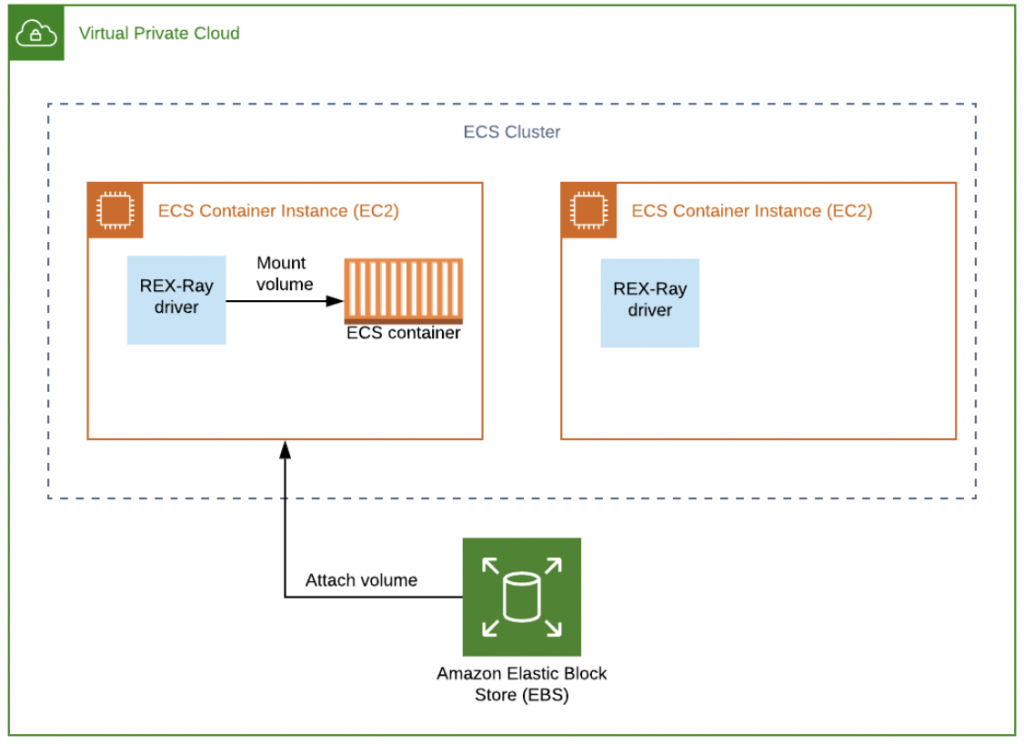

REX-Ray, a Docker volume plugin, provides the capability to use shared storage as a Docker volume. On AWS, it supports using EBS volumes as Docker volumes in ECS tasks.

Steps to configure the plugin:

- Every EC2 instance running inside an ECS cluster should have the plugin installed.

Add the following command to the user data in the launch template used to launch instances in the ECS cluster:

# docker plugin install rexray/ebs --grant-all-permissions

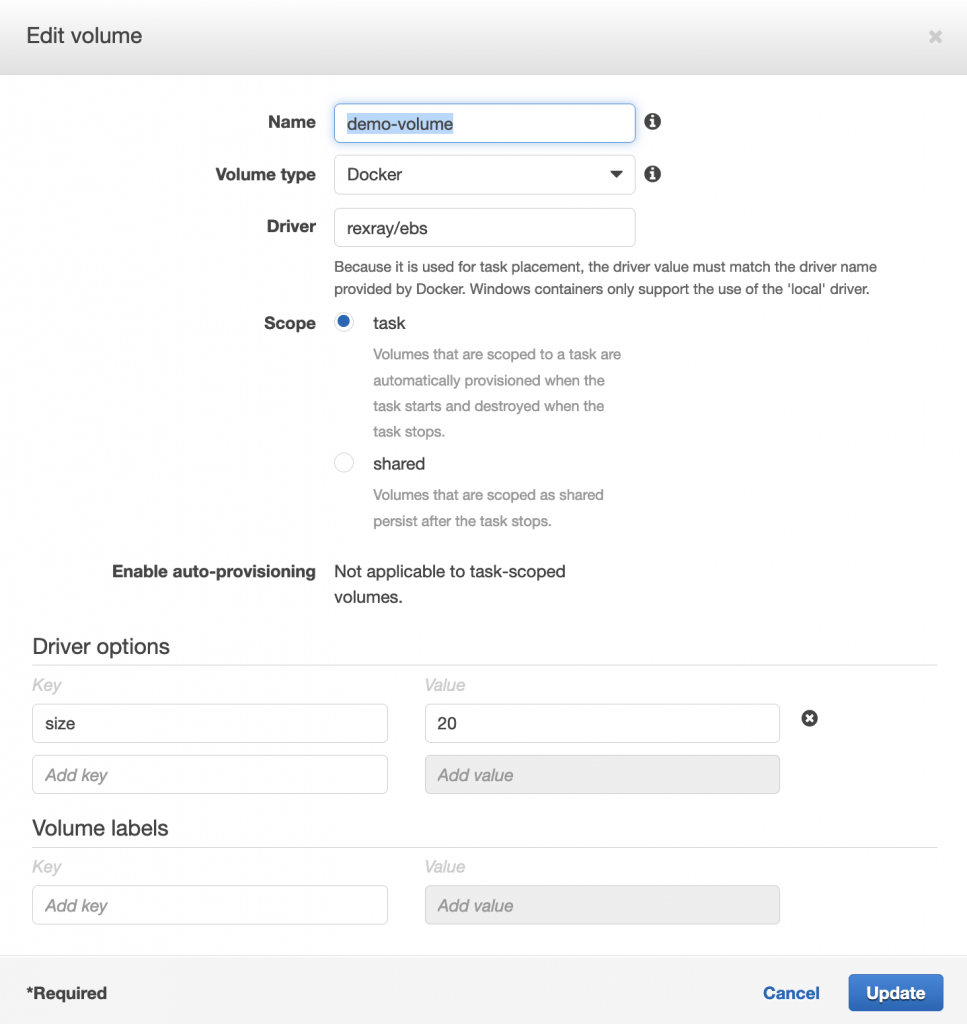

- Configure the task definition to use the REX-Ray plugin as shown below, under the “Add Volume” section:

  - “Name”: The EBS volume will be created using this name. If the volume already exists, provide its name.

  - “Volume type”: Select the volume type as “Docker”.

  - “Driver”: Specify the driver name as “rexray/ebs”.

  - “Scope”: Select “task” if you need a new volume to be created with every new task launched under the ECS service. Select “shared” if you need the new task to use the existing volume used by a previously running task. When using “shared” mode, check how it behaves when running multiple tasks in single-AZ and multi-AZ environments.

  - “Enable auto-provisioning”: This option applies only in “shared” mode. In “shared” mode, the task either uses the existing volume or, if the volume is not present, the plugin creates one for you.

  - “Driver options”: The volume size can be specified here for cases where a new volume is created.

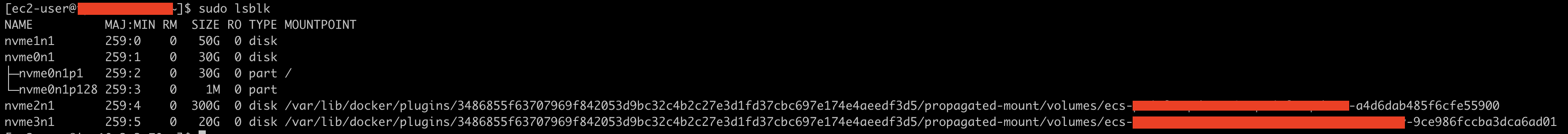
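The console settings above correspond to a `volumes` entry in the task definition JSON. The following is a minimal sketch of that entry, not a definitive configuration. The family, container name, image, volume name, mount path, and size are hypothetical placeholders, and the `size` and `volumetype` driver option keys are assumptions that should be checked against the REX-Ray EBS driver documentation.

```python
import json


def rexray_volume(name, scope="shared", autoprovision=True,
                  size_gib=None, volume_type=None):
    """Build one entry for the "volumes" list of an ECS task definition."""
    if scope not in ("task", "shared"):
        raise ValueError("scope must be 'task' or 'shared'")

    config = {"scope": scope, "driver": "rexray/ebs"}

    # Auto-provisioning only applies to shared-scope volumes.
    if scope == "shared":
        config["autoprovision"] = autoprovision

    # Driver options are passed to the plugin as strings.
    # The key names below are assumptions; verify them for your plugin version.
    driver_opts = {}
    if size_gib is not None:
        driver_opts["size"] = str(size_gib)
    if volume_type is not None:
        driver_opts["volumetype"] = volume_type
    if driver_opts:
        config["driverOpts"] = driver_opts

    return {"name": name, "dockerVolumeConfiguration": config}


# Hypothetical task definition fragment: every name and value is a placeholder.
task_definition = {
    "family": "stateful-app",
    "containerDefinitions": [
        {
            "name": "app",
            "image": "example/app:latest",
            "memory": 512,
            # Mount the volume at a specific path inside the container.
            "mountPoints": [
                {"sourceVolume": "app-data", "containerPath": "/data"}
            ],
        }
    ],
    "volumes": [
        rexray_volume("app-data", scope="shared", size_gib=200,
                      volume_type="gp2")
    ],
}

print(json.dumps(task_definition, indent=2))
```

The printed JSON could then be registered as a new task definition revision. Passing `scope="task"` instead produces an entry without the auto-provisioning field, matching the console behavior described above.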

- The next step is to update the service with the new task revision created after the above configuration. Once the service is deployed, you should be able to see similar output on EC2 instance using “lsblk” command:

Debugging

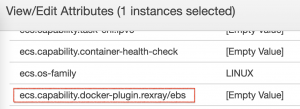

- Verify that the REX-Ray plugin is enabled on the container instance by viewing its attributes. Search for “rexray” in the list; if it is present, the plugin is enabled.

- The debug flag can be used with any command to get verbose output:

# rexray volume -l debug

- Ensure that EBS creation permissions are attached to the EC2 instances.

- When “Scope” is set to “task”, the REX-Ray plugin does not delete the detached volumes when the ECS tasks terminate, so they accumulate until they are cleaned up separately.
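Because task-scoped volumes are left behind after their tasks stop, it can help to list the detached ones periodically. The sketch below only shows the filtering step, assuming volume records shaped like the entries of an EC2 DescribeVolumes response (`VolumeId`, `State`, `Attachments`). The sample records and IDs are made up for illustration, and fetching the real list from AWS is left out.

```python
def detached_volumes(volumes):
    """Return the IDs of volumes that are not attached to any instance."""
    return [
        v["VolumeId"]
        for v in volumes
        # "available" is the state EC2 reports for an unattached volume.
        if v.get("State") == "available" and not v.get("Attachments")
    ]


# Hypothetical sample data mimicking DescribeVolumes entries.
sample_volumes = [
    {"VolumeId": "vol-0aaa", "State": "in-use",
     "Attachments": [{"InstanceId": "i-0123"}]},
    {"VolumeId": "vol-0bbb", "State": "available", "Attachments": []},
    {"VolumeId": "vol-0ccc", "State": "available", "Attachments": []},
]

for volume_id in detached_volumes(sample_volumes):
    print(volume_id)
```

Not every detached volume is necessarily safe to remove: a “shared” volume is also detached while no task is using it. Review the resulting list, for example by volume name or tags, before deleting anything.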

More Use Cases:

We have been using this plugin for over 3 years, and it is working as expected.

Other use cases where we use it:

- Running LDAP in ECS and maintaining the configuration in EBS volumes using the REX-Ray plugin in “shared” mode.

- Running Nexus as an ECS service while maintaining its configuration in an EBS volume using this plugin.

REX-Ray supports multiple operating systems and storage providers and can be used in numerous ways, depending on the requirement.

Reference Link: https://rexray.readthedocs.io/en/stable/