Networking layer with Combine

What is Combine?

The Combine framework, rolled out in 2019, is built on the Functional Reactive Programming (FRP) paradigm, much like RxSwift and ReactiveSwift. It is developed by Apple and can be seen as a first-party alternative to both.

Let’s read from the documentation:

Customize handling of asynchronous events by combining event-processing operators.

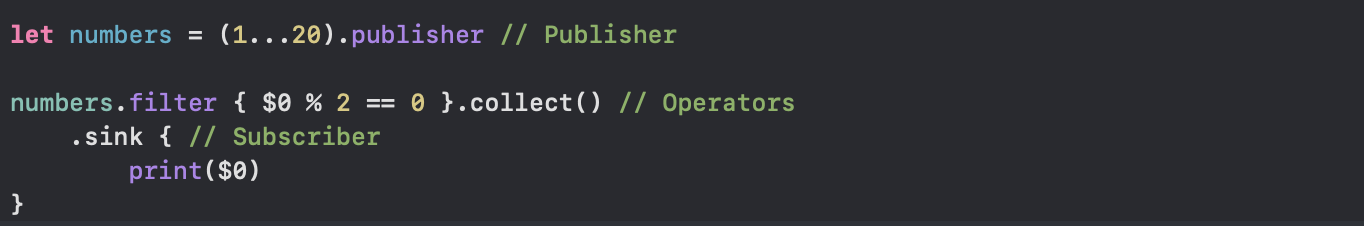

This article assumes a basic understanding of the three key moving pieces in Combine (publishers, operators, and subscribers) and of how they fit together into a Combine pipeline.
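As a quick refresher, here is a minimal sketch of those three pieces working together. It uses a plain array publisher rather than anything network-related, and the variable names are illustrative only.

```swift
import Combine

// Publisher: emits the integers 1 through 5, then completes.
let numbers = [1, 2, 3, 4, 5].publisher

// Operators: keep the even values, then square them.
let evenSquares = numbers
    .filter { $0 % 2 == 0 }
    .map { $0 * $0 }

// Subscriber: `sink` receives every value that survives the operators.
var received: [Int] = []
let cancellable = evenSquares.sink { received.append($0) }

print(received) // [4, 16]
```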

In this post, we’ll talk about implementing a networking layer using Combine. Along the way we will touch on topics like protocols, generics, protocols with associated types, and of course Combine’s flavor of URLSession.

Before we jump into writing the networking layer using Combine, let’s revisit the callback-style network layer we have generally been implementing in our code.

In this networking layer, we have defined a few protocols that make up our API abstraction. To highlight Combine’s way of building a networking layer, it is most instructive to refactor the callback (closure) based one. On a refactoring adventure like this, it’s a good idea to start by changing the protocols first and update the implementations later.

Link to CallBack Type Request Protocol

Before we update the protocols, let’s spend some time understanding them and their purpose. As mentioned, we’ll brush up on a few advanced Swift topics like protocols, generics, and protocols with associated types.

As the code above shows, there are many protocols. Do we really need them? Honestly, no, but we should use them if we want our app and the API to be decoupled, which in turn lets us mock the API easily for testing.

Let me say it loud: Protocols are AWESOME.

Now let’s analyze the protocols in detail:

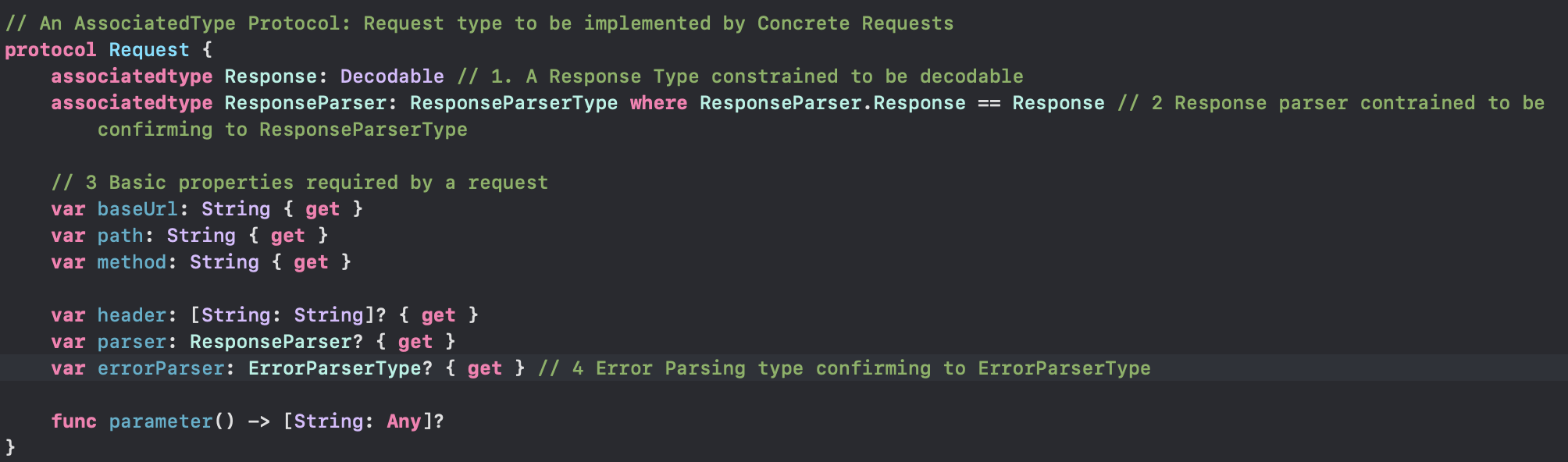

Request:

// An AssociatedType Protocol: Request type to be implemented by Concrete Requests

Link to CallBack Type Request Protocol

This Request type is a generic protocol with associated types (an ATP). It defines two associated types to operate on:

- Response, constrained to be Decodable.

- ResponseParser, constrained to ResponseParserType, where ResponseParserType is another ATP over a Response type. We also constrain ResponseParser so that the parser is guaranteed to be for the correct Response type.

Beyond the associated types:

- We have defined a few required properties for the request.

- Next, we have an errorParser of the ErrorParserType protocol type, which allows the implementing Request type to use a custom error parser instead of the default one.
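The shape described in the list above can be sketched roughly as follows. This is not the demo project's actual code: the property names (baseURL, path), the parse(data:) signatures, JSONResponseParser, DefaultErrorParser, Post, and PostsRequest are all assumptions made for illustration.

```swift
import Foundation

// Hypothetical parser abstraction: an ATP over the type it produces.
protocol ResponseParserType {
    associatedtype Response
    func parse(data: Data) throws -> Response
}

// Hypothetical error parser: extracts an error from a failed call's payload.
protocol ErrorParserType {
    func parse(data: Data) -> Error?
}

// Assumed default parsers, so concrete requests only override what they need.
struct JSONResponseParser<T: Decodable>: ResponseParserType {
    func parse(data: Data) throws -> T {
        try JSONDecoder().decode(T.self, from: data)
    }
}

struct DefaultErrorParser: ErrorParserType {
    func parse(data: Data) -> Error? { nil }
}

protocol Request {
    associatedtype Response: Decodable
    // The where clause guarantees the parser produces this request's Response.
    associatedtype ResponseParser: ResponseParserType
        where ResponseParser.Response == Response

    // Required properties (illustrative names).
    var baseURL: URL { get }
    var path: String { get }
    var responseParser: ResponseParser { get }
    var errorParser: ErrorParserType { get }
}

extension Request {
    // Falls back to the default error parser unless a request provides its own.
    var errorParser: ErrorParserType { DefaultErrorParser() }
}

struct Post: Decodable {
    let id: Int
    let title: String
}

struct PostsRequest: Request {
    typealias Response = [Post]
    let baseURL = URL(string: "https://example.com")! // placeholder host
    let path = "/posts"
    let responseParser = JSONResponseParser<[Post]>()
}

// The parser attached to the request can only ever produce [Post].
let json = Data(#"[{"id": 1, "title": "Hello"}]"#.utf8)
let posts = (try? PostsRequest().responseParser.parse(data: json)) ?? []
print(posts.map { $0.title }) // ["Hello"]
```

Pairing a PostsRequest with a parser for some other type would fail to compile, which is the guarantee the where clause is there to provide.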

That pretty much describes the Request protocol that our app code will use to declare every request type.

But this protocol remains as-is. It doesn’t use a callback, so we don’t have to convert it to use Combine.

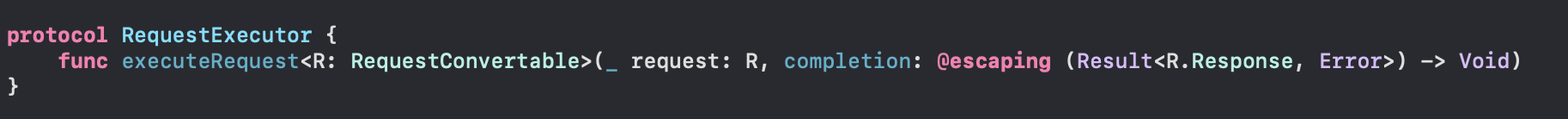

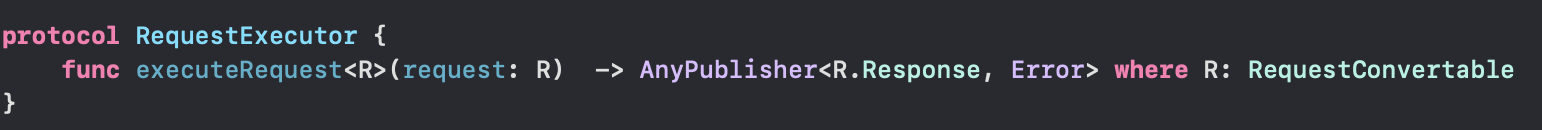

Next, RequestExecutor:

This protocol declares the abstraction for the request executor implementation. It lets us swap out the API (framework) that actually makes the requests.

Note the use of a generic type, but let me be clear that this is just a protocol with a function that accepts generic requests. Because the generic parameter lives on the function rather than on the protocol, the protocol can still be used as a type, which gives us much of what type erasure provides for a generic protocol.

And this is not an ATP (associated type protocol).
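To make this concrete, here is a hedged sketch of what a callback-style RequestExecutor could look like. The exact signature in the demo project may differ: the Result-based completion, the reduced Request protocol, and the StubExecutor, Post, and PostRequest types are assumptions for illustration.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on non-Apple platforms
#endif

protocol RequestConvertible {
    func asURLRequest() throws -> URLRequest
}

// Reduced to the one associated type this sketch needs.
protocol Request {
    associatedtype Response: Decodable
}

// No associated types here: the generic parameter belongs to the method.
protocol RequestExecutor {
    func executeRequest<R: Request & RequestConvertible>(
        request: R,
        completion: @escaping (Result<R.Response, Error>) -> Void
    )
}

// Hypothetical test double that answers from canned JSON instead of the network.
struct StubExecutor: RequestExecutor {
    let cannedJSON: Data

    func executeRequest<R: Request & RequestConvertible>(
        request: R,
        completion: @escaping (Result<R.Response, Error>) -> Void
    ) {
        completion(Result { try JSONDecoder().decode(R.Response.self, from: cannedJSON) })
    }
}

struct Post: Decodable { let id: Int }

struct PostRequest: Request, RequestConvertible {
    typealias Response = Post
    func asURLRequest() throws -> URLRequest {
        URLRequest(url: URL(string: "https://example.com/posts/1")!) // placeholder URL
    }
}

// The protocol is usable as a plain type, so a stub can stand in for the real client.
let executor: RequestExecutor = StubExecutor(cannedJSON: Data(#"{"id": 1}"#.utf8))
var fetchedID: Int?
executor.executeRequest(request: PostRequest()) { result in
    if case .success(let post) = result { fetchedID = post.id }
}
print(fetchedID as Any) // Optional(1)
```

Being able to declare `let executor: RequestExecutor` is what makes mocking for tests straightforward.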

That pretty much makes up our networking API. Let’s look at the implementation of RequestExecutor.

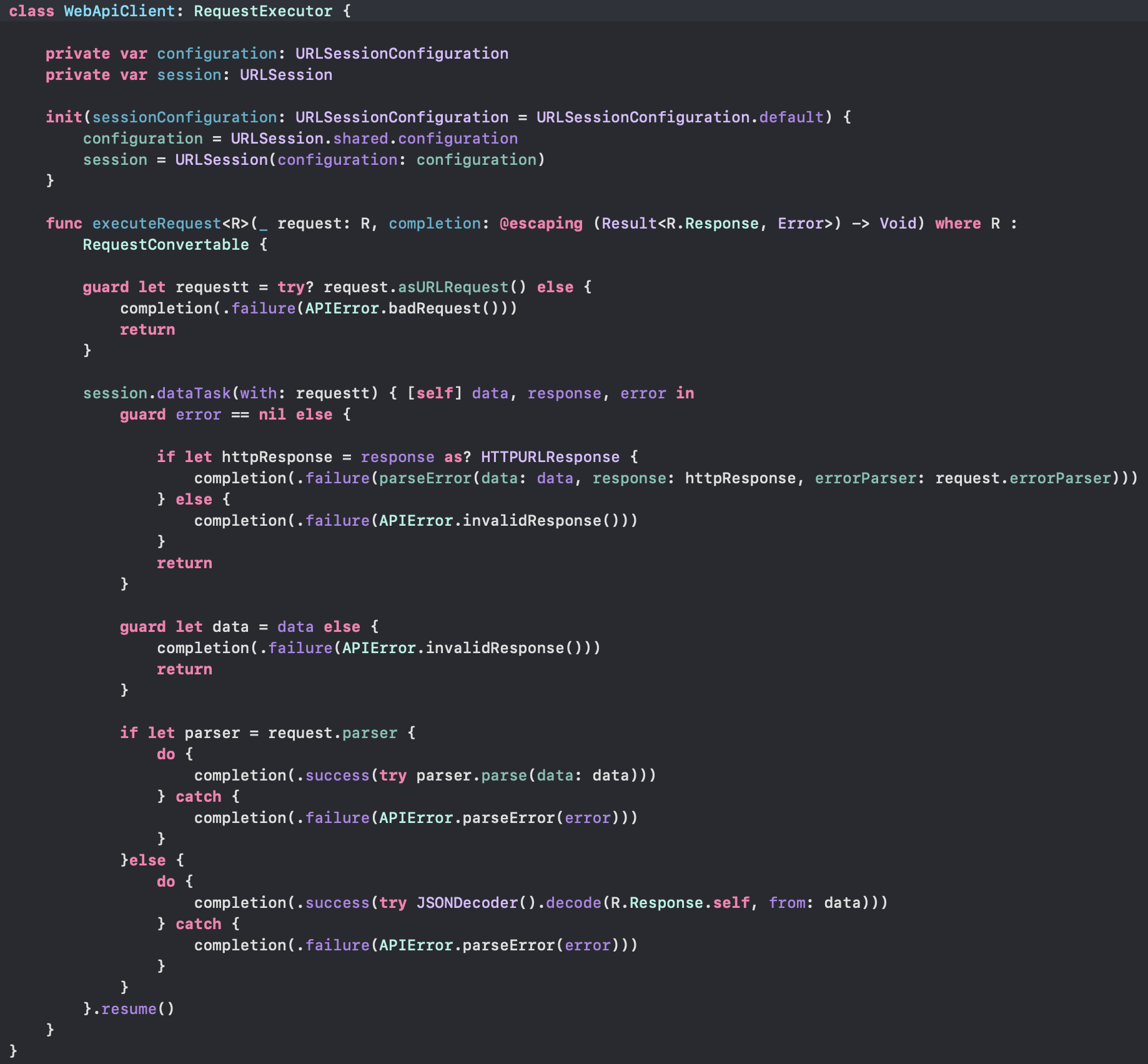

The code is self-explanatory as it just implements the RequestExecutor protocol.

The function executeRequest is where all the magic happens. It takes the request type, converts it to a URLRequest through RequestConvertible, and uses it with a newly created dataTask. Notice how conveniently the validation of the right parser and response type is kept with the Request type; the only job of the request executor is to make network calls.
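For readers without the screenshot at hand, a callback-based executor along these lines might look like the sketch below. It is an approximation under stated assumptions, not the project's code: the protocol shapes, the APIError cases, and the 2xx status check are illustrative choices.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLSession lives here on non-Apple platforms
#endif

protocol RequestConvertible { func asURLRequest() throws -> URLRequest }

protocol ResponseParserType {
    associatedtype Response
    func parse(data: Data) throws -> Response
}

protocol Request {
    associatedtype Response: Decodable
    associatedtype ResponseParser: ResponseParserType
        where ResponseParser.Response == Response
    var responseParser: ResponseParser { get }
}

protocol RequestExecutor {
    func executeRequest<R: Request & RequestConvertible>(
        request: R,
        completion: @escaping (Result<R.Response, Error>) -> Void
    )
}

// Illustrative error type; the demo project's own errors may differ.
enum APIError: Error { case invalidResponse, emptyData }

final class WebApiClient: RequestExecutor {
    private let session: URLSession
    init(session: URLSession = .shared) { self.session = session }

    func executeRequest<R: Request & RequestConvertible>(
        request: R,
        completion: @escaping (Result<R.Response, Error>) -> Void
    ) {
        // Convert the abstract request into a URLRequest, failing early if that throws.
        let urlRequest: URLRequest
        do {
            urlRequest = try request.asURLRequest()
        } catch {
            completion(.failure(error))
            return
        }
        // The request carries its own parser, so the executor never picks one.
        let parser = request.responseParser

        session.dataTask(with: urlRequest) { data, response, error in
            if let error = error {
                completion(.failure(error))
                return
            }
            guard let http = response as? HTTPURLResponse,
                  (200..<300).contains(http.statusCode) else {
                completion(.failure(APIError.invalidResponse))
                return
            }
            guard let data = data else {
                completion(.failure(APIError.emptyData))
                return
            }
            completion(Result { try parser.parse(data: data) })
        }.resume()
    }
}
```

A caller would pass a concrete request and switch over the Result in the completion closure, which runs on URLSession's delegate queue unless the caller hops to the main queue itself.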

And that is all a scalable network layer needs to work.

But wait, where is Combine?

Yes, it is time to start over with Combine.

We’ll start by refactoring the RequestExecutor protocol, as it is the only protocol in our API abstraction that uses callbacks (closures).

Link to Combine’s Network layer Protocols

This refactored RequestExecutor is all it takes to work with Combine.

The changes:

- We removed the callback parameter; instead, the function returns an AnyPublisher that is generic over the Request’s Response and Error.

- The refactoring also uses a generic where clause to constrain the R type to RequestConvertible, but that is just a syntactic change made to brush up on the where clause.

Note the use of AnyPublisher, which is common in Combine and has its own benefits. But why not use the specific publisher that URLSession provides for data tasks, URLSession.DataTaskPublisher (whose output is (data: Data, response: URLResponse) and whose failure type is URLError)? The reason for not using DataTaskPublisher, or any other specific publisher, is to avoid tightly coupling our executeRequest function to the implementation. We’ll talk more about AnyPublisher in a while.
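A hedged sketch of the refactored protocol follows, together with a stub that shows why AnyPublisher is convenient as the return type. The protocol shapes are reduced for brevity, and StubExecutor, Post, and PostRequest are hypothetical names introduced only for this example.

```swift
import Combine
import Foundation

protocol RequestConvertible { func asURLRequest() throws -> URLRequest }

// Reduced to the one associated type this sketch needs.
protocol Request { associatedtype Response: Decodable }

protocol RequestExecutor {
    // The callback is gone; the result arrives through the returned publisher.
    func executeRequest<R>(request: R) -> AnyPublisher<R.Response, Error>
        where R: Request & RequestConvertible
}

// Hypothetical stub: the two branches build different concrete publisher
// types, yet both fit the same AnyPublisher return type once erased.
struct StubExecutor: RequestExecutor {
    let cannedJSON: Data?

    func executeRequest<R>(request: R) -> AnyPublisher<R.Response, Error>
        where R: Request & RequestConvertible {
        guard let json = cannedJSON else {
            return Fail<R.Response, Error>(error: URLError(.notConnectedToInternet))
                .eraseToAnyPublisher()
        }
        return Just(json)
            .decode(type: R.Response.self, decoder: JSONDecoder())
            .eraseToAnyPublisher()
    }
}

struct Post: Decodable { let id: Int }

struct PostRequest: Request, RequestConvertible {
    typealias Response = Post
    func asURLRequest() throws -> URLRequest {
        URLRequest(url: URL(string: "https://example.com/posts/1")!) // placeholder URL
    }
}

var fetchedID: Int?
let cancellable = StubExecutor(cannedJSON: Data(#"{"id": 7}"#.utf8))
    .executeRequest(request: PostRequest())
    .sink(receiveCompletion: { _ in }, receiveValue: { fetchedID = $0.id })
print(fetchedID as Any) // Optional(7)
```

Had the protocol promised a DataTaskPublisher, neither branch of the stub could satisfy it, and every conforming type would be forced to go through URLSession.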

That is all for the refactoring of the protocols. The next step is to refactor the actual implementation of these abstractions.

Let’s first look at a simple use of URLSession.DataTaskPublisher for making web API requests, and then we’ll add it to our refactoring.

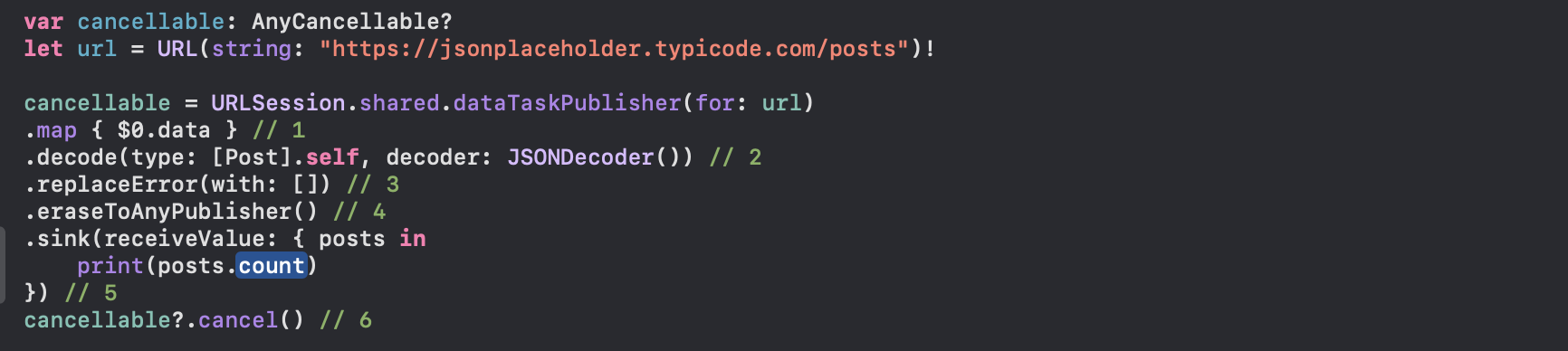

Data task with Combine:

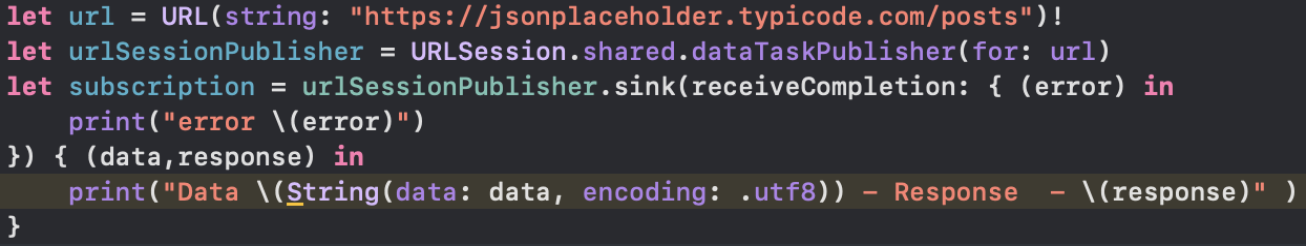

Data task in Combine

This pipeline defines the urlSessionPublisher and subscribes to it using the sink subscriber. That is the most basic form of a Combine pipeline for a data task.

This does the job of making the request over the network and fetching the data and URL response objects. But we are still a long way from using it in our implementation.
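In code, the basic pipeline described above looks roughly like this. The URL is a placeholder, and the printed messages are illustrative.

```swift
import Combine
import Foundation

// Placeholder endpoint; substitute a URL that returns data you care about.
let url = URL(string: "https://example.com/posts")!

// Publisher: wraps a data task. Nothing is sent until a subscriber attaches.
let urlSessionPublisher = URLSession.shared.dataTaskPublisher(for: url)

// Subscriber: `sink` starts the request and receives the result asynchronously.
let cancellable = urlSessionPublisher.sink(
    receiveCompletion: { completion in
        switch completion {
        case .finished:
            print("Request finished")
        case .failure(let error):
            // For a data task publisher, `error` is a URLError.
            print("Request failed: \(error)")
        }
    },
    receiveValue: { output in
        // `output` is the tuple (data: Data, response: URLResponse).
        print("Received \(output.data.count) bytes")
        print(output.response)
    }
)
```

The returned cancellable has to be kept alive for as long as the request should run; once it is deallocated or cancelled, the subscription is torn down. In a command-line program, the process also needs to stay alive (for example with a running run loop) for the closures to fire.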

Let’s evolve the current pipeline using some awesome operators.

This is much more evolved now. Let’s talk about these updates to our pipeline.

- We use the map operator to transform the output (data: Data, response: URLResponse) of urlSessionPublisher into just the data, ignoring the response.

- decode loads the data and attempts to parse it. decode itself fails with an error if decoding fails. If it succeeds, the object passed down the pipeline is the struct built from the JSON data.

- In case of any error we handle it here using replaceError, as we don’t want our pipeline (subscription) to finish with a failure. We could also use the catch operator instead of replaceError. Keep in mind, though, that even with replaceError or catch, an error still ends the original stream: the subscriber receives the replacement and the pipeline then finishes.

- We use eraseToAnyPublisher() to expose an instance of AnyPublisher to the downstream subscriber, rather than this publisher’s actual type. This form of type erasure preserves abstraction across API boundaries, such as different modules. When you expose your publishers as the AnyPublisher type, you can change the underlying implementation over time without affecting existing clients.

- Because we handled the error using replaceError in the third step, this closure is invoked with an array of Posts, or with an empty array in case of an error.

- This shows how we can cancel a subscription. (Comment it out when testing the code.)
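The six steps above can be sketched as follows, with numbered comments matching the order of the list. The Post model and the URL are assumptions for illustration.

```swift
import Combine
import Foundation

// Assumed model; the field names are illustrative.
struct Post: Decodable {
    let id: Int
    let title: String
}

// Placeholder endpoint expected to return a JSON array of posts.
let url = URL(string: "https://example.com/posts")!

let postsPublisher = URLSession.shared.dataTaskPublisher(for: url)
    // 1. Keep only the data, ignoring the URLResponse.
    .map { $0.data }
    // 2. Decode the JSON into [Post]; a decoding failure becomes an error.
    .decode(type: [Post].self, decoder: JSONDecoder())
    // 3. Swap any error for an empty array, so Failure becomes Never.
    .replaceError(with: [])
    // 4. Hide the nested operator types behind AnyPublisher<[Post], Never>.
    .eraseToAnyPublisher()

// 5. Receives the decoded posts, or [] if anything failed upstream.
let cancellable = postsPublisher.sink { posts in
    print("Received \(posts.count) posts")
}

// 6. Cancels the subscription. Comment this out to let the request complete.
cancellable.cancel()
```

Because replaceError turns the failure type into Never, sink can be called with only a value closure.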

This is much better. But what if we don’t want to replace the error with empty data? Let’s fix that.

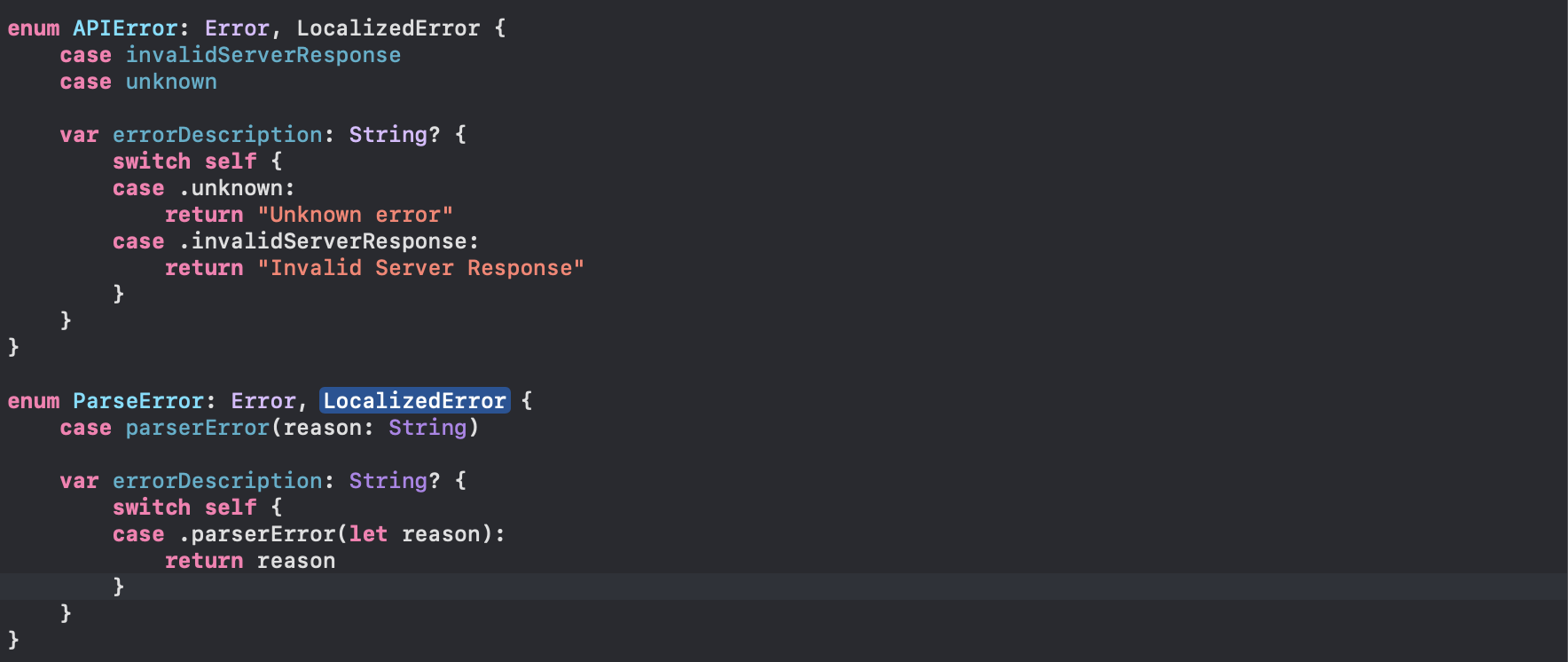

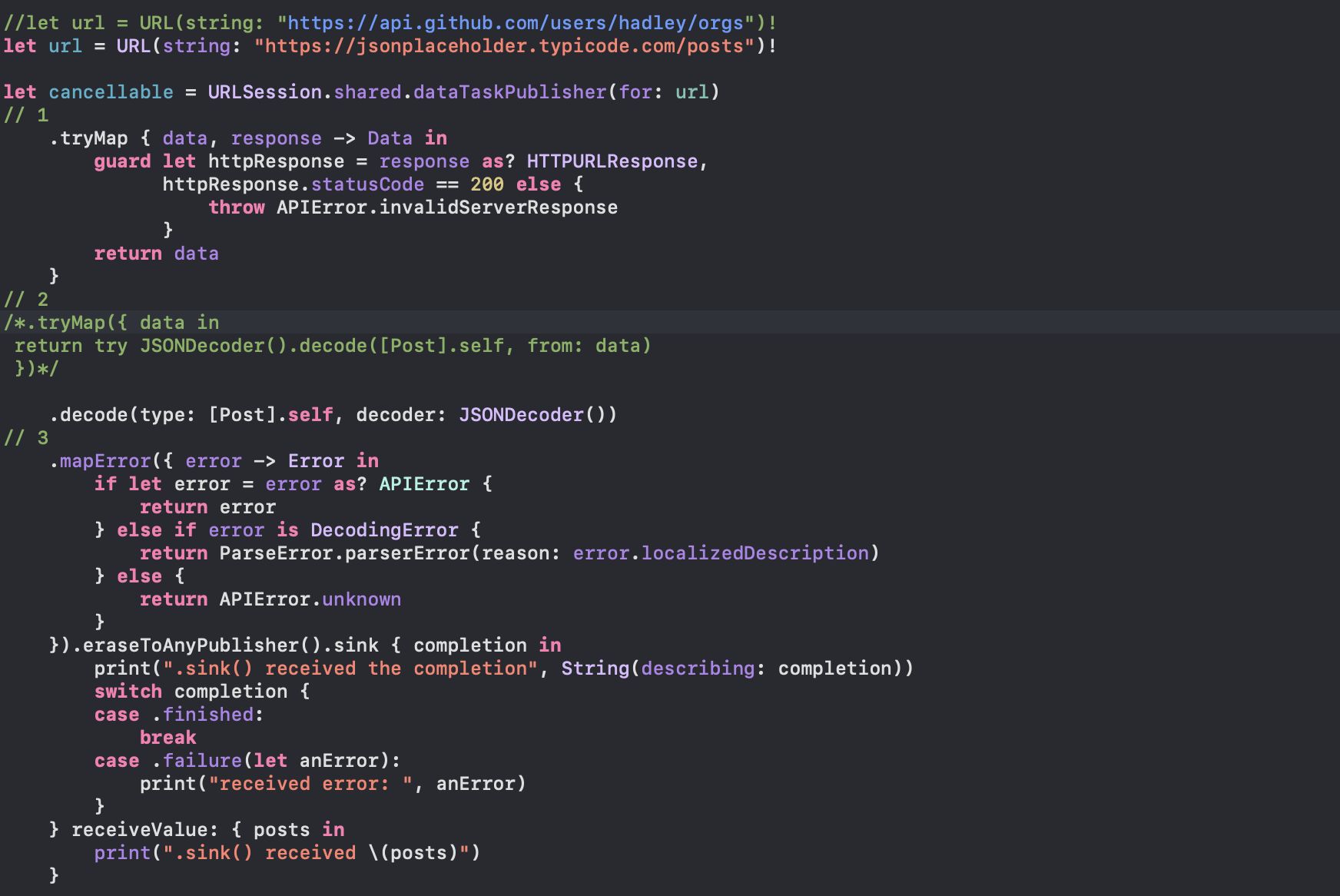

Raising errors and a stricter network request processing stream:

Here we are going to update the Combine network pipeline created above to raise errors and normalize them into errors that our application understands.

Combine has a family of try-prefixed operators, which accept an error-throwing closure and return a new publisher. The publisher terminates with a failure if an error is thrown inside the closure. Some of these operators are tryMap(_:), tryFilter(_:), and tryScan(_:_:).
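A small, network-free sketch shows the terminating behavior of a try-prefixed operator. The NegativeValue error type and the sample values are made up for this example.

```swift
import Combine

// Hypothetical error used only for this demonstration.
struct NegativeValue: Error {
    let value: Int
}

var values: [Int] = []
var failure: Error?

let cancellable = [4, 9, -1, 16].publisher
    .tryMap { number -> Int in
        // Throwing here terminates the publisher with a failure.
        guard number >= 0 else { throw NegativeValue(value: number) }
        return number * 2
    }
    .sink(
        receiveCompletion: { completion in
            if case .failure(let error) = completion { failure = error }
        },
        receiveValue: { values.append($0) }
    )

print(values) // [8, 18]
print(failure is NegativeValue) // true
```

The value 16 is never processed: once the closure throws on -1, the pipeline finishes with the failure.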

The use of tryMap instead of map, and of mapError blocks, are the main updates to our network request processing pipeline.

- With the tryMap operator, the response from dataTaskPublisher is inspected immediately, and we throw an error of our choosing if the response code is not in the expected range.

- We decode the response following the same pattern as above. There is also a commented-out part: we could instead use a tryMap closure with ordinary decoding and throw an error of our choice if decoding fails.

- With mapError we convert any raised error types into a common Failure type of APIError or ParseError.
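The stricter pipeline described in the list above might be sketched like this. The APIError cases, the validate and normalize helpers, the 2xx range, and the Post model are assumptions; the original code may fold parse failures into a separate ParseError type instead.

```swift
import Combine
import Foundation

struct Post: Decodable {
    let id: Int
    let title: String
}

// Illustrative error type; the case names are assumptions.
enum APIError: Error {
    case invalidResponse(statusCode: Int?)
    case network(URLError)
    case parsing(Error)
    case unknown(Error)
}

// Throws unless the response is an HTTP response with a 2xx status code.
func validate(_ output: URLSession.DataTaskPublisher.Output) throws -> Data {
    guard let http = output.response as? HTTPURLResponse else {
        throw APIError.invalidResponse(statusCode: nil)
    }
    guard (200..<300).contains(http.statusCode) else {
        throw APIError.invalidResponse(statusCode: http.statusCode)
    }
    return output.data
}

// Funnels every upstream error into the single APIError type.
func normalize(_ error: Error) -> APIError {
    switch error {
    case let apiError as APIError: return apiError
    case let urlError as URLError: return .network(urlError)
    case is DecodingError: return .parsing(error)
    default: return .unknown(error)
    }
}

func fetchPosts(from url: URL) -> AnyPublisher<[Post], APIError> {
    URLSession.shared.dataTaskPublisher(for: url)
        // Inspect the response first; a bad status code fails the pipeline.
        .tryMap { try validate($0) }
        // Decode as before; decoding failures also travel down as errors.
        .decode(type: [Post].self, decoder: JSONDecoder())
        // Collapse URLError, DecodingError, and our own errors into APIError.
        .mapError { normalize($0) }
        .eraseToAnyPublisher()
}
```

A subscriber to fetchPosts(from:) now receives either an array of posts or a single, well-defined APIError in its completion, rather than a silently substituted empty array.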

With the above changes to our pipeline, we achieved the expected error handling.

Wooo!

That was a lot on Combine’s network data processing pipeline.

With all the knowledge we gained in the last part, it’s time to get back to our network layer refactoring and apply it.

We’ll go back to our WebApiClient class and implement the refactored RequestExecutor protocol.

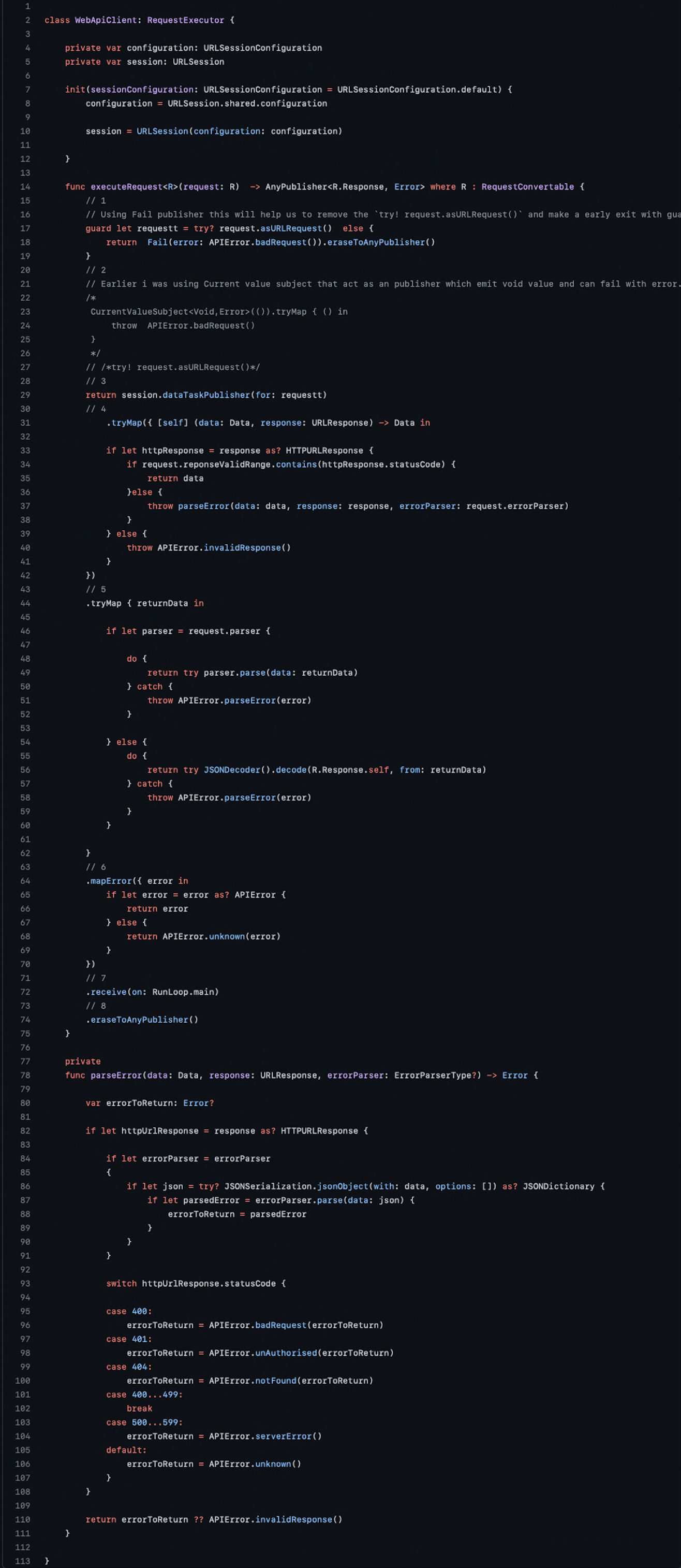

I have already explained the basic single-shot pipeline using DataTaskPublisher, tryMap, and mapError, so our refactored WebApiClient ends up as below.

Link to Combine’s RequestExecutor Implementation

The above code is just a combination of everything we learned about error handling and error mapping. But before I go on to explain the whole network layer pipeline, let me make good on my promise to explain the use of the AnyPublisher type over DataTaskPublisher in the refactored RequestExecutor protocol. Here it is!

- We use a Fail publisher, which immediately terminates with the specified error, and erase it to AnyPublisher. This covers the case where try? request.asURLRequest() fails to create the request. By using AnyPublisher we are able to return any type of publisher, even before we create our DataTaskPublisher instance.

- This just shows that we could also use a CurrentValueSubject publisher to emit an error.

- Once we have a valid request instance, we need a publisher that actually makes the request. We get one by creating the DataTaskPublisher instance.

- We could have used a map operator, but the mapping operation can throw an error, so we use tryMap instead. tryMap lets us check the response code and throw an error if anything goes wrong.

- Here again, tryMap is used so that we can reuse our old parsers, which may also throw errors. This gives us the flexibility to use our custom parser instead of the decode operator we saw earlier.

- We may encounter many types of errors. To turn them into one type that our calling code can easily handle, we use mapError as explained above.

- We use a scheduler to switch back to the main queue.

- Finally, we erase the publisher to AnyPublisher.
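Putting the steps above together, a Combine-based WebApiClient could look roughly like the sketch below. It is an approximation rather than the demo project's code: the protocol shapes, the APIError cases, the use of receive(on: DispatchQueue.main), and the Post, JSONParser, and PostsRequest types are assumptions, and the CurrentValueSubject variant is left out.

```swift
import Combine
import Foundation

protocol RequestConvertible { func asURLRequest() throws -> URLRequest }

protocol ResponseParserType {
    associatedtype Response
    func parse(data: Data) throws -> Response
}

protocol Request {
    associatedtype Response: Decodable
    associatedtype ResponseParser: ResponseParserType
        where ResponseParser.Response == Response
    var responseParser: ResponseParser { get }
}

protocol RequestExecutor {
    func executeRequest<R>(request: R) -> AnyPublisher<R.Response, Error>
        where R: Request & RequestConvertible
}

// Illustrative error type; the case names are assumptions.
enum APIError: Error {
    case invalidRequest
    case invalidResponse
    case network(URLError)
    case parsing(Error)
}

final class WebApiClient: RequestExecutor {
    private let session: URLSession
    init(session: URLSession = .shared) { self.session = session }

    func executeRequest<R>(request: R) -> AnyPublisher<R.Response, Error>
        where R: Request & RequestConvertible {
        // If no URLRequest can be built, return a publisher that fails at once.
        guard let urlRequest = try? request.asURLRequest() else {
            return Fail<R.Response, Error>(error: APIError.invalidRequest)
                .eraseToAnyPublisher()
        }
        let parser = request.responseParser

        // The publisher that actually performs the network call.
        return session.dataTaskPublisher(for: urlRequest)
            // Check the status code; throwing fails the pipeline.
            .tryMap { output -> Data in
                guard let http = output.response as? HTTPURLResponse,
                      (200..<300).contains(http.statusCode) else {
                    throw APIError.invalidResponse
                }
                return output.data
            }
            // Reuse the request's own parser instead of the decode operator.
            .tryMap { data -> R.Response in
                do { return try parser.parse(data: data) }
                catch { throw APIError.parsing(error) }
            }
            // Normalize transport errors; errors we threw pass through as-is.
            .mapError { error -> Error in
                if let urlError = error as? URLError { return APIError.network(urlError) }
                return error
            }
            // Deliver values and completion on the main queue.
            .receive(on: DispatchQueue.main)
            .eraseToAnyPublisher()
    }
}

// Hypothetical request used to show the call site.
struct Post: Decodable { let id: Int }

struct JSONParser<T: Decodable>: ResponseParserType {
    func parse(data: Data) throws -> T { try JSONDecoder().decode(T.self, from: data) }
}

struct PostsRequest: Request, RequestConvertible {
    typealias Response = [Post]
    let responseParser = JSONParser<[Post]>()
    func asURLRequest() throws -> URLRequest {
        URLRequest(url: URL(string: "https://example.com/posts")!) // placeholder URL
    }
}

let cancellable = WebApiClient()
    .executeRequest(request: PostsRequest())
    .sink(
        receiveCompletion: { print("Completed: \($0)") },
        receiveValue: { print("Received \($0.count) posts") }
    )
```

The early return with Fail only type-checks because the function promises an AnyPublisher; with DataTaskPublisher as the return type there would be nothing valid to return before the request exists.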

This brings us to the end of the article but feel free to check out the demo project to learn more about how we end up using this network layer.

Don’t forget to add your API key in the WebApiConstants class. Get one from here.

Also check out the use of generics, protocols, and dependency injection.