Simplifying Data Engineering: An Introduction to DBT

Introduction

Data is a key asset in today’s business environment, holding great potential for making wise decisions and preserving a competitive edge. However, the road to efficient data management is frequently difficult and time-consuming, especially when dealing with big and varied datasets.

In this first blog post of the DBT series, we will introduce dbt, including its features, benefits, and key components, and discuss how to get started with the tool. Finally, we will suggest some next steps for getting started with the tool.

What is DBT?

Data build tools (dbt) are open-source command-line tools for managing data transformation workflows. Dbt provides a powerful framework for building and managing SQL-based transformations that turn raw data into meaningful insights.

Dbt allows you to transform data by defining SQL models that specify how data should be transformed. Models can perform a variety of transformations, such as filtering, aggregating, joining, and more.

It enables data teams to build, test, and deploy data pipelines in a more automated and scalable way. It is designed to work with SQL-based data warehouses, including Snowflake, Redshift, and BigQuery.

It also includes functionality for testing and documenting such transformations. You can define tests using SQL queries to ensure your transformations work correctly. DBT also provides functionality for documenting workflows, making it easier to understand how data is transformed.

How does DBT work?

DBT defines SQL-based models representing the transformations you want to perform on your data. Each model specifies how raw data from one or more sources should be transformed to produce a final output.

DBT uses a modular approach to building DBT models, meaning that each data transformation is defined in a separate SQL file or “model.” Each model is then compiled into a single SQL file representing a table.

DBT can be run locally on your computer, or it can be run on a cloud-based platform. When you run dbt, it reads your defined models and sources and generates SQL code that performs the defined transformations. Dbt also includes functionality for deploying your transformations to production. You can also use DBT to create automated workflows that run your transformations and tests on a schedule. You can use dbt to create incremental or full-refresh models that update your transformed data on a regular basis. Let’s have a quick look at how both of these approaches work in DBT.

- Full-refresh models – DBT introduces Full-Refresh Models as a method to rebuild the target dataset entirely from the source every time it runs. This approach ensures data integrity, making it suitable for data sources undergoing frequent structural changes.

- Incremental models – An Incremental Model in DBT updates only the changed or new records in the target dataset since the last run. This approach minimizes redundant work, making it suitable for scenarios with large datasets or frequently updated data sources, where efficiency is crucial.

Benefits of using DBT

DBT offers several advantages, making it a valuable tool for managing and transforming data. These advantages include:

- Standardization: Dbt provides a consistent way to structure and test data transformations, which helps create a consistent and reliable data management system. This makes maintaining and scaling your dbt project easier as your data grows and changes over time.

- Version control: Dbt allows a project to be version-controlled using Git, just like your code. This means that changes to your data management system can be tracked and rolled back if necessary, providing greater control and visibility.

- Collaboration: Dbt’s project-based approach enables multiple team members to collaborate on the same data management system. Each team member can work on their own branch of the project, and changes can be merged and tested before being deployed to production.

- Testing: Dbt has an in-built testing framework that allows you to test your data transformations and ensure that they are working correctly. This helps to identify errors early and reduces any risk of data quality issues.

- Automation: Dbt can be automated using tools like Airflow, allowing you to schedule and orchestrate your data management system.

- Scalability: Dbt is designed to be scalable, making it easy to handle large volumes of data and complex transformations. It can be run on distributed computing platforms like Snowflake and BigQuery, allowing you to process data quickly and efficiently.

How to Get Started with DBT

To get started with DBT, you can follow these steps:

- Install dbt: The first step is to install dbt on your machine. This can be done using pip, the Python package installer. Once dbt is installed, you can begin creating your first project.

pip install dbt

- Initialize a DBT project: To create a new dbt project, navigate to a new directory in your terminal and run dbt init. This will create a new directory structure with the necessary files to get started with DBT.

dbt init my_dbt_project

- Configure your DBT project: Once your project is created, you’ll need to configure it to connect to your data warehouse. This can be done by editing the profiles.yml file, which contains information about your data warehouse and any other connections your project may require. Here is an example of how a profiles.yml file looks like:

dbt_transform_profile: outputs: dev: account: *******.us-east-2.aws database: TRANSFORM_DB password: ********** role: TRANSFORM_ROLE schema: TRANSFORM_SCHEMA threads: 1 type: snowflake user: data_engineer_1 warehouse: DBT_TRANSFORM_WH target: dev

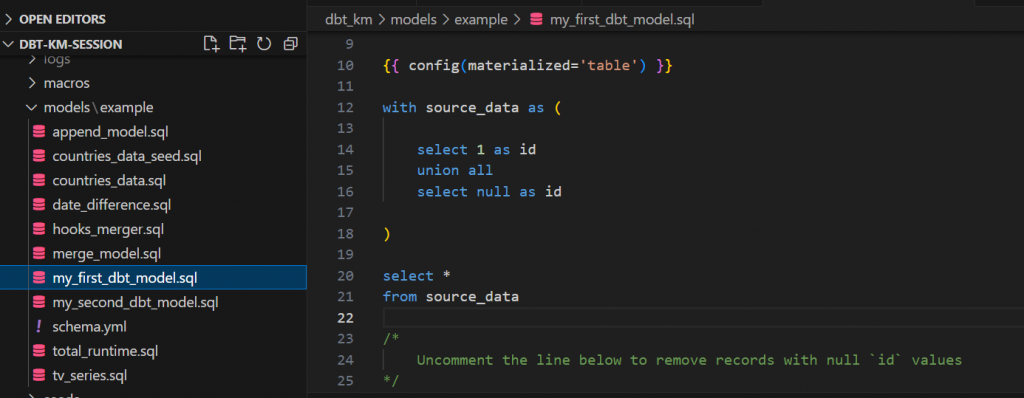

- Define your data models: With your project configured, you can begin defining your data models using SQL. DBT allows you to write SQL in .sql files that define your data models and any associated tests. In the example below, you can see we have added a few SQL models and a sample model to the model’s directory of the project.

models directory and sample model

- Run DBT: Once you’ve defined your data models, you can run dbt to execute them against your data warehouse. This can be done using the dbt run command, which will execute the SQL in your .sql files and create the necessary tables and views in your data warehouse.

dbt compile # Compiles the project dbt run # Runs the data transformations

- Test your models: After running dbt, you can use the dbt test command. This will run any tests defined in your .sql files and ensure your data models work correctly.

dbt test # Executes tests Here is an example of tests in dbt:

version: 2 models: - name: merge_model description: "Implementing DBT merge strategy" columns: - name: ID tests: - not_null - unique - name: first_air_date description: "Provides the first date of first season" tests: - not_null - name: IN_PRODUCTION tests: - not_null - accepted_values: values: ['TRUE','FALSE']

- Documentation Generation: dbt generates documentation for your data models, making it easy for your team to understand the purpose and usage of each model. Documentation is accessible through a web interface or as static HTML files.

dbt docs generate

- Deploy your models: Finally, you can deploy your data models to your data warehouse, this will create the necessary tables and views in your data warehouse and ensure that your data management system is up-to-date.

Conclusion and Next Steps

This blog post introduces DBT, including its features, benefits, and key components. We have also discussed starting with DBT and working around the models and tests.

If you are new to DBT, we recommend starting with the official DBT tutorial, which provides a step-by-step guide to building a simple data pipeline[ https://docs.getdbt.com/ ]. Once you are comfortable with the basics, you can explore more advanced features of dbt, such as macros and custom packages.

Overall, DBT is a powerful tool for building and managing data pipelines in a more automated and efficient way. In our next blog post, we will dive deeper into this advanced DBT feature, explore different strategies and best practices, and see examples of real-world use cases and datasets.

Happy dbt-ing, and see you in the next blog post on Incremental Models in DBT!!

Co-authored by: Suyog Nagaokar and Dipti Dwivedi