How to access your AWS Secrets Manager secrets in an EKS cluster

This post shows how to use AWS Secrets Manager secrets inside pods running on Amazon EKS.

Almost every application handles sensitive data such as usernames and passwords. AWS provides two services for storing and managing this kind of application configuration data:

- Secrets Manager

- Parameter Store

Both services support encryption, CloudFormation, and versioning, but Parameter Store is not a good fit when secrets are managed centrally from another AWS account. If you need encryption, key rotation, and cross-account access to secrets, go with AWS Secrets Manager.

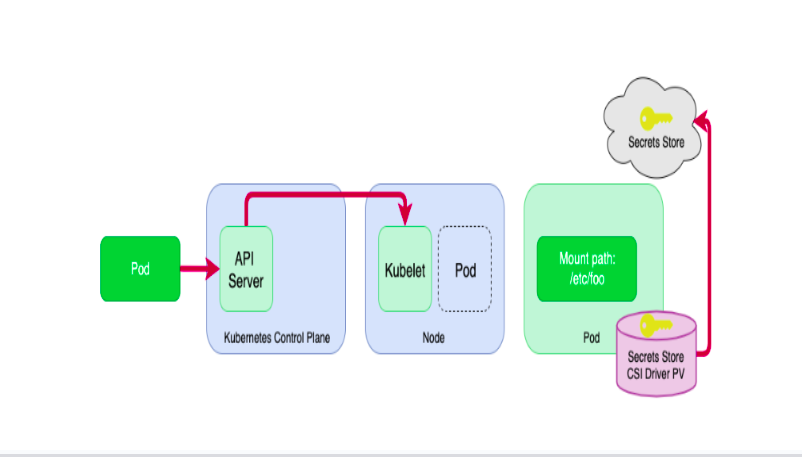

Flow Diagram

- The user sends a pod creation request to the API server.

- The scheduler in the control plane assigns the pod to a node.

- The kubelet running on that node receives the request to create the pod. It reads the pod manifest, finds a volume that must be mounted through the CSI driver, and calls the Secrets Store CSI driver to mount that volume in the pod.

- The CSI driver then invokes ASCP (the AWS Secrets and Configuration Provider), which calls the Secrets Manager API to fetch the secrets. ASCP reads the SecretProviderClass manifest to find out which secrets to load and creates the secret files in the mounted volume.

Prerequisites

- Existing EKS Cluster

- A secret stored in AWS Secrets Manager

- AWS CLI, eksctl, helm, and kubectl installed
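Before starting, it can help to confirm that the command-line tools are actually on your PATH. The snippet below is a minimal sketch of such a check; the helper name `missing_tools` is made up for this example, and it only tests that each command exists, not which version is installed.

```shell
# Print the name of every command in the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Tools listed in the prerequisites above.
missing=$(missing_tools aws eksctl helm kubectl)
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
else
  echo "All prerequisite tools found."
fi
```

If anything is reported as missing, install it before moving on to the setup steps.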

Setup Guide

- Install CSI Driver

    helm repo add secrets-store-csi-driver \
      https://kubernetes-sigs.github.io/secrets-store-csi-driver/charts
    helm install -n kube-system csi-secrets-store \
      --set syncSecret.enabled=true \
      --set enableSecretRotation=true \
      secrets-store-csi-driver/secrets-store-csi-driver

- Install ASCP Plugin

    kubectl apply -f https://raw.githubusercontent.com/aws/secrets-store-csi-driver-provider-aws/main/deployment/aws-provider-installer.yaml

- Check the DaemonSets for ASCP and the CSI driver in the kube-system namespace

Note: The two steps above each deploy a DaemonSet, one for ASCP and one for the CSI driver.

To check the ASCP DaemonSet:

    kubectl get daemonsets -n kube-system -l app=csi-secrets-store-provider-aws

To check the CSI driver DaemonSet:

    kubectl get daemonsets -n kube-system -l app.kubernetes.io/instance=csi-secrets-store
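Reading the READY column by eye works, but the same check can be scripted. The sketch below compares the `desiredNumberScheduled` and `numberReady` status fields of every DaemonSet matching a label. The function name `daemonset_ready` is an assumption made for this example, and it is only defined here, not run, since it needs access to a cluster.

```shell
# Succeed only if DaemonSets matching the label exist in kube-system and
# each one reports as many ready pods as it wants scheduled.
#   $1: label selector
daemonset_ready() {
  counts=$(kubectl get daemonsets -n kube-system -l "$1" \
    -o jsonpath='{range .items[*]}{.status.desiredNumberScheduled} {.status.numberReady}{"\n"}{end}')
  [ -n "$counts" ] || return 1            # nothing matched the label
  while read -r desired ready; do
    [ "$desired" = "$ready" ] || return 1 # some pods are not ready yet
  done <<EOF
$counts
EOF
  return 0
}

# Usage against a real cluster (labels taken from the commands above):
#   daemonset_ready app=csi-secrets-store-provider-aws && echo "ASCP ready"
#   daemonset_ready app.kubernetes.io/instance=csi-secrets-store && echo "CSI driver ready"
```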

- Create a secret in AWS Secrets Manager and note down its ARN

    aws --region "us-east-1" secretsmanager \
      create-secret --name test-ritika \
      --secret-string '{"username":"foo", "url":"hello.com"}'
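If you did not copy the ARN from the command output, it can be looked up again later. The following is a small sketch that wraps `aws secretsmanager describe-secret` and prints only the ARN; the function name `get_secret_arn` and the `SECRET_ARN` variable are assumptions for this example, and the usage lines are commented out because they need AWS credentials.

```shell
# Print the ARN of an existing secret.
#   $1: secret name, $2: AWS region
get_secret_arn() {
  aws --region "$2" secretsmanager describe-secret \
    --secret-id "$1" --query ARN --output text
}

# Usage (needs AWS credentials, so left commented out here):
#   SECRET_ARN=$(get_secret_arn test-ritika us-east-1)
#   echo "$SECRET_ARN"
```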

- Create an IAM policy

This policy will be attached to the IAM role behind the Kubernetes service account so that pods can read the secret. Replace SECRET_ARN below with the ARN of the secret we have just created.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "",
          "Effect": "Allow",
          "Action": [
            "secretsmanager:GetSecretValue",
            "secretsmanager:DescribeSecret"
          ],
          "Resource": "SECRET_ARN"
        }
      ]
    }

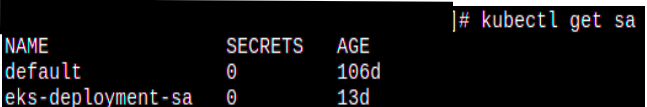
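Instead of editing the ARN into the JSON by hand, the policy file can be generated from a variable. This is a minimal sketch under a few assumptions: the function name `write_secret_policy`, the file name `policy.json`, and the policy name `secret-read-policy` are all made up for the example, and the optional `Sid` field is left out.

```shell
# Write an IAM policy document that allows reading one secret.
#   $1: secret ARN, $2: output file
write_secret_policy() {
  cat > "$2" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "secretsmanager:GetSecretValue",
        "secretsmanager:DescribeSecret"
      ],
      "Resource": "$1"
    }
  ]
}
EOF
}

# Usage (needs AWS credentials, so left commented out here):
#   write_secret_policy "$SECRET_ARN" policy.json
#   IAM_POLICY_ARN_SECRET=$(aws iam create-policy \
#     --policy-name secret-read-policy \
#     --policy-document file://policy.json \
#     --query Policy.Arn --output text)
```

Capturing the policy ARN in `IAM_POLICY_ARN_SECRET` this way lets the next step reuse it without copying it manually.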

- Create a Service Account and attach the above policy

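This service account gives your pods access to Secrets Manager through the policy created above. Choose any name for the service account and set IAM_POLICY_ARN_SECRET to the ARN of your policy.

    eksctl create iamserviceaccount \
      --region="$AWS_REGION" --name "$ServiceAccountName" \
      --cluster "$EKS_CLUSTERNAME" \
      --attach-policy-arn "$IAM_POLICY_ARN_SECRET" --approve \
      --override-existing-serviceaccounts

OR

If you already have an IAM role that has this policy attached and trusts the cluster's OIDC provider, create the service account yourself and annotate it with that role's ARN. The annotation expects a role ARN, not a policy ARN. IAM_ROLE_ARN below is a placeholder for it, and kubectl does not expand $ variables, so substitute the real values before applying.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: $ServiceAccountName
      annotations:
        eks.amazonaws.com/role-arn: $IAM_ROLE_ARN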

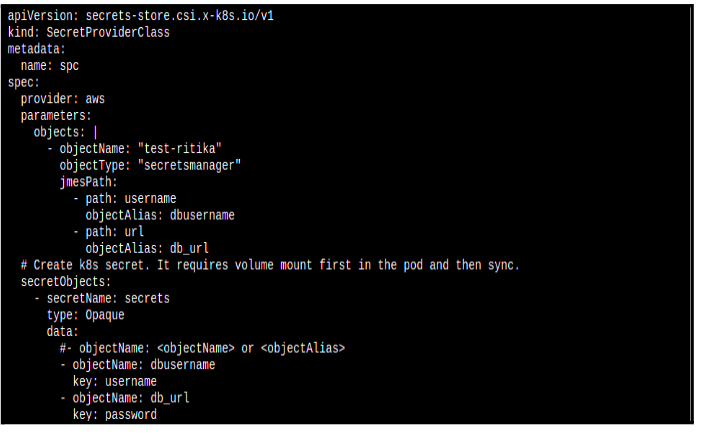
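Whichever way the service account is created, it should end up with an `eks.amazonaws.com/role-arn` annotation that holds an IAM role ARN (eksctl creates the role and writes the annotation for you). The sketch below reads the annotation and checks its shape. Both function names are assumptions for this example, and the pattern only covers the standard `aws` partition.

```shell
# Print the IAM role ARN annotated on a service account.
#   $1: service account name, $2: namespace
sa_role_arn() {
  kubectl get serviceaccount "$1" -n "$2" \
    -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}'
}

# Succeed only if the value looks like an IAM role ARN.
# Assumes the standard "aws" partition.
is_role_arn() {
  case "$1" in
    arn:aws:iam::*:role/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage against a real cluster:
#   is_role_arn "$(sa_role_arn "$ServiceAccountName" default)" \
#     || echo "service account has no role ARN annotation"
```

A policy ARN (`arn:aws:iam::...:policy/...`) fails this check, which is a quick way to catch the two being mixed up.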

- Create SecretProviderClass

ASCP uses the SecretProviderClass manifest below to decide which secrets to load, and then creates the secret files in the mounted volume. The SecretProviderClass is where we describe how the secrets are retrieved from AWS Secrets Manager or Parameter Store.

Note: The Secret Provider Class must be in the same namespace as the pod referencing it. You can pass the namespace under the metadata block.

SecretProviderClass.yaml:

[Note: YAML is indentation-sensitive, so follow the indentation shown in the image.]

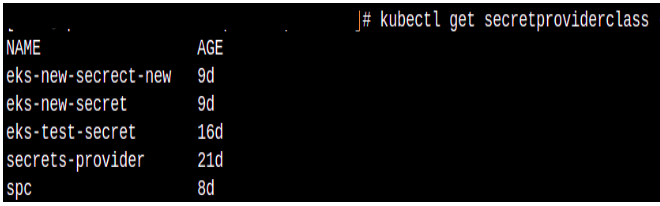

Run the command below to create the SecretProviderClass.

    kubectl apply -f SecretProviderClass.yaml

The output indicates that the resource (spc) was created successfully.

The manifest in text form:

    apiVersion: secrets-store.csi.x-k8s.io/v1
    kind: SecretProviderClass
    metadata:
      name: spc
    spec:
      provider: aws
      parameters:
        objects: |
          - objectName: "test-ritika"
            objectType: "secretsmanager"
            jmesPath:
              - path: username
                objectAlias: dbusername
              - path: url
                objectAlias: db_url
      # Create k8s secret. It requires volume mount first in the pod and then sync.
      secretObjects:
        - secretName: secrets
          type: Opaque
          data:
            #- objectName: <objectName> or <objectAlias>
            - objectName: dbusername
              key: username
            - objectName: db_url
              key: password

- spc: name of the SecretProviderClass

- aws: name of the cloud provider

- test-ritika: name of the secret in AWS Secrets Manager

- secretsmanager: object type, i.e. the AWS service that stores the secret

- username, url: keys inside the secret that you want to expose to the pod as environment variables

- dbusername, db_url: aliases for those keys; the rest of the manifest refers to the values by these names

- secrets: name of the Kubernetes Secret, which the CSI driver creates automatically once deployment.yaml is applied and a pod mounts the volume

- Under the data block: objectName is the alias (dbusername, db_url) and key is the key name inside the Kubernetes Secret (username, password). Note that the key named password holds the url value here.

- Create Deployment manifest

deployment.yaml:

    kind: Service
    apiVersion: v1
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      selector:
        app: nginx
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          serviceAccountName: $ServiceAccountName
          volumes:
            - name: secrets-store-inline
              csi:
                driver: secrets-store.csi.k8s.io
                readOnly: true
                volumeAttributes:
                  secretProviderClass: "spc"
          containers:
            - name: nginx-deployment
              image: nginx
              ports:
                - containerPort: 80
              volumeMounts:
                - name: secrets-store-inline
                  mountPath: "/mnt/secrets-store"
                  readOnly: true
              env:
                - name: rds_user
                  valueFrom:
                    secretKeyRef:
                      name: secrets
                      key: username
                - name: rds_url
                  valueFrom:
                    secretKeyRef:
                      name: secrets
                      key: password

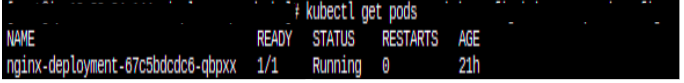

Create the Deployment and check that its pod is running:

    kubectl apply -f deployment.yaml
    kubectl get pods

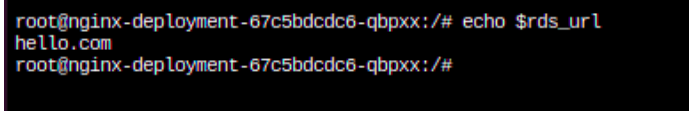
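The pod name changes on every rollout, so it is convenient to look it up by label rather than copy it from the `kubectl get pods` output. A minimal sketch, assuming the `app=nginx` label from the manifest; the function name `first_pod_name` and the `POD` variable are made up for this example.

```shell
# Print the name of the first pod matching a label selector.
#   $1: label selector
first_pod_name() {
  kubectl get pods -l "$1" -o jsonpath='{.items[0].metadata.name}'
}

# Usage against a real cluster:
#   kubectl rollout status deployment/nginx-deployment --timeout=120s
#   POD=$(first_pod_name app=nginx)
#   kubectl exec "$POD" -- ls /mnt/secrets-store
```

Waiting for `kubectl rollout status` first avoids picking up a pod that is still starting.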

- After applying the above manifest, we should be able to read the secret's content directly from the environment variables rds_user and rds_url:

    kubectl exec -it <NAME OF THE POD> -- env | grep rds_user
    kubectl exec -it <NAME OF THE POD> -- env | grep rds_url
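Besides the environment variables, the secret values are also available as files under the mount path. The sketch below lists any expected file that is missing from a directory. It assumes the `/mnt/secrets-store` mount path and the `dbusername` and `db_url` aliases from the manifests above; the function name `missing_secret_files` is made up for this example, and it would have to be pasted into a shell inside the container to check the real mount.

```shell
# Print the name of every expected secret file missing from the mount.
#   $1: mount directory, remaining args: expected file names
missing_secret_files() {
  dir=$1
  shift
  for name in "$@"; do
    [ -f "$dir/$name" ] || printf '%s\n' "$name"
  done
}

# Inside the container this would be called as, for example:
#   missing_secret_files /mnt/secrets-store dbusername db_url
```

An empty result means every alias was mounted. A missing file usually points at a typo in the SecretProviderClass or at a service account without permission to read the secret.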