Bringing Cloud-Native Power On-Prem: Deploying the Mirantis Ecosystem in Samsung’s Highly Isolated Environment

Introduction

In today’s enterprise IT world, container orchestration often feels like magic. It takes a bunch of servers and makes them behave like a single, well-oiled machine. But what happens when you don’t have the cloud at all?

That was exactly the challenge we faced: building a production-grade Kubernetes platform inside Samsung’s fully air-gapped, on-premises environment.

As a DevOps engineer, I knew this wasn’t just about spinning up infrastructure—it was about proving that our cloud-native philosophy could still thrive without internet access. We wanted a setup that was reliable, secure, and scalable, entirely on-premises. That’s when the Mirantis ecosystem came into play. With Mirantis Kubernetes Engine (MKE), Mirantis Secure Registry (MSR), Mirantis Container Runtime (MCR), and MinIO for storage, we stitched together a complete, enterprise-ready solution.

Why Mirantis?

We didn’t choose Mirantis on a whim. In a world with no AWS, no GCP, and no Azure, we needed a stack that could stand tall on its own. Something that gave us:

• Fully integrated Kubernetes orchestration

• Reliable and controlled image storage

• A production-grade runtime

And most importantly, something that didn’t need to “phone home” to the cloud.

MKE, MSR, and MCR met all of these needs, and they did so without any connection to the outside world.

With MKE, you get production-grade Kubernetes orchestration with high availability and security built in.

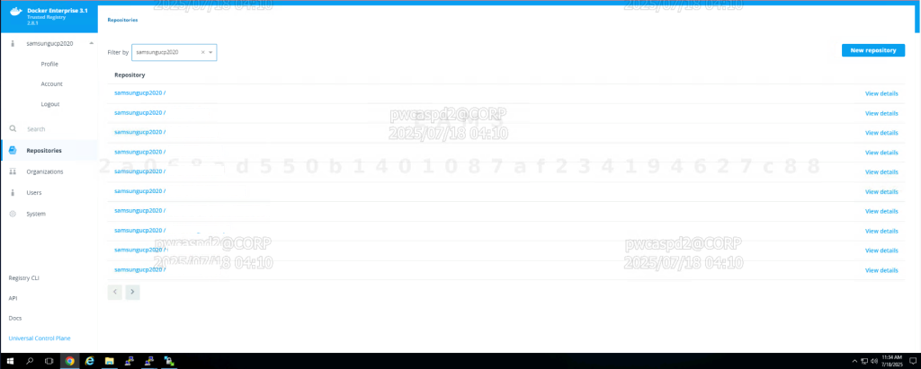

MSR is a reliable and scalable registry that integrates closely with MKE and gives us full control over image management.

MCR is a secured variant of the Docker Engine, providing the runtime compatibility and performance we required.

To round out the stack, we added MinIO: high-performance, S3-compatible object storage running locally for logs, backups, and app-level assets.

Preparing in an Isolated System

Here’s where things got tricky. In a cloud-connected world, deploying Kubernetes is as simple as a few commands. In an air-gapped setup? Not so much.

We had to rethink our entire approach. On an internet-connected machine, we downloaded all the required packages—MKE, MSR, MCR, supporting files, container images, and tools. Once we had everything, we bundled it neatly, validated it, and made sure every single dependency was in place.
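The validation step can be sketched as a checksum manifest: record a SHA-256 digest for every file on the connected machine, then recompute the digests after the transfer. This is a minimal illustration rather than the exact tooling we used; the function names and the manifest layout are assumptions made for the example.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large image tarballs need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(bundle_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to the bundle) to its SHA-256 digest."""
    return {
        path.relative_to(bundle_dir).as_posix(): sha256_of(path)
        for path in sorted(bundle_dir.rglob("*"))
        if path.is_file()
    }


def verify_bundle(bundle_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return a sorted list of problems: missing, altered, or unexpected files."""
    actual = build_manifest(bundle_dir)
    problems = []
    for name, expected in manifest.items():
        if name not in actual:
            problems.append(f"missing: {name}")
        elif actual[name] != expected:
            problems.append(f"checksum mismatch: {name}")
    for name in actual.keys() - manifest.keys():
        problems.append(f"unexpected: {name}")
    return sorted(problems)
```

The manifest is built once on the connected side and carried separately from the bundle directory. Inside the isolated network, an empty list from `verify_bundle` means every file arrived intact.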

Then came the fun part—moving it into the isolated network. With the Samsung infra team, we sometimes used secure USB drives, and other times a temporarily authorized internal portal. It wasn’t glamorous, but it worked. And the best part? Once the files were inside, we had complete control.

We followed a well-defined, strict installation order:

1. Install MCR as the container runtime.

2. Deploy MKE to orchestrate the cluster and establish the control plane.

3. Use MKE’s manager nodes to install MSR for centralised image management.

4. Deploy MinIO as distributed object storage for the Kubernetes cluster.
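The installation order above can also be written down as a small dependency graph, which makes it easy to check that no step runs before its prerequisites. This is only a sketch: the MinIO-after-MSR edge is an assumption chosen to mirror the listed order, and the helper names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each component maps to the components that must already be installed.
# The MinIO -> MSR edge is an assumption made to mirror the order listed above.
DEPENDS_ON = {
    "MCR": set(),
    "MKE": {"MCR"},
    "MSR": {"MKE"},
    "MinIO": {"MSR"},
}


def install_order(depends_on: dict[str, set[str]]) -> list[str]:
    """Return an order in which every component follows its prerequisites."""
    return list(TopologicalSorter(depends_on).static_order())


def next_allowed(installed: set[str], depends_on: dict[str, set[str]]) -> list[str]:
    """List components not yet installed whose prerequisites are all present."""
    return sorted(
        name
        for name, needs in depends_on.items()
        if name not in installed and needs <= installed
    )


print(install_order(DEPENDS_ON))
print(next_allowed({"MCR", "MKE"}, DEPENDS_ON))
```

Because the graph is a simple chain, there is only one valid order. `TopologicalSorter` raises `CycleError` if someone later adds a circular dependency, which is a useful guard for a checklist that grows over time.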

How We Set Things Up

We kept the order as a simple checklist, so that any setup error could be traced back to a specific step:

MCR went in first as our container runtime.

MKE came next, covering cluster management and the control plane.

We used MKE’s manager nodes to install MSR, which gave us one place to manage all of our container images.

MinIO went in last as our object storage for logs, backups, and app data.

This step-by-step approach gave us confidence that the setup was stable and could run without internet access.

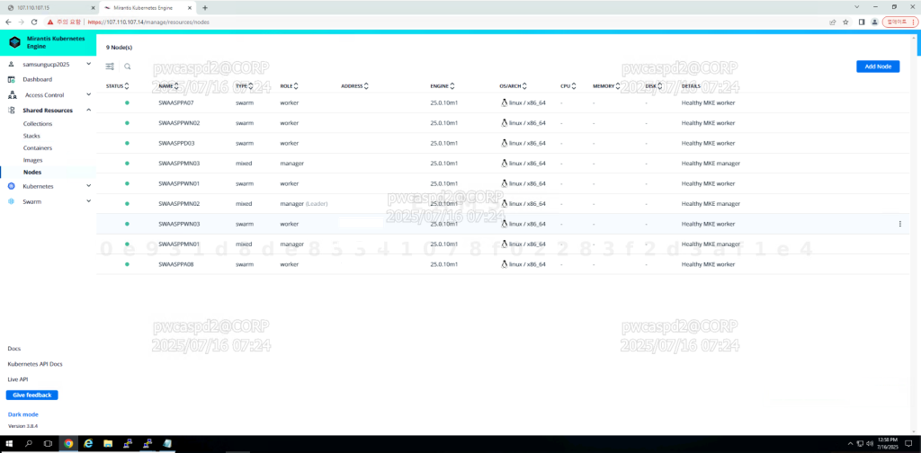

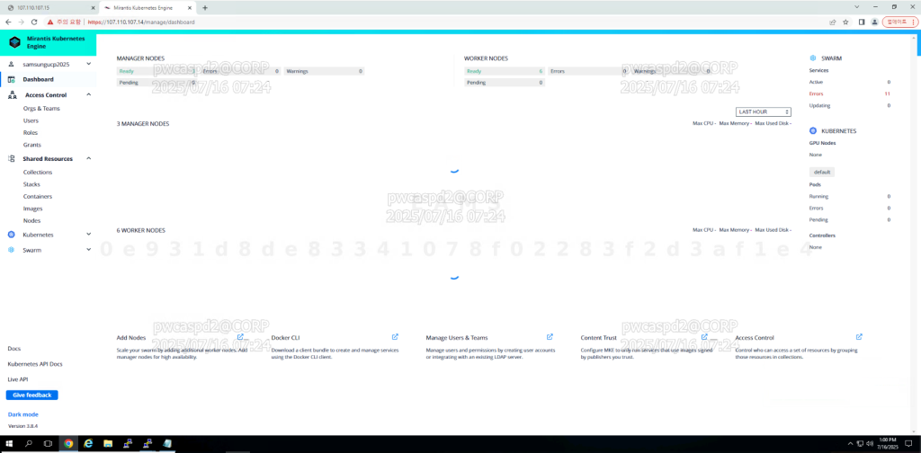

Production Architecture and Node Topology

So what do high availability and easy scalability look like in our production setup?

• 3 MKE manager nodes, to keep the control plane available at all times.

• 5 worker nodes, where all applications run.

• 3 MSR nodes, so the registry had replicas and could scale when necessary.

• 1 MinIO cluster, providing scalable, high-performance object storage.

We found this to be a good tradeoff between performance and security. When a node failed, MKE’s self-healing resolved the issue quickly, with little to no effect on running workloads.
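The choice of three nodes for the replicated groups follows from majority-quorum arithmetic. The sketch below assumes the manager and registry groups stay healthy as long as a strict majority of members is reachable, as in Raft-style consensus; the function names are illustrative.

```python
def quorum_size(members: int) -> int:
    """Smallest strict majority of a group with the given number of members."""
    if members < 1:
        raise ValueError("a group needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


for size in (1, 3, 4, 5):
    print(size, quorum_size(size), failures_tolerated(size))
```

With three members the quorum is two, so one node can fail. A fourth member raises the quorum to three without tolerating any extra failure, which is why odd group sizes are the usual choice.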

Figures: Mirantis node management UI, Mirantis Secure Registry (MSR) UI, and MSR repository view.

UI & Observability

One of the best things about Mirantis is the web dashboards for MKE and MSR. These made it easy to:

• Monitor cluster health and view pod logs.

• View CPU, memory, and disk usage per node.

• Work with namespaces, roles, and deployments without needing the CLI.

This was a real help for teammates who weren’t comfortable doing all of this from the CLI.

Figure: MKE cluster overview UI.

Documentation Experience

Another reason this deployment went smoothly was the Mirantis documentation. The guides were:

• Clear and simple to follow.

• Full of working examples.

• Updated regularly.

Despite our unusual case (an air-gapped system), the docs covered what we needed for installation, HA design, image handling, and best practices.

Outcome and Takeaways

By combining MKE, MSR, MCR, and MinIO, we delivered a fully cloud-agnostic, production-grade Kubernetes deployment: 100% self-sufficient and tuned for our specific use case. Once set up, the cluster did not depend on the internet for updates, images, or any post-install activity. Everything stayed inside the network.

Today, all applications run securely in their own cluster, using container images that are stored and versioned locally in MSR. MinIO is the internal object storage layer for application data and backups. The dashboards and monitoring let us examine workloads, resource usage, and node health in real time, which makes operations smoother and more predictable.
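Keeping every image local means that workload manifests must point at the internal registry rather than a public one. A minimal sketch of that check follows. It assumes the common Docker convention for spotting a registry host in an image reference, and the hostname `msr.example.internal` is hypothetical.

```python
INTERNAL_REGISTRY = "msr.example.internal"  # hypothetical hostname, not our real one


def split_registry(image: str) -> tuple[str, str]:
    """Split an image reference into (registry, remainder).

    Convention assumed here: the first path component is a registry host only
    if it contains '.' or ':' or equals 'localhost'. An empty registry means
    the reference relies on the runtime's default (public) registry.
    """
    first, sep, rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest
    return "", image


def to_internal(image: str, registry: str = INTERNAL_REGISTRY) -> str:
    """Rewrite a reference so it is pulled from the internal registry."""
    _, remainder = split_registry(image)
    return f"{registry}/{remainder}"


def external_images(images: list[str], registry: str = INTERNAL_REGISTRY) -> list[str]:
    """Return the references that would not be served by the internal registry."""
    return [image for image in images if split_registry(image)[0] != registry]
```

For example, `to_internal("docker.io/library/nginx:1.25")` yields `msr.example.internal/library/nginx:1.25`. Running `external_images` over the image list of a manifest before deployment flags anything that would try to reach outside the network. The sketch does not expand short names such as `nginx` into `library/nginx`.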

The final setup balances security, flexibility, and control, which is exactly what a restricted enterprise environment calls for.

Key Wins:

• Kubernetes in a 100% air-gapped environment

• On-prem pipeline for images

• High availability across services

• Local object storage with MinIO

• Easy management with web dashboards

Conclusion

This journey demonstrated that running Kubernetes at enterprise scale does not have to mean running it in the cloud. With Mirantis, we built a production-grade platform within a completely air-gapped environment, something many believed was too complex and restrictive to attempt.

MKE, MSR, MCR, and MinIO gave us exactly what we wanted: security, scalability, and reliability, all without depending on external connectivity. From image management to storage, everything remained local, and operations stayed fast and secure.

For enterprises in a similar bind, our experience with Mirantis shows that cloud-native can still work on premises. It’s not a mere workaround; it’s evidence that agility and compliance can go hand in hand.

In other words, Mirantis helped us bring the cloud home.