How Amazon MSK Helped Us Stop Babysitting Kafka

Introduction

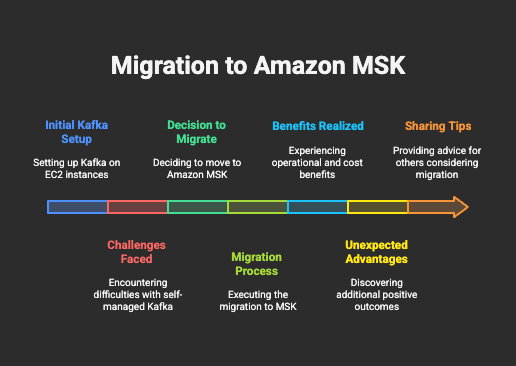

We ran Kafka in our data centre for years, then moved to AWS and ran it on EC2. As our usage grew, so did the headaches. Between broker upgrades, ZooKeeper problems, imbalanced partitions, and cross-AZ data transfer charges, we began to feel that we were spending more time managing Kafka than building anything of value.


So we tried Amazon MSK to see whether it could genuinely make life easier rather than just serve as a drop-in replacement. Spoiler alert: it did, and it also surprised us along the way. If you want to know how we migrated from Kafka on EC2 to AWS MSK, check out this blog. In this post, I’ll briefly discuss the benefits of migrating to AWS MSK. Let’s get started!

Why We Migrated to AWS MSK

Our aim was simple:

- Stop managing Kafka manually

- Maintain full access to Kafka APIs (no weird wrappers)

- Improve stability and, most importantly, reduce costs

MSK ticked all the boxes. It handles broker provisioning, patching, and ZooKeeper (yes, still required for now). We didn’t have to change our producers or consumers at all. What really stood out, though, were the things we didn’t expect.

What Surprised Us (In a Good Way)

1. Rack Awareness = Real Cost Savings

When we enabled rack awareness in MSK, our EMR consumers started pulling data from brokers in the same AZ. That meant less cross-AZ traffic, and that meant lower bills. We saved close to $100 a day just from that one configuration. It was easy and wild at the same time. Most people miss this. It’s buried in the settings, but it makes a huge difference. Check out this blog to learn how we enabled rack awareness.
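For readers unfamiliar with the mechanism, here is a minimal sketch of the client side, assuming the standard Kafka "fetch from closest replica" feature: brokers use the rack-aware replica selector, and each consumer sets `client.rack` to the ID of its own Availability Zone so it matches the brokers' `broker.rack`. The helper name, bootstrap address, group ID, and AZ ID below are hypothetical, not taken from our setup.

```python
"""Sketch: consumer settings for fetching from a same-AZ replica.

Assumptions (not from the original post): the helper name, the example
bootstrap address, the group ID, and the AZ ID are all hypothetical.
"""

# Broker-side property that lets a replica in the consumer's rack serve fetches.
BROKER_RACK_AWARE_SETTING = {
    "replica.selector.class":
        "org.apache.kafka.common.replica.RackAwareReplicaSelector",
}


def rack_aware_consumer_config(bootstrap_servers: str, group_id: str, az_id: str) -> dict:
    """Return Kafka consumer properties with client.rack set to the consumer's AZ ID."""
    if not az_id:
        raise ValueError("az_id must be an Availability Zone ID, e.g. 'use1-az1'")
    return {
        "bootstrap.servers": bootstrap_servers,
        "group.id": group_id,
        # Must equal the broker.rack value of the brokers in the same AZ.
        "client.rack": az_id,
    }


if __name__ == "__main__":
    config = rack_aware_consumer_config(
        "b-1.example.internal:9092",  # hypothetical broker address
        "emr-readers",                # hypothetical consumer group
        "use1-az1",                   # hypothetical AZ ID
    )
    for key, value in config.items():
        print(f"{key}={value}")
```

The resulting dictionary can be handed to whichever Kafka client library you use; only the `client.rack` entry is specific to rack awareness.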

2. CloudWatch Metrics Helped

We’ve all seen clusters of random metrics and ignored them. But we took the time to set up dashboards using CloudWatch and OpenSearch logs, and it paid off. We tracked things like:

- Consumer lag (which helped us spot slow jobs early)

- Partition imbalance

- Broker CPU, memory, network, and disk usage


One dashboard helped us catch a misconfigured topic that was logging way too much and eating up storage. We wouldn’t have known otherwise.
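To make the first two metrics concrete, here is a small sketch of how consumer lag and partition imbalance can be computed from raw numbers. It is an illustration under stated assumptions, not the code behind our dashboards: the function names and sample values are hypothetical, and a partition with no committed offset is treated as starting from 0.

```python
"""Sketch: consumer lag and partition imbalance from raw numbers.

Assumptions (not from the original post): function names, sample values,
and treating a missing committed offset as 0 are all illustrative.
"""
from statistics import mean


def consumer_lag(end_offsets: dict, committed_offsets: dict) -> dict:
    """Lag per partition = log end offset minus last committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        committed = committed_offsets.get(partition, 0)  # no commit yet: assume 0
        lag[partition] = max(end - committed, 0)
    return lag


def imbalance_ratio(partitions_per_broker: dict) -> float:
    """Busiest broker's partition count divided by the mean (1.0 = perfectly even)."""
    counts = list(partitions_per_broker.values())
    if not counts or sum(counts) == 0:
        raise ValueError("need at least one broker with at least one partition")
    return max(counts) / mean(counts)


if __name__ == "__main__":
    lag = consumer_lag({0: 1500, 1: 900}, {0: 1200, 1: 900})
    print("lag per partition:", lag)
    print("total lag:", sum(lag.values()))
    print("imbalance:", round(imbalance_ratio({1: 40, 2: 20, 3: 30}), 2))
```

A steadily growing total lag points to a slow consumer, while an imbalance ratio well above 1.0 suggests that one broker is carrying more than its share of partitions.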

3. Custom Configurations Without the Mess

We were used to SSHing into Kafka nodes to tweak config files and then performing rolling restarts of the brokers. With MSK, we didn’t have to. It lets you apply custom Kafka configurations through the AWS console or API. We used this to:

- Reduce the retention period for Kafka topics

- Set compression types (Snappy worked best for us)

- Adjust batch settings for better throughput

- Enable rack awareness
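An MSK configuration is essentially a block of `key=value` broker properties. The sketch below renders such a block for a few of the settings listed above. The retention value and the helper name are hypothetical, and which properties MSK accepts depends on the Kafka version, so treat this as an illustration rather than our actual configuration.

```python
"""Sketch: render broker properties for an MSK custom configuration.

Assumptions (not from the original post): the helper name and the
72-hour retention value are hypothetical examples.
"""

EXAMPLE_SETTINGS = {
    "log.retention.hours": 72,  # hypothetical retention period
    "compression.type": "snappy",
    "replica.selector.class":
        "org.apache.kafka.common.replica.RackAwareReplicaSelector",
}


def render_server_properties(settings: dict) -> bytes:
    """Render key=value lines, sorted for stable diffs, as UTF-8 bytes."""
    lines = []
    for key, value in sorted(settings.items()):
        if "=" in key or "\n" in key or "\n" in str(value):
            raise ValueError(f"invalid property entry: {key!r}")
        lines.append(f"{key}={value}")
    return ("\n".join(lines) + "\n").encode("utf-8")


if __name__ == "__main__":
    # The bytes could then be supplied as the server properties of an MSK
    # configuration, for example through the console, the API, or IaC tooling.
    print(render_server_properties(EXAMPLE_SETTINGS).decode("utf-8"), end="")
```

Keeping the properties in one small, sorted block makes configuration changes easy to review before a new revision is applied to the cluster.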


It felt like managing Kafka, without being on call for every little thing.

4. Security Improvements

- Encryption: MSK supports encryption both in transit and at rest.

- IAM authentication: Supported, but we stayed on plaintext + SASL for now.

- Private connectivity: We used AWS Transit Gateway for connectivity from multiple VPCs, but AWS PrivateLink is also available for stricter security.

Comparison (Kafka on EC2 vs AWS MSK)

| Component | Kafka on EC2 | AWS Managed Streaming for Apache Kafka |
| --- | --- | --- |
| Cross-AZ traffic | High | No data transfer cost between brokers |
| Broker maintenance effort | Manual patching | Handled by AWS |
| Monitoring and logging setup | Custom, flaky | Integration with CloudWatch and OpenSearch |
| Broker/ZooKeeper failures | Frequent | Stabilized |
| High availability | Needs multi-AZ setup and manual tuning | Built-in multi-AZ support |

A Few Tips If You’re Starting with MSK

- Use infrastructure as code (IaC) tools such as Terraform or CloudFormation if you’re managing MSK clusters. It saves time and reduces mistakes.

- Tag everything. Seriously. Clusters, security groups, and even configurations, if possible. It helps later.

- Enable encryption by default. MSK makes it easy, so no reason not to.

- Keep an eye on the broker and ZooKeeper connections. Yes, AWS manages ZooKeeper, but if your app misbehaves, you’ll still feel it.

- Set up dashboards and logging from Day 1 — you’ll thank yourself later.

Conclusion

Amazon MSK didn’t just make Kafka easier—it made it better. We moved from firefighting to focusing on building real data pipelines. It’s not perfect. Cold starts, config propagation delays, and pricing quirks exist. But overall, it’s been one of the most impactful moves we’ve made in our data platform.

If you’re still running Kafka the old-school way, give MSK a shot. Not because it’s new or shiny, but because it just works. Partnering with a managed cloud services provider like TO THE NEW can help you adopt the right architecture and strategies to unlock these savings and improve your Kafka workloads on AWS. Our AWS Certified Architects and DevOps Engineers are committed to saving you time and resources while enhancing business efficiency and reliability.