Enhancing Workflows with Apache Airflow and Docker

Automating complex, multi-step tasks is a core part of modern data work. Apache Airflow is a powerful tool for this: like a conductor, it coordinates tasks so they run in the right order at the right time. Running Airflow in Docker makes it more flexible and portable, because the same containers can run on any machine. In this blog, we’ll explain what Apache Airflow is and how to run it with Docker.

Understanding Apache Airflow

What is Apache Airflow?

Apache Airflow is an open-source platform designed for scheduling and monitoring workflows. It enables users to define, schedule, and execute tasks, making it a valuable tool for managing data pipelines, ETL processes, and more.

Key features of Apache Airflow include:

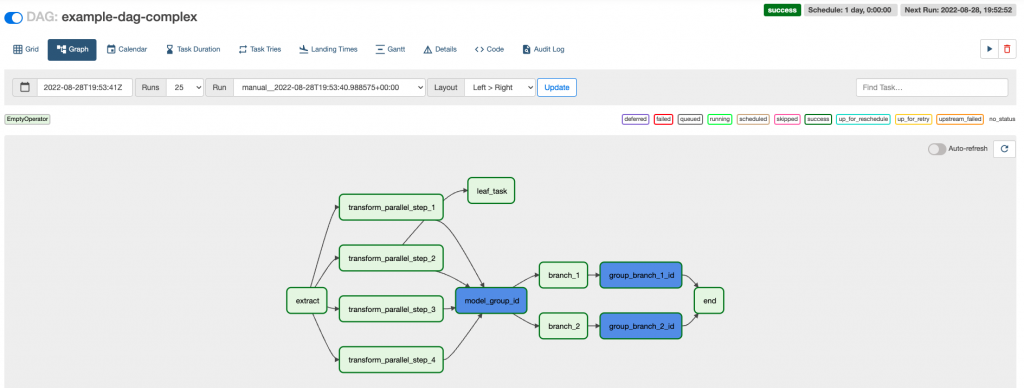

- DAGs (Directed Acyclic Graphs): Workflows are represented as DAGs, where nodes are tasks, and edges define the sequence and dependencies between tasks.

- Task Dependency Management: Airflow allows you to define task dependencies, ensuring tasks run in the desired order.

- Dynamic Workflow Generation: Workflows can be generated dynamically based on parameters or conditions.

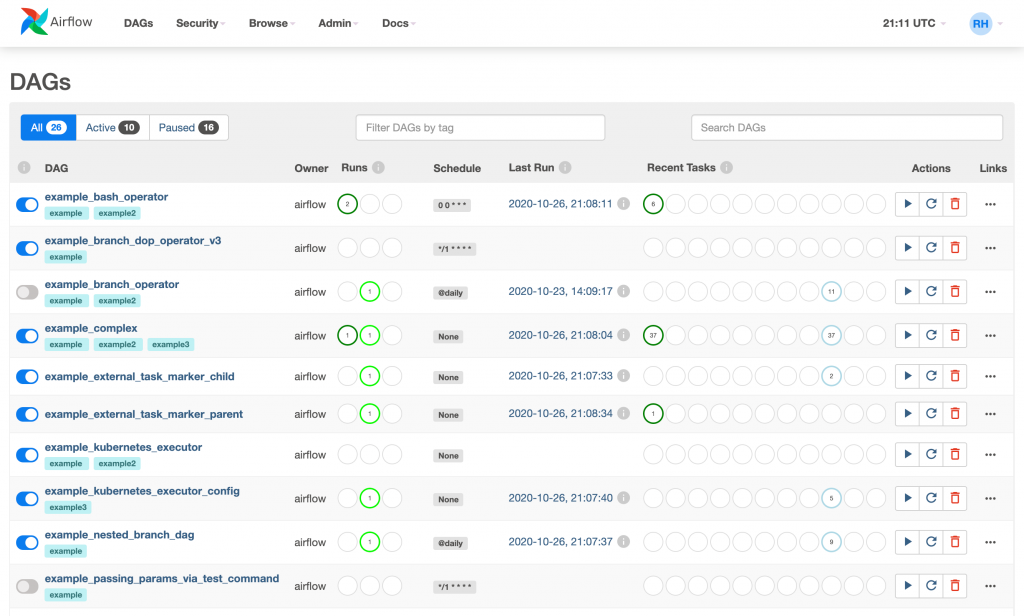

- Rich UI: Airflow provides a web-based UI for easy monitoring, scheduling, and managing workflows.

- Extensible: It’s highly extensible, allowing you to integrate with various systems and tools.
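To make the DAG idea concrete, here is a minimal sketch of a two-task workflow. The shell snippet writes a hypothetical file, dags/hello_docker.py; the file name, dag_id, task names, and echo commands are all illustrative, and the DAG code assumes Airflow 2.4 or later (where the `schedule` argument is available).

```shell
# Create the folder Airflow will scan for DAG files (the name "dags" is an assumption).
mkdir -p dags

# Write a small DAG definition; the quoted 'EOF' keeps the shell from expanding anything inside.
cat > dags/hello_docker.py <<'EOF'
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

# Two tasks (nodes) joined by one dependency (edge).
with DAG(
    dag_id="hello_docker",
    start_date=datetime(2023, 1, 1),
    schedule=None,      # run only when triggered manually
    catchup=False,
) as dag:
    extract = BashOperator(task_id="extract", bash_command="echo extracting")
    load = BashOperator(task_id="load", bash_command="echo loading")

    # "extract" must finish before "load" starts.
    extract >> load
EOF
```

The `extract >> load` line is the edge of the graph: it tells Airflow that load depends on extract.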

How Does Apache Airflow Work?

Apache Airflow uses a distributed architecture in which a scheduler coordinates the work and one or more workers carry it out. The core components include:

- Scheduler: The scheduler schedules workflows based on defined DAGs, ensuring tasks run at the right time.

- Workers: Workers are responsible for executing tasks. Depending on the configured executor, tasks either run locally alongside the scheduler or are picked up from a queue by separate worker processes.

- Metadata Database: Airflow uses a database (typically PostgreSQL or MySQL) to store metadata such as DAG runs, task states, connections, and variables.

- Web Interface: Airflow provides a web UI for workflow monitoring and management.

Dockerizing Apache Airflow

Dockerizing Apache Airflow means putting it inside Docker containers so that it can be easily moved and used anywhere. Here’s how to Dockerize Apache Airflow:

Step 1: Set Up Docker Environment

Ensure you have Docker installed on your system. You can download and install it from the official Docker website.

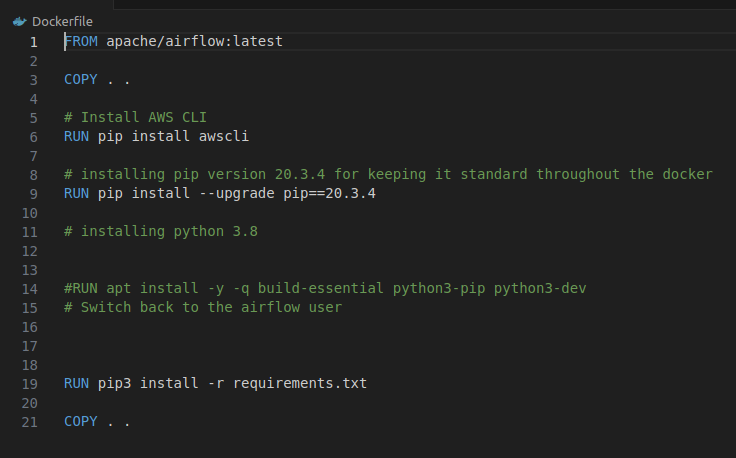

Step 2: Create a Dockerfile

Create a Dockerfile in your Airflow project directory. Here’s a minimal example:
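One possible minimal Dockerfile is sketched below. It assumes you extend the official apache/airflow:2.7.1 image (matching the Airflow version used later in this post) and install extra Python packages from a requirements.txt file. The package listed is only a placeholder. The shell snippet writes both files into the current directory:

```shell
# Illustrative extra dependency; replace with what your DAGs actually import.
printf 'pandas\n' > requirements.txt

# Write the Dockerfile; the quoted 'EOF' prevents shell expansion inside it.
cat > Dockerfile <<'EOF'
# Assumption: build on top of the official Airflow image.
FROM apache/airflow:2.7.1

# Install the extra Python packages your DAGs need.
COPY requirements.txt /requirements.txt
RUN pip install --no-cache-dir -r /requirements.txt
EOF
```

Starting from the official image keeps the Dockerfile short, because Airflow itself and its entrypoint are already set up.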

Step 3: Build the Docker Image

Navigate to your project directory and run the following command to build the Docker image:

- docker build -t my-airflow .

This command creates a Docker image named “my-airflow” based on the Dockerfile in your project directory.

Step 4: Create Docker Compose File

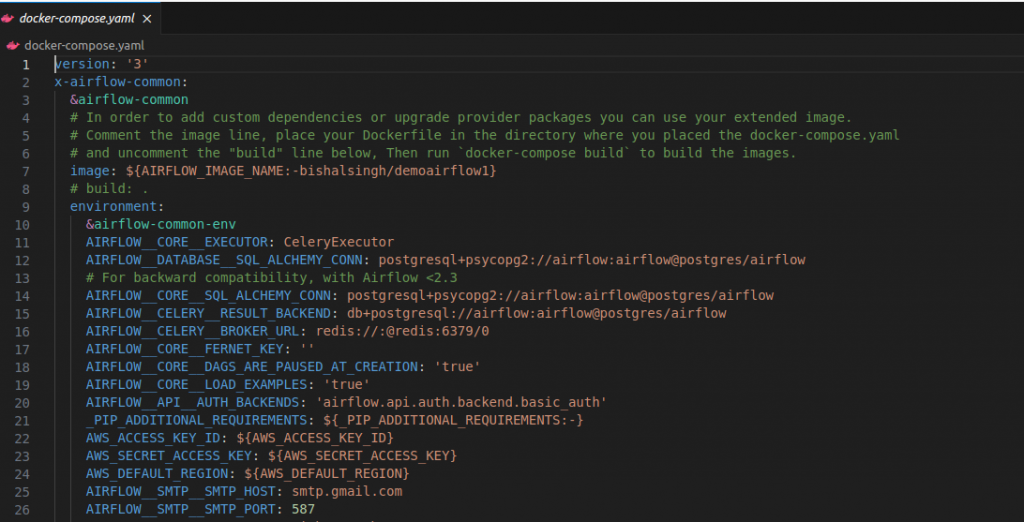

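Docker Compose simplifies managing multi-container applications. Instead of writing a docker-compose.yaml file from scratch, you can download the official one for Airflow 2.7.1:

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.7.1/docker-compose.yaml'

This file defines several services, including the Airflow web server, scheduler, worker, and triggerer, plus PostgreSQL and Redis. It maps port 8080 of the web server container to port 8080 on your host machine. By default the Airflow services run the stock apache/airflow:2.7.1 image; the file reads the image name from the AIRFLOW_IMAGE_NAME variable, so you can point it at “my-airflow” instead.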
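Before the first start, the official Compose file expects a few local directories and a .env file next to it. The following is a sketch of that preparation. The AIRFLOW_IMAGE_NAME line is an assumption that you want to run the “my-airflow” image built in Step 3; leave it out to use the default image.

```shell
# Directories the official Compose file mounts into the containers.
mkdir -p ./dags ./logs ./plugins ./config

# Run the containers with your host user ID so files in the mounts stay writable (mainly needed on Linux).
echo "AIRFLOW_UID=$(id -u)" > .env

# Assumption: use the custom image from Step 3 instead of the default one.
echo "AIRFLOW_IMAGE_NAME=my-airflow" >> .env
```

With these in place, the Airflow documentation suggests running `docker compose up airflow-init` once to set up the database and create the first user account.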

Step 5: Start Airflow in Docker

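Run the following command to start Airflow within Docker containers (with Docker Compose v2, the equivalent is docker compose up):

- docker-compose up

This command starts all the services in the Compose file, including the scheduler and the web server. Once the containers are healthy, you can access the Airflow UI by visiting http://localhost:8080 in your web browser. With the official Compose file, the default login is airflow / airflow.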
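The containers can take a minute or two to become ready. As a rough sketch, a small shell function can poll the web server’s /health endpoint until it answers. The function name, retry count, and delay below are illustrative choices, and the snippet assumes curl is installed.

```shell
# Poll <base_url>/health until it responds or the attempts run out.
# Arguments (all optional): base URL, number of attempts, seconds between attempts.
wait_for_airflow() {
  base_url="${1:-http://localhost:8080}"
  attempts="${2:-30}"
  delay="${3:-5}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl fail on HTTP errors; -sS keeps it quiet except for real errors.
    if curl -fsS "$base_url/health" >/dev/null 2>&1; then
      echo "Airflow is up at $base_url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Airflow did not respond at $base_url" >&2
  return 1
}
```

Calling `wait_for_airflow` with no arguments checks http://localhost:8080 up to 30 times, five seconds apart.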

Difference between Apache Airflow with Docker and without Docker

Apache Airflow without Docker:

Direct Installation: If you don’t use Docker, you usually install Apache Airflow directly on your computer or in a Python virtual environment. This means you have to manage Airflow’s requirements, settings, and setup yourself.

Dependency Management: Managing dependencies for Airflow components and tasks can be more challenging without Docker. You might need to manually install libraries, dependencies, and Python packages required for your workflows. This can lead to compatibility issues and version conflicts.

Isolation: Without Docker, keeping workflows and tasks apart relies on Python virtual environments or separate machines. Docker containers provide stronger isolation, so workloads are less likely to interfere with each other.

Environment Consistency: Ensuring consistent environments across development, staging, and production can be trickier. You need to manually replicate configurations, libraries, and dependencies, which can lead to inconsistencies and deployment challenges.

Scaling: Scaling Airflow clusters can be more complicated without Docker, as you’ll need to manage additional VMs or servers manually. Scaling up or down might require more manual effort.

Apache Airflow with Docker:

Containerization: Docker allows you to containerize each Airflow component (e.g., Scheduler, Workers, Web UI). This means you can package everything your workflows need, including dependencies, configurations, and code, into a container image.

Dependency Isolation: Docker containers keep everything a workflow needs in one separate place. This way, they don’t interfere with each other and work the same way no matter where they run.

Ease of Deployment: Docker images can be easily created and shared, making it simpler to replicate and deploy workflows across various environments. Tools like Docker Compose and Kubernetes make it easy to manage and scale Airflow clusters.

Resource Management: Docker provides better control over resource allocation and isolation. You can specify CPU and memory limits for each container, ensuring that one task doesn’t impact the performance of others.
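As an illustration of such limits, the sketch below writes a Compose override file that caps the worker’s CPU and memory. It assumes the worker service is named airflow-worker, as in the official Compose file, and the values (one CPU, 2 GB) are arbitrary examples.

```shell
# Write an override file that Compose can merge on top of docker-compose.yaml.
cat > docker-compose.override.yaml <<'EOF'
# Assumption: airflow-worker is the worker service name in the official Compose file.
services:
  airflow-worker:
    cpus: 1.0        # at most one CPU core
    mem_limit: 2g    # hard memory cap
EOF
```

To be explicit about which files are combined, you can start the stack with `docker compose -f docker-compose.yaml -f docker-compose.override.yaml up`.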

Version Control: Docker images can be versioned and stored in container registries, making it easier to track changes and roll back to previous versions if needed.

Portability: Docker containers are highly portable, allowing you to run Airflow workflows consistently across cloud providers or on-premises environments.

Conclusion

Apache Airflow makes it easier to manage complex workflows, and running it in Docker containers lets it behave the same way on different systems. Together, they help organizations automate data work and run data operations more reliably.