Cassandra Migration from OpsWorks to AWS SSM Application Manager

Introduction

AWS OpsWorks is a robust configuration management service designed to simplify infrastructure and application management. It automates tasks, streamlines operations, and ensures application reliability by simplifying the provisioning and configuration of resources like Amazon EC2 instances and databases. OpsWorks offers flexibility through support for Chef recipes and cookbooks, allowing users to maintain consistency in deployments and reduce configuration errors. Integration with other AWS services like CloudWatch and S3 enhances monitoring and artifact storage capabilities, empowering users to leverage the full potential of the AWS ecosystem.

With OpsWorks deprecated, AWS offers Application Manager, a capability of AWS Systems Manager (SSM), as an alternative. This transition not only ensures continued support and functionality but also gives users access to the wider set of Systems Manager features.

Problem Statement/Objective/Scenario

Our Cassandra cluster is managed by AWS OpsWorks. However, due to the deprecation of OpsWorks and the cessation of support, we are compelled to seek an alternative solution.

AWS offers an alternative: Application Manager, a feature of AWS Systems Manager (SSM) that provides capabilities similar to OpsWorks, including the ability to execute Chef recipes for automation.

Therefore, our objective is to migrate our Cassandra cluster from AWS OpsWorks to AWS Application Manager, ensuring continuity and efficiency in our infrastructure management.

Solution Approach

To migrate our Cassandra database from OpsWorks to AWS Application Manager, we’ll use an AWS-provided Python script. This script generates a CloudFormation template, which acts as a blueprint for recreating our OpsWorks setup in Application Manager. We just need to provide some details, such as our OpsWorks stack name and layer ID.

Once we run the script, it sets up everything we need in Application Manager, including auto-scaling groups, launch templates, and recipes to configure our Cassandra cluster. These recipes ensure that our database cluster is set up exactly how we want it. We are using the same recipes that were used in AWS OpsWorks to set up the Cassandra nodes.

This approach makes the migration process straightforward and automated. It ensures that our Cassandra setup in Application Manager matches what we had in OpsWorks, with minimal effort and a lower risk of manual mistakes.

The bare-bones infrastructure can be set up using the above approach. The next step is to set up the application: we need to establish the Cassandra cluster on the new infrastructure we’ve created using Application Manager.

There are several approaches to creating a Cassandra cluster.

- Rolling: One option is to add newly provisioned Cassandra instances to the existing cluster one by one, and then gradually remove the old instances.

- New Datacenter: Another approach is to create a new data center and migrate the data from the old data center to the new one. Once all data has been replicated over, we can decommission the old cluster nodes one by one, resulting in the new data center being provisioned through the Application Manager.

We will proceed with the second approach, which involves creating a new data center and then copying the data from the existing data center to the new one. This method allows for a systematic migration process while ensuring minimal disruption to the overall cluster operations.

Prerequisites

- Ensure that all keyspaces use the NetworkTopologyStrategy replication strategy. Check each keyspace with the command below:

#DESCRIBE KEYSPACE <my_ks>;

- If any keyspace is not configured this way, update it with the command below, as this is essential for a multi-datacenter architecture:

#ALTER KEYSPACE <my_ks> WITH replication = { 'class': 'NetworkTopologyStrategy', '<dc_name>': '<replication_factor>' };

- Check that all applications interacting with Cassandra use the LOCAL_QUORUM consistency level, and update any that do not. With plain QUORUM, requests may be routed to the new data center before it is fully configured, potentially leading to data inconsistencies.
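The keyspace check in the first prerequisite can be done for all keyspaces at once. Below is a minimal sketch, assuming the replication settings have already been read into plain dictionaries shaped like the `replication` column of the `system_schema.keyspaces` table (Cassandra 3.0 and later). The function names and the sample keyspaces are hypothetical and only serve as illustration.

```python
# Hypothetical helper: flag keyspaces that still need an ALTER KEYSPACE
# before a second data center can be added.

NTS = "NetworkTopologyStrategy"
# LocalStrategy keyspaces are node-local and are left alone.
LOCAL = "LocalStrategy"


def short_class(replication):
    """Return the strategy class name without its Java package prefix."""
    return replication.get("class", "").rsplit(".", 1)[-1]


def keyspaces_to_convert(keyspaces):
    """keyspaces maps keyspace name -> replication dict.

    Returns the sorted names whose strategy is neither
    NetworkTopologyStrategy nor LocalStrategy.
    """
    return sorted(
        name
        for name, replication in keyspaces.items()
        if short_class(replication) not in (NTS, LOCAL)
    )


# Sample data, made up for illustration only.
sample = {
    "system": {"class": "org.apache.cassandra.locator.LocalStrategy"},
    "orders": {
        "class": "org.apache.cassandra.locator.SimpleStrategy",
        "replication_factor": "3",
    },
    "users": {
        "class": "org.apache.cassandra.locator.NetworkTopologyStrategy",
        "dc1": "3",
    },
}
print(keyspaces_to_convert(sample))
```

Any keyspace this returns is a candidate for the ALTER KEYSPACE command from the second prerequisite.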

Solution/Step-by-Step Procedure

1. Execute the Python script provided by AWS to begin the migration process and provide the required arguments, including the stack name, layer ID, region, and Git SSH key.

2. Provision the desired number of EC2 instances within the auto-scaling group generated by the CloudFormation template. While waiting for the instances to come up, update the Cassandra configuration files as shown below.

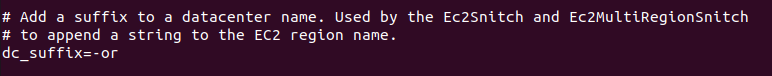

3. Update the cassandra-rackdc.properties file to add a suffix to the data center name:

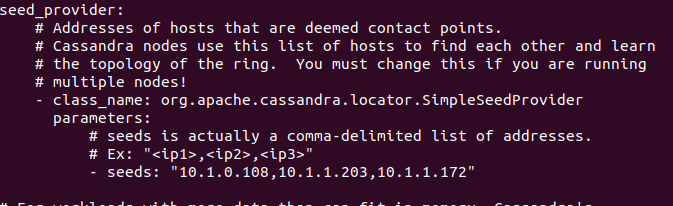

4. Update the cassandra.yaml file by adding one of the seed nodes from the old cluster, keeping the existing IPs of the current datacenter.
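The two file changes in steps 3 and 4 can be scripted. The sketch below is only illustrative: it assumes the suffix is applied through the `dc_suffix` property of cassandra-rackdc.properties (the screenshot above is the authoritative reference for what was actually changed) and that the seeds are held as a comma-separated string, as in the `seeds` parameter of cassandra.yaml. The helper names and IP addresses are hypothetical.

```python
def set_property(text, key, value):
    """Set key=value in Java-properties-style text, appending it if absent.

    Comment lines (starting with '#') are left untouched.
    """
    lines = text.splitlines()
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("#") or "=" not in stripped:
            continue
        if stripped.split("=", 1)[0].strip() == key:
            lines[i] = f"{key}={value}"
            break
    else:
        # No existing entry was found, so add a new one at the end.
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


def merge_seeds(current_seeds, old_cluster_seed):
    """Add one old-cluster seed to a comma-separated seeds string,
    keeping the existing IPs and avoiding duplicates."""
    seeds = [s.strip() for s in current_seeds.split(",") if s.strip()]
    if old_cluster_seed not in seeds:
        seeds.append(old_cluster_seed)
    return ",".join(seeds)


# Made-up values for illustration.
print(set_property("dc=us-west\nrack=1a\n", "dc_suffix", "-or"))
print(merge_seeds("10.0.1.10, 10.0.1.11", "10.0.0.5"))
```

Keeping both helpers as pure string functions makes them easy to call from whatever mechanism renders the configuration files, such as a Chef template or a bootstrap script.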

5. Once all instances are up and running, apply the Chef recipes from the Application Manager console on each instance individually. This brings up the Cassandra cluster.

6. Executing the recipes creates a new Cassandra cluster with a different data center name. This change occurs because we updated the cassandra-rackdc.properties file to include a suffix (“-or” in this case), which in turn modifies the data center name.

7. To add new nodes to the newly created cluster, execute the below command on each node. We can add the below command in the recipes executed in the previous step.

#service cassandra start

8. Enable writes to the new data center by adding it to the replication strategy. To do this, execute the following CQL command on all keyspaces, except for those using the local replication class (LocalStrategy):

#ALTER KEYSPACE <my_ks> WITH replication = { 'class': 'NetworkTopologyStrategy', '<old_dc_name>': '<replication_factor>', '<my_new_dc>': '<replication_factor>' };
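When there are many keyspaces, the statement above can be generated instead of typed by hand. This is a minimal sketch with a hypothetical helper name; the keyspace and data center names in the example are made up, and the helper assumes they come from a trusted source because it does no quoting or escaping of identifiers.

```python
def build_alter_keyspace(keyspace, dc_replication):
    """Build an ALTER KEYSPACE statement for NetworkTopologyStrategy.

    dc_replication maps data center name -> replication factor.
    """
    if not dc_replication:
        raise ValueError("at least one data center is required")
    options = ["'class': 'NetworkTopologyStrategy'"]
    for dc, rf in dc_replication.items():
        # int() rejects anything that is not a whole number.
        options.append(f"'{dc}': '{int(rf)}'")
    joined = ", ".join(options)
    return f"ALTER KEYSPACE {keyspace} WITH replication = {{ {joined} }};"


# Made-up names: old data center "us-west", new data center "us-west-or".
print(build_alter_keyspace("orders", {"us-west": 3, "us-west-or": 3}))
```

Passing only the new data center in `dc_replication` yields the form of the statement that drops the old data center from replication.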

9. Stream the existing data from the current data center to the new data center to fill the gap, as the new cluster is now receiving writes but is still lacking all the past data. This process entails executing the following command on all nodes of the new data center:

#nodetool rebuild -- <old_dc_name>

In case the command fails with a timeout error, run it again.
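The rerun-on-failure advice can be wrapped in a small retry helper. This is a sketch under stated assumptions: the function name, attempt count, and delay are hypothetical, and the helper retries on any non-zero exit code rather than on timeouts specifically.

```python
import subprocess
import time


def run_with_retries(command, attempts=3, delay_seconds=30,
                     runner=subprocess.run, sleep=time.sleep):
    """Run command until it exits with code 0 or attempts are exhausted.

    Returns the number of attempts used; raises RuntimeError on failure.
    runner and sleep are injectable so the logic can be tested offline.
    """
    for attempt in range(1, attempts + 1):
        result = runner(command)
        if result.returncode == 0:
            return attempt
        if attempt < attempts:
            # Give the cluster some time before the next try.
            sleep(delay_seconds)
    raise RuntimeError(f"{command!r} failed after {attempts} attempts")
```

On a node in the new data center, it could be called as `run_with_retries(["nodetool", "rebuild", "--", "<old_dc_name>"])`, with the placeholder replaced by the real name of the old data center.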

10. Verify replication between the two data centers by inserting a test row from a node in the old data center and reading it back from a node in the new data center.

11. Once we are done with the testing and decide to go ahead with the decommissioning of the current datacenter, we need to follow the below steps.

Alter the keyspaces so they no longer reference the old data center by executing the following command:

#ALTER KEYSPACE <my_ks> WITH replication = { 'class': 'NetworkTopologyStrategy', '<my_new_dc>': '<replication_factor>' };

12. Finally, to remove the old nodes cleanly, execute the following command on the old nodes one by one:

#nodetool decommission
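Between decommissions, it can help to confirm that the remaining nodes are healthy before moving on to the next one. The sketch below parses text in the general layout printed by `nodetool status` into a per-data-center list of node states. The parser name and the sample text are hypothetical, and the exact column layout can differ between Cassandra versions, so treat it as a starting point rather than a finished tool.

```python
def parse_status(output):
    """Parse nodetool-status-style text into {datacenter: [(state, address)]}.

    state is the two-letter code, e.g. 'UN' for Up/Normal or 'UL' for
    Up/Leaving.
    """
    nodes = {}
    current_dc = None
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("Datacenter:"):
            current_dc = line.split(":", 1)[1].strip()
            nodes[current_dc] = []
            continue
        parts = line.split()
        # Node rows start with a status letter (U/D) and a state letter (N/L/J/M).
        if (current_dc and len(parts) >= 2 and len(parts[0]) == 2
                and parts[0][0] in "UD" and parts[0][1] in "NLJM"):
            nodes[current_dc].append((parts[0], parts[1]))
    return nodes


def all_up_normal(nodes, datacenter):
    """True if the data center has nodes and every one of them is 'UN'."""
    entries = nodes.get(datacenter, [])
    return bool(entries) and all(state == "UN" for state, _ in entries)


# Made-up sample text for illustration.
sample_status = """\
Datacenter: us-west
===================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address    Load     Tokens  Owns  Host ID  Rack
UL  10.0.0.5   1.2 GiB  256     ?     aaaa     1a
Datacenter: us-west-or
======================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address    Load     Tokens  Owns  Host ID  Rack
UN  10.0.1.10  1.1 GiB  256     ?     bbbb     1a
UN  10.0.1.11  1.1 GiB  256     ?     cccc     1b
"""
parsed = parse_status(sample_status)
print(parsed)
print(all_up_normal(parsed, "us-west-or"))
```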

Debugging

During the migration process from OpsWorks to AWS Application Manager, we faced a challenge installing Chef on the AMI. Currently, the Chef agent is only installed on certain AMIs. Hence, it’s crucial to be cautious when selecting the AMI for your instances. Ensure that you opt for an AMI where Chef can be successfully installed. If the Chef agent is not installed on instances, it will prevent the execution of recipes responsible for bringing up the Cassandra cluster.

Conclusion

In conclusion, migrating our Cassandra database from OpsWorks to AWS Application Manager using the provided Python script streamlines the process by generating a CloudFormation template. This template efficiently recreates our OpsWorks setup within the Application Manager, ensuring a seamless transition with minimal manual intervention and reduced risk of errors. Once the infrastructure is established, we proceed to deploy the Cassandra cluster on the new setup.

Choosing the second approach, which entails creating a new data center and transferring data from the old one, ensures a methodical migration with minimal disruption to cluster operations. This strategy supports a smooth transition to Application Manager while preserving the continuity and reliability of our Cassandra deployment.
