Optimization of AngularJS Single-Page Applications for Web Crawlers

You have probably visited websites built with AngularJS. If not, here are a few familiar sites that use it:

- YouTube

- Netflix

- Weather

- iStockphoto

AngularJS has been widely adopted for web development around the world, and for good reason: like React, it is a JavaScript framework that improves both the developer experience and the user experience of a website.

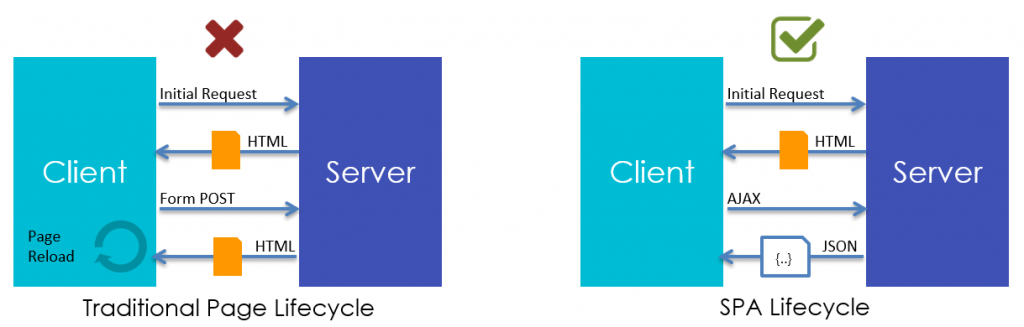

As a quick recap, ReactJS and AngularJS are part of a web design approach called single-page applications, or SPAs. A conventional website loads each individual page on request as the user navigates through the site, rendering it from resources fetched from the server and cache.

A single-page application, on the other hand, loads the site when the user first lands on a page. Instead of requesting resources on each click, the site dynamically updates a single HTML page.

ReactJS and AngularJS use JavaScript to render the page, which improves the user experience through faster page loads. All of the loading work is taken care of behind the scenes.

Sadly, in Angular- and React-based applications, this work is hidden from web crawlers as well as from users. Web crawlers rely on HTML to interpret and render the content of a web page. When the HTML and CSS are generated inside scripts, crawlers have no content to crawl, index, and present in search results.

Google states that it can crawl JavaScript (and SEOs have tested and supported this claim), but even so, web crawlers still struggle to crawl sites built on an SPA framework.

Another issue is capturing Google Analytics data. Analytics records a pageview each time a user clicks and navigates to a page. How can it track a website that doesn’t send a new HTML response to trigger a pageview?

The process below can help SPA websites not only get indexed more easily but also rank on the first page for their target keywords.

Steps to do SEO for Single Page Applications

- Create a list of all pages on the website

- Install Prerender

- “Fetch as Google” using GSC

- Configure Google Analytics

- Recrawl the site

1) Create a list of all pages on your site

Though it might sound tedious, this is the basic step that everything else depends on: collecting every page on your website. You can use the website’s XML sitemap to get the list of all its URLs.

You can also refer to the master list of products (in the case of an e-commerce website) or map out the directory and sub-directory structure to find all the web pages on the website.
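As a rough illustration of pulling the URL list out of an XML sitemap, here is a minimal sketch. The function name `extractSitemapUrls`, the sample sitemap, and the example.com URLs are all hypothetical, and the sketch reads `<loc>` entries with a regular expression rather than a full XML parser.

```javascript
// Collect every <loc> URL from the text of an XML sitemap.
// Assumption: the sitemap is small enough to hold in memory as a string.
function extractSitemapUrls(sitemapXml) {
  const urls = [];
  const locPattern = /<loc>\s*([^<]+?)\s*<\/loc>/g;
  let match;
  while ((match = locPattern.exec(sitemapXml)) !== null) {
    // Sitemaps escape "&" as "&amp;", so restore it.
    urls.push(match[1].replace(/&amp;/g, "&"));
  }
  // Remove duplicates while keeping the original order.
  return [...new Set(urls)];
}

// Hypothetical sitemap used only for demonstration.
const sampleSitemap = `<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/products/blue-widget</loc></url>
  <url><loc> https://www.example.com/ </loc></url>
</urlset>`;

console.log(extractSitemapUrls(sampleSitemap));
```

A sitemap index file that points to other sitemaps would need one extra pass, since its `<loc>` entries are sitemap files rather than pages.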

2) Install Prerender

Prerender (free for up to 250 pages per website) is a tool that renders a web page in a basic browser and then serves the resulting static HTML to web crawlers. From an SEO viewpoint, Prerender helps search engine crawlers find indexable content.

Using the list created in step 1, you can decide which pages should be served to search engines for crawling. I suggest including all of your important (SEO-targeted) pages.

Note: Prerender will be expensive for larger websites. If you have development capabilities, you can create a similar tool using PhantomJS or SlimerJS.
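To make the idea concrete, the decision a prerendering setup has to make on each request can be sketched as follows. This is not Prerender’s own code: the crawler list, the function names `isCrawler` and `shouldServeSnapshot`, and the sample paths are assumptions made for illustration.

```javascript
// Substrings that identify common search engine crawlers (an illustrative,
// incomplete list; this is not Prerender's own detection logic).
const CRAWLER_TOKENS = ["googlebot", "bingbot", "yandex", "baiduspider", "duckduckbot"];

// File extensions for static assets, which never need an HTML snapshot.
const STATIC_ASSET = /\.(js|css|png|jpe?g|gif|svg|ico|woff2?)$/i;

function isCrawler(userAgent) {
  const ua = (userAgent || "").toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.includes(token));
}

// seoPaths is the set of paths chosen from the page list built in step 1.
function shouldServeSnapshot(userAgent, requestPath, seoPaths) {
  const path = requestPath.split("?")[0]; // ignore the query string
  if (STATIC_ASSET.test(path)) return false;
  return isCrawler(userAgent) && seoPaths.has(path);
}

// Hypothetical inputs for demonstration.
const seoPaths = new Set(["/", "/products/blue-widget"]);
const googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
const chrome = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/120.0 Safari/537.36";

console.log(shouldServeSnapshot(googlebot, "/products/blue-widget", seoPaths)); // true
console.log(shouldServeSnapshot(chrome, "/products/blue-widget", seoPaths));    // false
console.log(shouldServeSnapshot(googlebot, "/cart", seoPaths));                 // false
```

Regular visitors keep getting the normal SPA, while crawlers requesting a listed page would be handed the static HTML snapshot instead.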

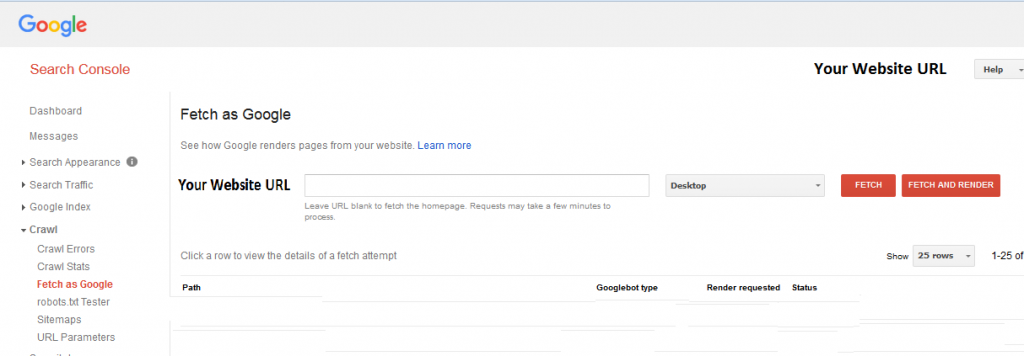

3) “Fetch as Google” using Google Search Console

The Fetch as Google tool lets you test how Google crawls and renders a URL on your website. You can use it to see the HTTP response and how Google renders the page. It also provides a screenshot of how Googlebot rendered the page alongside how a visitor would see it.

For some pages, even with Prerender installed, you will find that Google still renders only part of the content. Fetch as Google shows you exactly what still needs to be optimized.

4) Configure Google Analytics (or Google Tag Manager)

As mentioned above, SPAs have tracking issues in Google Analytics. You will need to install a plugin called Angulartics, which replaces the standard pageview events with virtual pageview tracking.

Virtual pageviews are captured based on the user’s journey through and interactions with the website.
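The idea behind virtual pageviews can be sketched without any particular plugin. The example below is not the Angulartics API: `createPageviewTracker`, the shape of the hit object, and the `send` callback are hypothetical stand-ins for whatever your analytics setup provides.

```javascript
// Build a tracker that turns SPA route changes into virtual pageviews.
// "send" is whatever function forwards a hit to your analytics tool
// (an assumption for this sketch; here it is injected by the caller).
function createPageviewTracker(send) {
  let lastPath = null;
  return function onRouteChange(path, title) {
    // Ignore repeated events for the view that is already showing.
    if (path === lastPath) return false;
    lastPath = path;
    send({ hitType: "pageview", page: path, title: title });
    return true;
  };
}

// Demonstration: collect hits in an array instead of sending them anywhere.
const hits = [];
const track = createPageviewTracker((hit) => hits.push(hit));

track("/products", "Products");
track("/products", "Products"); // duplicate, not recorded
track("/products/blue-widget", "Blue Widget");

console.log(hits.length); // 2
```

In a real application, `onRouteChange` would be called from the router’s navigation event so that every view change is recorded even though no new HTML document is loaded.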

5) Recrawl the site

After working through steps 1 to 4, you should crawl the website on your own. Crawling it yourself can surface issues before Google’s crawler runs into them. Though it is a tedious task, you must go through each and every URL of the website to make sure all of them can be indexed.
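When checking each URL, it helps to have a quick test for whether the HTML a crawler receives actually contains rendered content. The sketch below is one possible set of checks, not a complete audit: the function name `auditSnapshot`, the 50-character threshold, and the sample HTML are assumptions.

```javascript
// Report signs that a page's HTML was not fully rendered for crawlers.
// minTextLength is an arbitrary threshold chosen for this sketch.
function auditSnapshot(html, minTextLength = 50) {
  const issues = [];

  // Leftover AngularJS bindings mean the template was never rendered.
  if (/\{\{[^}]*\}\}/.test(html)) issues.push("unrendered {{ }} binding");

  const title = /<title>([^<]*)<\/title>/i.exec(html);
  if (!title || title[1].trim() === "") issues.push("missing or empty <title>");

  // Strip scripts, styles, and tags to estimate the text a crawler can read.
  const text = html
    .replace(/<script[\s\S]*?<\/script>/gi, " ")
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
  if (text.length < minTextLength) issues.push("very little visible text");

  return issues;
}

// Hypothetical examples: an empty SPA shell and a prerendered page.
const shellHtml =
  '<html><head><title></title></head><body><div ng-view></div>' +
  '<script src="app.js"></script></body></html>';
const renderedHtml =
  "<html><head><title>Blue Widget | Example Store</title></head><body>" +
  "<h1>Blue Widget</h1><p>A sturdy blue widget, available in three sizes " +
  "and shipped worldwide.</p></body></html>";

console.log(auditSnapshot(shellHtml));    // two issues reported
console.log(auditSnapshot(renderedHtml)); // no issues reported
```

Running a check like this over the URL list from step 1 gives a short list of pages to inspect by hand.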

Results

By following the five steps above, you can achieve better indexing of your website’s pages. Better indexing results in better search engine visibility and rankings.

Within a few months of this implementation, you should see organic traffic to your website increase.

I agree that SEO for single-page applications is a tedious job. However, it is not impossible.

PS: A common alternative is to keep the SPA part of the website separate from the marketing website. For example, you could build only the screens a person sees after logging into the application as an SPA. This makes it a lot easier to manage all your SEO and marketing efforts.

